DeepSeek-R1 and DeepSeek-V3 have caused a global sensation since they were open-sourced.

They are a gift from the DeepSeek team to all of humanity, and we are sincerely happy for their success.

After days of hard work by the SiliconFlow and Huawei Cloud teams, today we are giving Chinese users a Chinese New Year gift of our own: SiliconCloud, our large-model cloud service platform, has launched DeepSeek-V3 and DeepSeek-R1, running on Huawei Cloud's Ascend cloud service.

We want to emphasize that DeepSeek and Huawei Cloud gave us great support, both in adapting DeepSeek-R1 & V3 to Ascend and in launching other models before this, and we would like to express our deep gratitude and high respect.

Features

The DeepSeek-R1 and DeepSeek-V3 services launched on SiliconCloud offer five main features:

Built on Huawei Cloud's Ascend cloud service, we have launched the DeepSeek x SiliconFlow x Huawei Cloud R1 & V3 model inference service for the first time.

Through joint innovation between SiliconFlow and Huawei Cloud, and with the support of our self-developed inference acceleration engine, the DeepSeek models deployed by the SiliconFlow team on Huawei Cloud's Ascend cloud service achieve results on par with deployments on the world's high-end GPUs.

Stable, production-grade DeepSeek-R1 & V3 inference services. Developers can run reliably in large-scale production environments and meet the needs of commercial deployment, backed by the abundant, elastic computing power of Huawei Cloud's Ascend AI services.

No deployment barrier, so developers can focus on building applications: they call the SiliconCloud API directly, which offers a simpler, more user-friendly experience.
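As an illustration of calling the API directly, here is a minimal sketch that only builds an HTTP request without sending it. It assumes SiliconCloud exposes an OpenAI-style chat completions endpoint; the base URL `https://api.siliconflow.cn/v1`, the model ID `deepseek-ai/DeepSeek-R1`, and the `SILICONCLOUD_API_KEY` environment variable are assumptions for illustration, so check the API documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumed values: verify both against the SiliconCloud API documentation.
BASE_URL = "https://api.siliconflow.cn/v1"  # assumed endpoint root
DEFAULT_MODEL = "deepseek-ai/DeepSeek-R1"   # assumed model ID


def build_chat_request(prompt: str, api_key: str,
                       model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # SILICONCLOUD_API_KEY is a hypothetical variable name for this sketch.
    key = os.environ.get("SILICONCLOUD_API_KEY", "")
    req = build_chat_request("Hello, DeepSeek!", key)
    # To actually send it: urllib.request.urlopen(req), then json.load the response.
    print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect; once the endpoint and model ID are confirmed, passing the request to `urllib.request.urlopen` (or using any OpenAI-compatible client) would perform the call.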

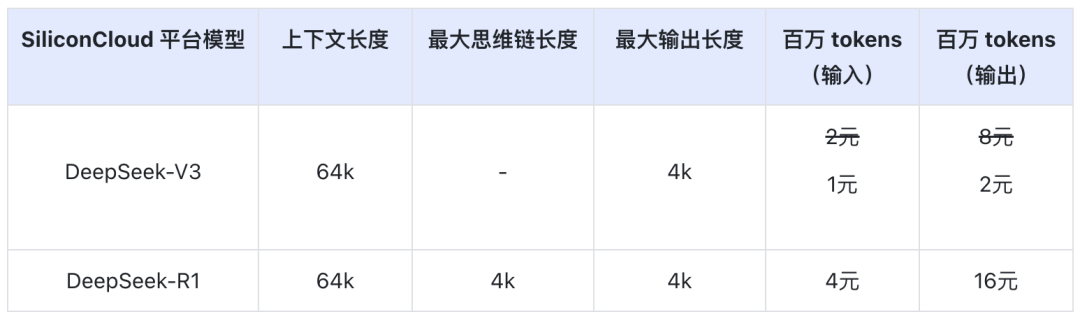

The DeepSeek-V3 price on SiliconCloud during the official discount period (until 24:00 on February 8) is ¥1 / M tokens (input) & ¥2 / M tokens (output), and the DeepSeek-R1 price is ¥4 / M tokens (input) & ¥16 / M tokens (output).
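The per-token prices above reduce to a simple formula: cost = (input tokens × input price + output tokens × output price) / 10^6. The sketch below applies it to the prices listed here; the workload figures are hypothetical, and the DeepSeek-V3 rate reflects only the discount-period price quoted above.

```python
from decimal import Decimal

# CNY per million tokens, as listed above (DeepSeek-V3 at the discount-period rate).
PRICES_CNY_PER_M = {
    "DeepSeek-V3": {"input": Decimal("1"), "output": Decimal("2")},
    "DeepSeek-R1": {"input": Decimal("4"), "output": Decimal("16")},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Return the estimated cost in CNY for a given token workload."""
    price = PRICES_CNY_PER_M[model]
    million = Decimal(1_000_000)
    return (price["input"] * input_tokens + price["output"] * output_tokens) / million


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500K output tokens.
    for name in PRICES_CNY_PER_M:
        print(name, estimate_cost(name, 2_000_000, 500_000))
```

For this hypothetical workload the sketch gives ¥3 for DeepSeek-V3 and ¥16 for DeepSeek-R1. `Decimal` is used instead of `float` so the yuan amounts stay exact.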

Online experience

DeepSeek-R1 with SiliconCloud

DeepSeek-V3 with SiliconCloud

API documentation

On SiliconCloud, developers can try DeepSeek-R1 & V3 accelerated on domestic Chinese chips. We are continuing to optimize for faster output speed.

Try it in client applications

If you want to try DeepSeek-R1 & V3 directly in a client application, install one of the following products locally and connect it to the SiliconCloud API (you can add these two models as custom models).

- Large model client applications: ChatBox, Cherry Studio, OneAPI, LobeChat, NextChat

- Code generation applications: Cursor, Windsurf, Cline

- Large model application development platform: Dify

- AI knowledge bases: Obsidian AI, FastGPT

- Translation plug-ins: Immersive Translate, Eurodict

For integration tutorials covering more scenarios and applications, please refer to here.

Token Factory SiliconCloud

20+ models, including Qwen2.5 (7B), free to use

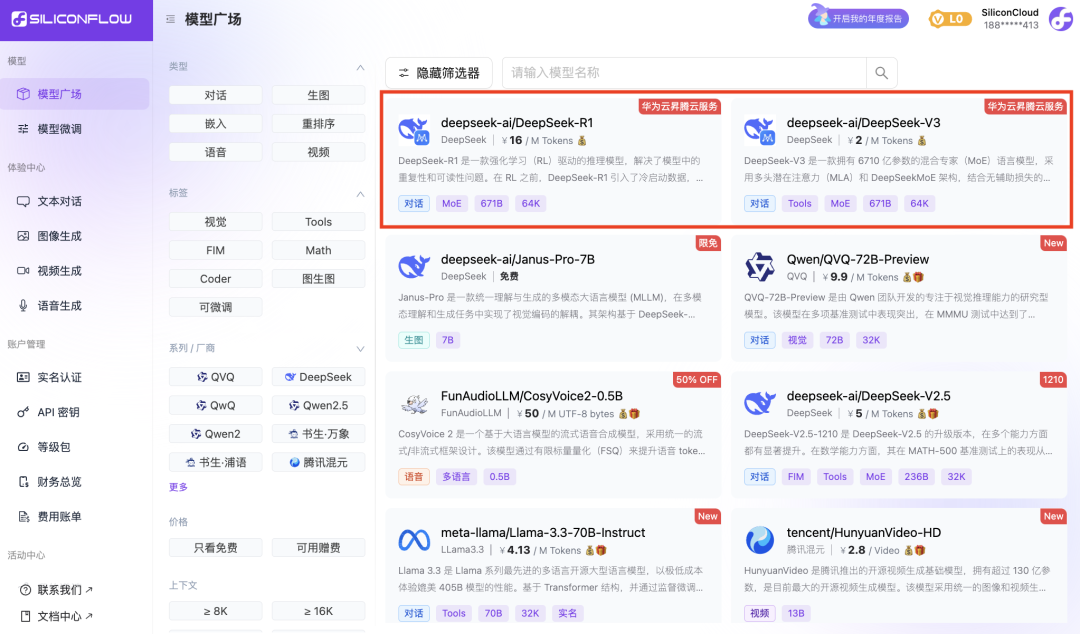

As a one-stop cloud service platform for large models, SiliconCloud is committed to providing developers with model APIs that are fast, affordable, and comprehensive, with a silky-smooth experience.

In addition to DeepSeek-R1 and DeepSeek-V3, SiliconCloud has launched Janus-Pro-7B, CosyVoice2, QVQ-72B-Preview, DeepSeek-VL2, DeepSeek-V2.5-1210, Llama-3.3-70B-Instruct, HunyuanVideo, fish-speech-1.5, Qwen2.5-7B/14B/32B/72B, FLUX.1, InternLM2.5-20B-Chat, BCE, BGE, SenseVoice-Small, GLM-4-9B-Chat, and dozens of other open-source large language models, image/video generation models, speech models, code/math models, and embedding and reranking models.

The platform lets developers freely compare and combine large models across modalities to find the best practice for their generative AI applications.

Among them, 20+ large model APIs, such as Qwen2.5 (7B) and Llama3.1 (8B), are free to use, giving developers and product managers "token freedom" without worrying about computing costs during R&D and large-scale rollout.