o3-mini arrived late at night as OpenAI finally played its latest trump card. During a Reddit AMA, Altman candidly admitted that OpenAI had been on the wrong side of the open-source AI question.

He said OpenAI is internally discussing an open-source strategy and will keep developing better models, but its lead will not be as large as before.

While everyone was still marveling at DeepSeek's remarkable capabilities, OpenAI could no longer sit still.

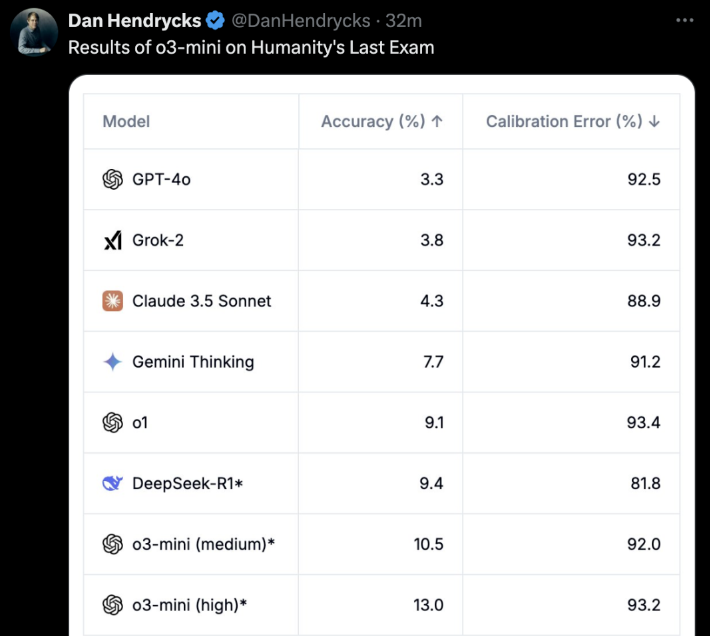

Early yesterday morning, OpenAI rushed out o3-mini, which set new state-of-the-art (SOTA) results on math and coding benchmarks and reclaimed the top spot.

Most importantly, free users can try it too. o3-mini is no joke: on Humanity's Last Exam, o3-mini (high) achieved the best accuracy and the lowest calibration error.

A few hours after o3-mini went live, OpenAI hosted an official Reddit AMA that lasted about an hour.

Altman himself took part and fielded questions from netizens.

The main highlights are:

- DeepSeek is indeed very good, and we will continue to develop better models, but our lead will not be as big as before

- Compared with a few years ago, I am now more inclined to believe that AI progress may take off rapidly

- We have been on the wrong side of the issue of open-sourcing AI model weights

- An update to Advanced Voice Mode is coming soon. We will probably just call it GPT-5, not GPT-5o. There is no specific timeline yet.

In addition to Altman himself, Chief Research Officer Mark Chen, Chief Product Officer Kevin Weil, Vice President of Engineering Srinivas Narayanan, Head of API Research Michelle Pokrass, and Head of Research Hongyu Ren also took part and answered netizens' questions in detail.

Next, let’s take a look at what they all said.

Altman repents: OpenAI was on the wrong side of the open-source AI battle

DeepSeek's sudden rise probably caught everyone off guard.

During the AMA, Altman openly regretted OpenAI's stance in the open-source AI battle and acknowledged DeepSeek's considerable strengths.

What surprised many people was that Altman even said OpenAI's lead would not be as large as it used to be.

Below are Altman's most notable answers, as we compiled them.

Q: Let's talk about the big topic of the week: DeepSeek. It's clearly a very impressive model, and I know it was probably trained on top of the outputs of other LLMs. How will this change your plans for future models?

Altman: It is indeed a very impressive model! We will develop better models, but we won't be able to keep as big a lead as in previous years.

Q: Do you think recursive self-improvement will be a gradual process or a sudden take-off?

Altman: Personally, I'm more inclined than I was a few years ago to think that AI may make rapid progress. Maybe it's time to write something on this topic…

Q: Can we see all the tokens the model generates while thinking?

Altman: Yes, we'll soon be showing a more helpful and detailed version. Credit to R1 for changing our minds.

Kevin Weil, Chief Product Officer: We are working on showing more than we do now, and this will happen soon. Whether we show everything remains to be determined. Showing the full chain of thought (CoT) would make it easier for competitors to distill our models, but we also know that users (at least advanced users) want to see it, so we will find a good balance.

Q: When will the full version of o3 be available?

Altman: I would estimate it will be more than a few weeks, but not more than a few months.

Q: Will there be an update to the voice mode? Is this potentially a focus for GPT-5o? What is the rough timeline for GPT-5o?

Altman: Yes, an update to the advanced voice mode is coming! I think we will just call it GPT-5, not GPT-5o. There is no specific timeline yet.

Q: Would you consider releasing some model weights and publishing some research?

Altman: Yes, we are discussing it. I personally think we’re on the wrong side of this issue and need to come up with a different open source strategy; not everyone at OpenAI shares this view, and it’s not our highest priority at the moment.

Another set of questions:

- How close are we to offering Operator on the regular Plus plan?

- What are the robotics department’s top priorities?

- How does OpenAI feel about more specialized chips/TPUs, like Trillium, Cerebras, etc.? Is OpenAI paying attention to this?

- What investments would be a good hedge against the future risks of AGI and ASI?

- What was your most memorable holiday?

Altman:

- A few months

- Producing a really good robot at small scale and learning from the experience

- The GB200 is currently hard to beat!

- A good choice would be to improve your inner state – resilience, adaptability, calm, joy, etc.

- It’s hard to choose! But the first two that come to mind are: backpacking in Southeast Asia or a safari in Africa

Q: Are you planning to raise the price of the Plus series?

Altman: Actually, I want to gradually reduce it.

Q: Suppose it’s now the year 2030, and you’ve just created a system that most people would call an AGI. It excels in all the benchmark tests and outperforms your best engineers and researchers in terms of speed and performance. What’s next? Apart from “putting it on the website and offering it as a service”, do you have any other plans?

Altman: The most important impact, in my opinion, will be to accelerate scientific discovery, which I think is the factor that will contribute most to improving the quality of life.

4o image generation, coming soon

Next come the answers from other members of the OpenAI team.

Q: Are you still planning to launch the 4o image generator?

Kevin Weil, Chief Product Officer: Yes! And I think the wait is worth it.

Q: Great! Is there a rough timetable?

Kevin Weil, Chief Product Officer: You’re asking me to get into trouble. Maybe a few months.

And another, similar question:

Q: When can we expect to see ChatGPT-5?

Kevin Weil, Chief Product Officer: Shortly after o-17 micro and GPT-(π+1).

Another round of questions:

- What other types of agents can we expect?

- Will you also offer an agent to free users? That could accelerate adoption…

- Any updates on the new version of DALL·E?

- One last question, and it's the one everyone asks… When will AGI arrive?

Kevin Weil, Chief Product Officer:

- More agents: very, very soon. I think you’ll be happy.

- Image generation based on 4o: in a few months, I can’t wait for you to use it. It’s great.

- AGI: Yes

Q: Do you plan to add file attachment support to the reasoning models?

Srinivas Narayanan, VP Engineering: It's in development. In the future, the reasoning models will be able to use different tools, including search.

Kevin Weil, Chief Product Officer: Just to add: I can't wait to see the reasoning models use tools.

Q: Really? Once you solve this problem, some very useful AI applications will open up. Imagine it being able to understand the contents of your 500GB of work documents.

When you’re about to reply to an email, a panel will open next to your email app that continuously analyzes all the information related to this person, including your relationship, the topics discussed, past work, etc. Perhaps something from a document you’ve long forgotten will be flagged because it’s highly relevant to the current discussion. I want this feature so badly.

Srinivas Narayanan, VP Engineering: We're working on increasing the context length. There's no firm date or announcement yet.

Q: How important is the Stargate project for the future of OpenAI?

Kevin Weil, Chief Product Officer: Very important. Everything we've seen suggests that the more compute we have, the better the models we can build and the more valuable the products we can create.

We’re scaling models in two dimensions right now — larger pretraining and more reinforcement learning (RL)/“strawberry” training — both of which require compute resources.

Serving hundreds of millions of users also requires compute! And as we move toward more agentic products that can work for you continuously, that requires compute too. So you can think of Stargate as our factory, where electricity and GPUs are turned into amazing products.

Q: Internally, which model are you using now? o4, o5 or o6? How much smarter are these internal models compared to o3?

Michelle Pokrass, Head of API Research: We’ve lost count.

Q: Please allow us to interact with text/canvas while using advanced voice features. I want to be able to speak to it and have it iteratively modify documents.

Kevin Weil, Chief Product Officer: Yes! We have a lot of great tools that have been developed relatively independently – the goal is to get those tools into your hands as quickly as possible.

The next step is to integrate all these features so that you can talk to a model that searches and reasons at the same time and generates a canvas that can run Python. All the tools need to work better together. And by the way, all models need full tool access (the o-series models currently cannot use every tool), and that will be implemented too.

Q: When will the o-series models support the memory function in ChatGPT?

Michelle Pokrass, Head of API Research: It’s in development! Unifying all our features with the o-series models is our top priority.

Q: Will there be any major improvements to 4o? I really like custom GPTs, and it would be great if they could be upgraded, or if we could choose which model a custom GPT uses (such as o3-mini).

Michelle Pokrass, Head of API Research: Yes, we haven’t finished with the 4o series yet!