The story of Gemini 2.0 is accelerating.

The Flash Thinking Experimental version in December brought developers a working model with low latency and high performance.

Earlier this year, 2.0 Flash Thinking Experimental was updated in the Google AI Studio to further improve performance by combining the speed of Flash with enhanced inference capabilities.

Last week, the updated version 2.0 Flash was fully launched on the Gemini desktop and mobile apps.

Today, three new members have been unveiled at the same time: the experimental version of Gemini 2.0 Pro, which has so far performed best in coding and complex prompts, the cost-effective 2.0 Flash-Lite, and the thinking-enhanced version 2.0 Flash Thinking.

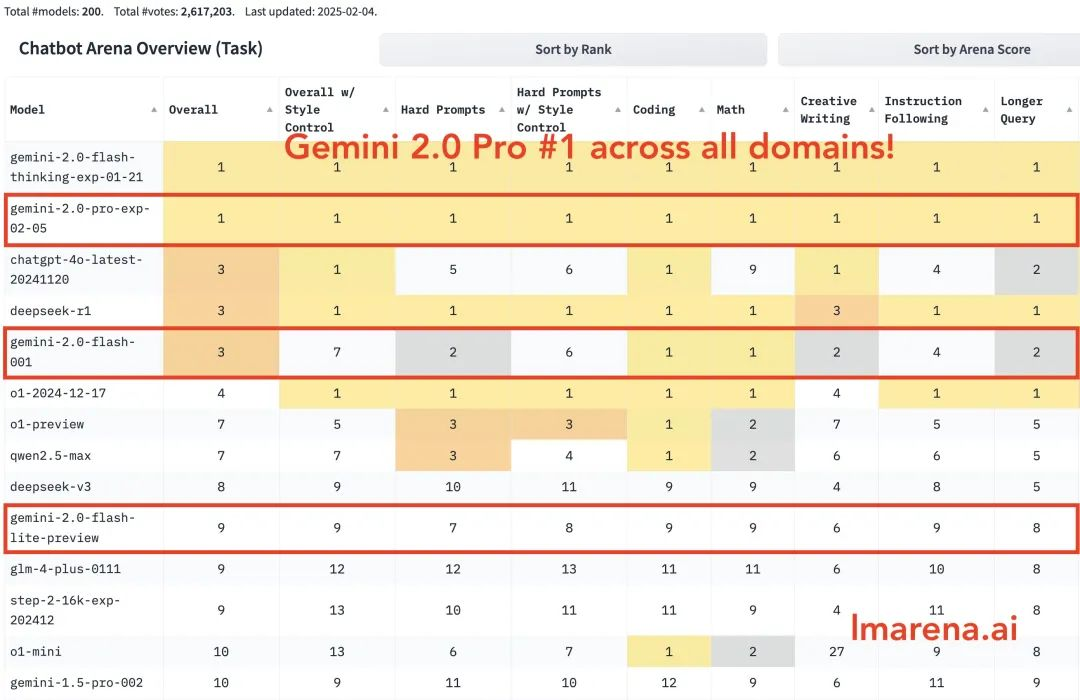

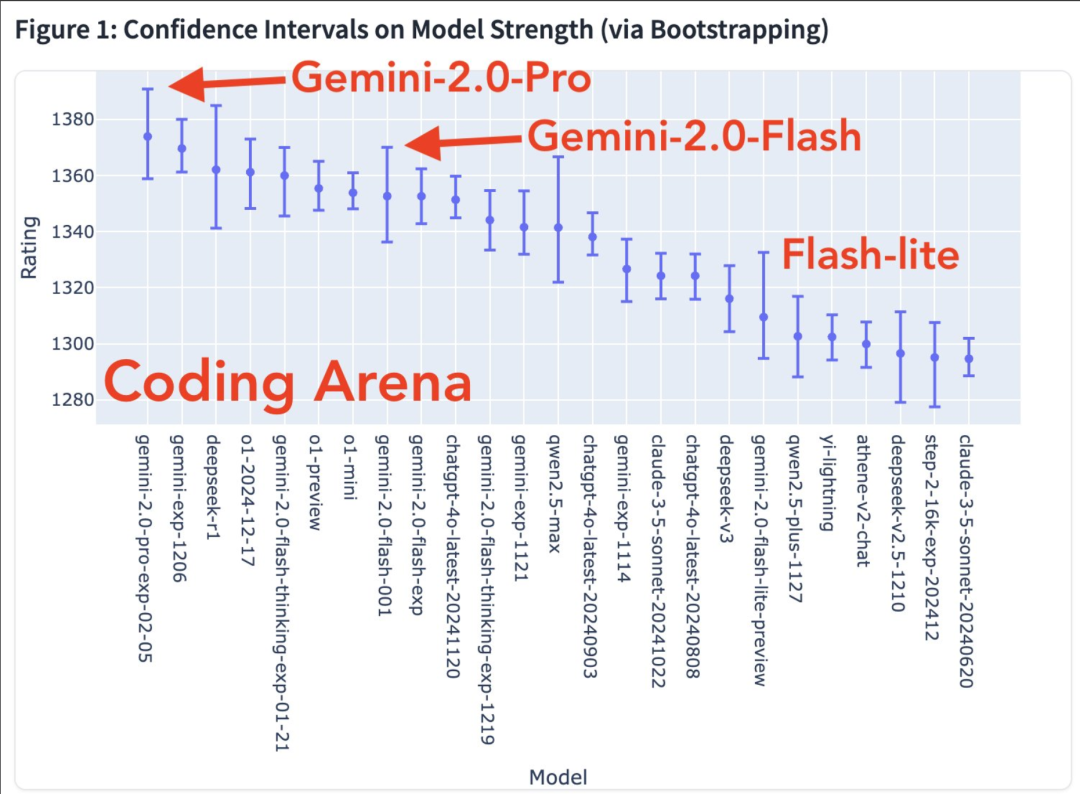

Gemini 2.0 Pro ranks first in all categories. Gemini-2.0-Flash ranks in the top three in coding, math, and puzzles. Flash-lite ranks in the top ten in all categories.

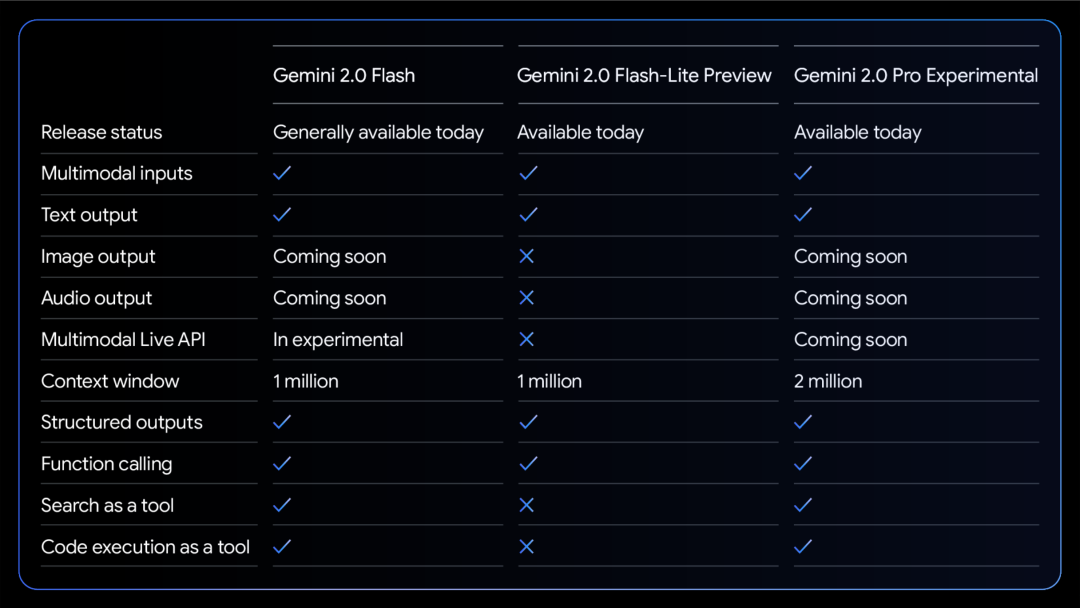

A comparison chart of the three models’ abilities:

All models support multimodal input and output text.

More modal abilities are on the way. Model strength chart in the coding arena

Win rate heat map

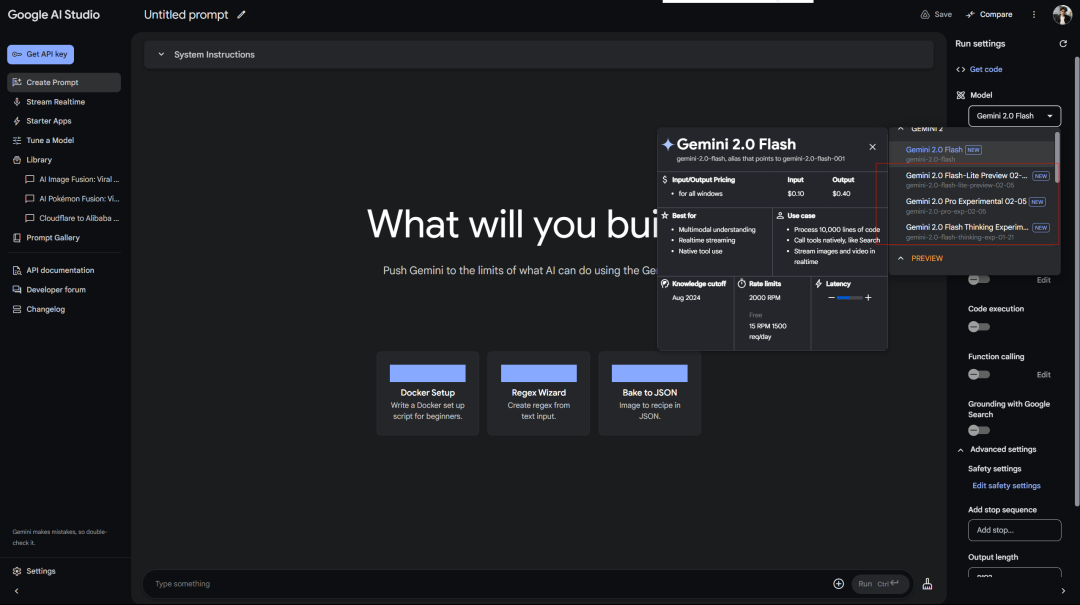

Google treats free users better than OpenAI treats Plus users. Free access to Gemini 2.0 Pro Experimental in AI Studio:

Deepseek service always displays an error waiting… Remember that the first inference-free model was also 2.0 Flash Thinking, which was used in Google aistudio.

In addition, there is the web version of Gemini:

There is also a connected inference model (so why separate it…)

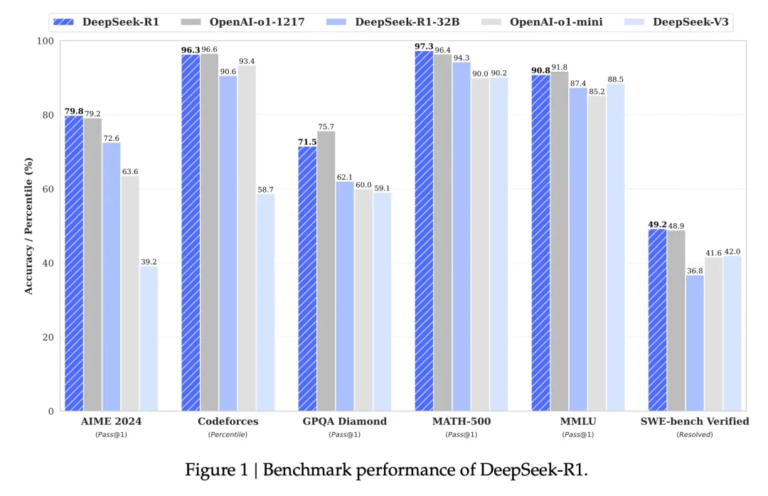

Google released the experimental version of Gemini 2.0 Pro, and the improvement in official benchmark tests is quite eye-catching.

It has the most powerful coding capabilities and the ability to process complex prompts, and has a better ability to understand and reason about world knowledge than any model released by Google so far.

It has the largest context window (200k, and my long context is a relatively big advantage of the Gemini model), which enables it to comprehensively analyze and understand a large amount of information, and to call tools such as Google search and code execution.

In the MATH test, it achieved 91.8%, an increase of about 5 percentage points over version 1.5. GPQA reasoning ability reached 64.7%, and SimpleQA world knowledge test even reached 44.3%.

The most notable is the programming ability. It achieved 36.0% in the LiveCodeBench test, and the Bird-SQL conversion accuracy exceeded 59.3%. Coupled with the super-large context window of 2 million tokens, it is enough to handle the most complex code analysis tasks.

You can try it out in the cursor.

The multi-language understanding ability is also impressive, with a Global MMLU test score of 86.5%. Image understanding MMMU is 72.7%, and video analysis ability is 71.9%.

Gemini 2.0 Flash-Lite is an interesting balance.

It maintains the speed and cost of 1.5 Flash, but brings better performance. The context window with 1 million tokens allows it to process more information.

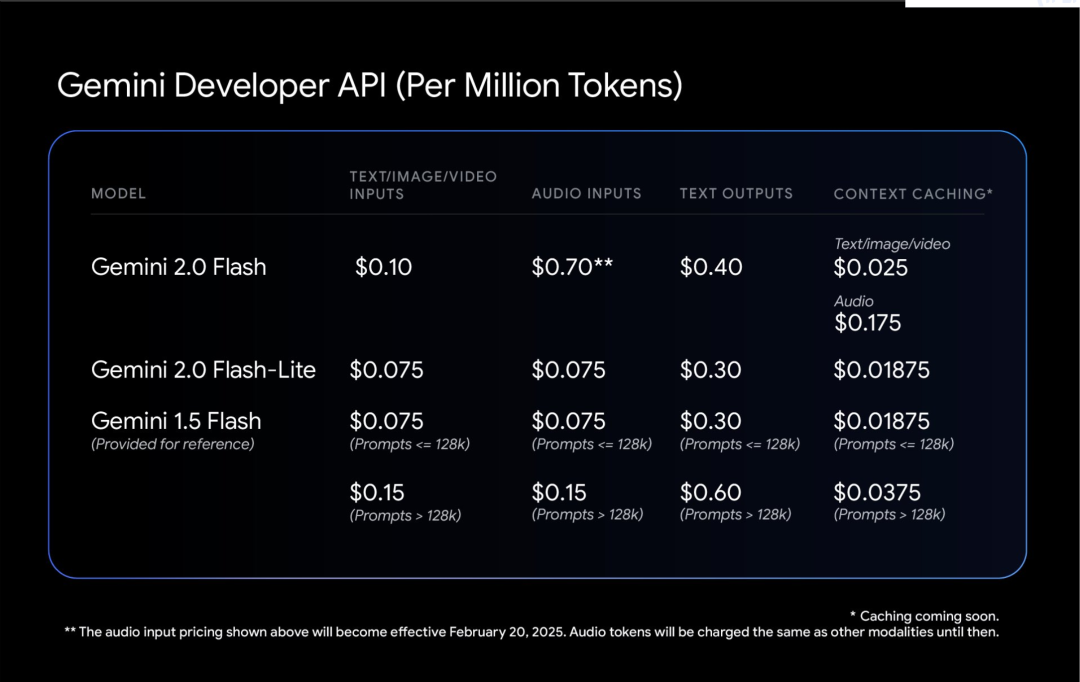

The most practical thing is its price/performance ratio: caption generation for 40,000 photos costs less than $1. This makes AI more down-to-earth.

Blogger Shrivastava mentioned: Gemini 2.0 Pro encoding is crazy!

Tip: use Three.js to create a solar system simulation. Add a time scale, a focus drop-down menu, show orbits and show labels. Create everything in one file so I can paste it into an online editor and view the output.

In addition, some users mentioned that Gemini 2.0 Flash produced better results in one of his own paradox tests:

Finally, Google mentioned that the security of Gemini 2.0, not just the patch, is at the core of the design from the beginning.

Let the model learn to be self-critical. Use reinforcement learning to let Gemini evaluate its own answers and provide more accurate feedback. This makes it more robust when dealing with sensitive topics.

The automated red team testing is interesting. It is specifically designed to prevent the injection of indirect prompt words, which is like equipping the AI with an immune system to prevent someone from hiding malicious commands in the data.