Today we look at DeepSeek-R1. The paper is titled "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning".

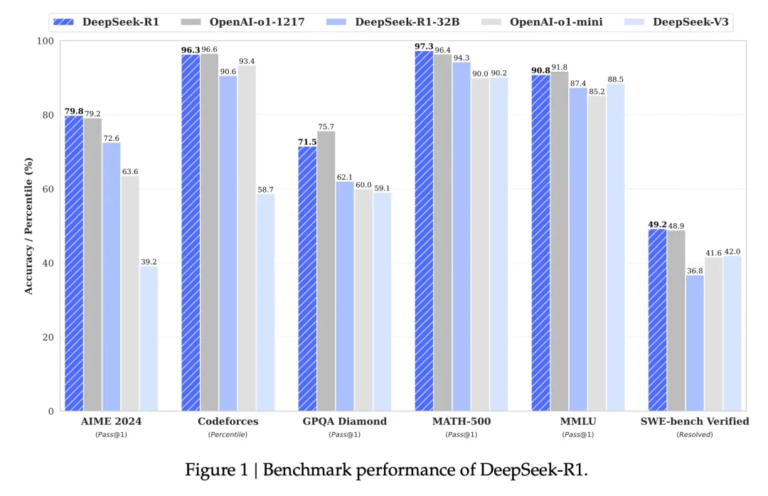

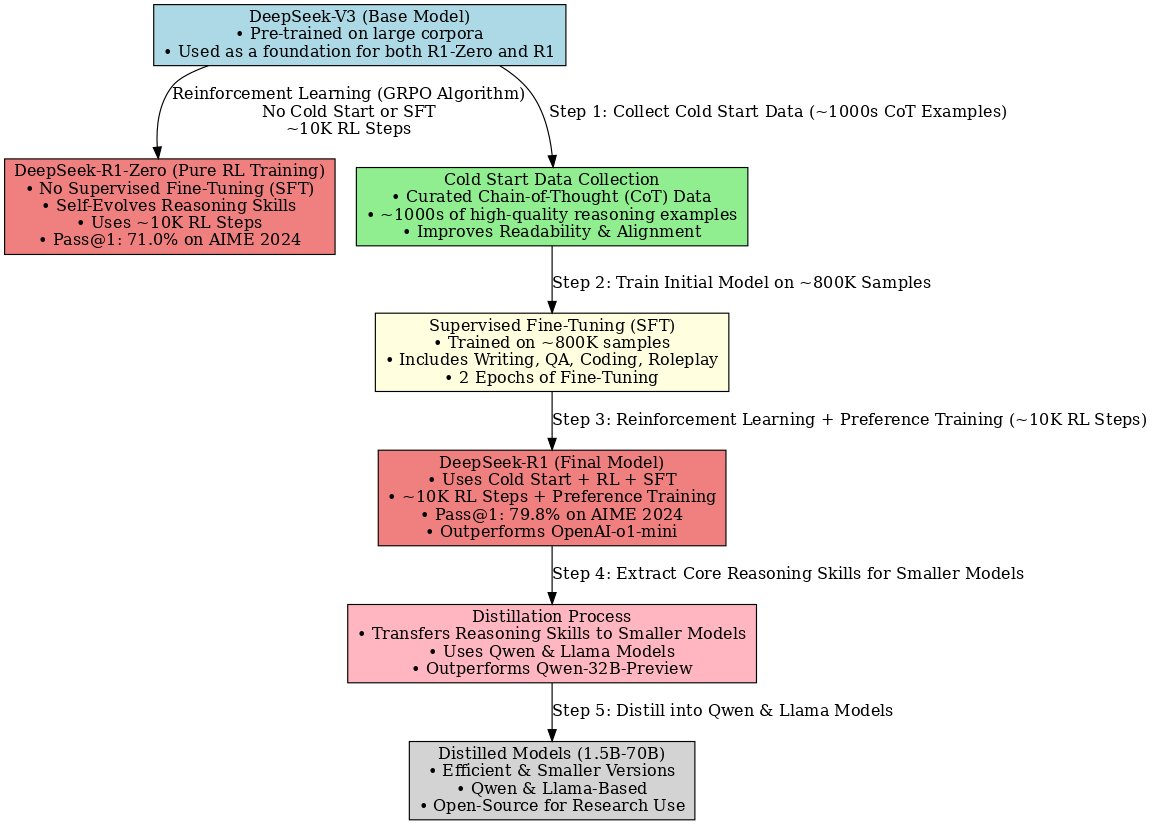

This paper introduces DeepSeek’s first generation of reasoning models, DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero was trained with large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, demonstrating how much reasoning capability RL alone can bring out. During RL training, many powerful and interesting reasoning behaviors emerged naturally in DeepSeek-R1-Zero. To address R1-Zero’s issues, such as language mixing, and to improve generalization, they released DeepSeek-R1, which adds multi-stage training and cold-start data fine-tuning before reinforcement learning. DeepSeek-R1 achieves performance comparable to OpenAI-o1-1217 on reasoning tasks. To support the research community, they have open-sourced DeepSeek-R1-Zero, DeepSeek-R1, and six dense models (1.5B, 7B, 8B, 14B, 32B, 70B) distilled from DeepSeek-R1 and based on Qwen and Llama.

The characteristics of the method are summarized as follows:

- Reinforcement learning is applied directly to the base model, without relying on supervised fine-tuning (SFT) as an initial step.

- The DeepSeek-R1 development process is introduced, which combines two reinforcement learning phases and two supervised fine-tuning phases to lay the foundation for the model’s reasoning and non-reasoning capabilities.

- The performance of small models on reasoning tasks is improved by transferring the reasoning patterns of large models to small models through distillation techniques.

Overview

- Title: DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

- Authors: DeepSeek-AI

- Github: deepseek R1

Motivation

- Current large language models (LLMs) have made significant progress on reasoning tasks, but challenges remain.

- The potential of pure reinforcement learning (RL) in improving the reasoning ability of LLMs has not been fully explored, especially without relying on supervised data.

- Models trained through RL alone, such as DeepSeek-R1-Zero, suffer from poor readability and language mixing (e.g., Chinese and English mixed in one response), and need further work to become user-friendly.

Methods

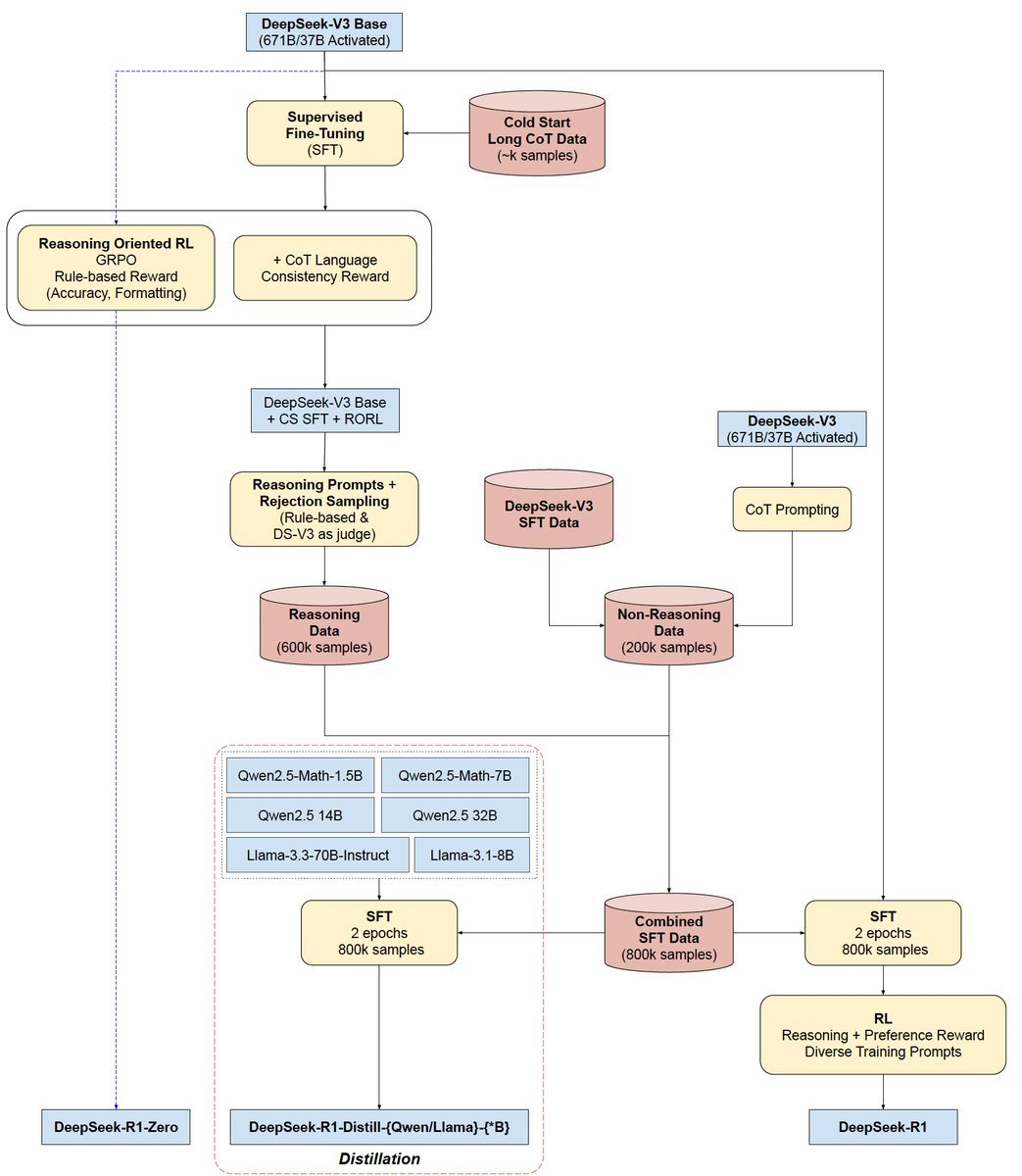

DeepSeek-R1-Zero: Uses DeepSeek-V3-Base as the base model and GRPO (Group Relative Policy Optimization) as the reinforcement learning framework, improving the model’s reasoning performance without any supervised data.

DeepSeek-R1:

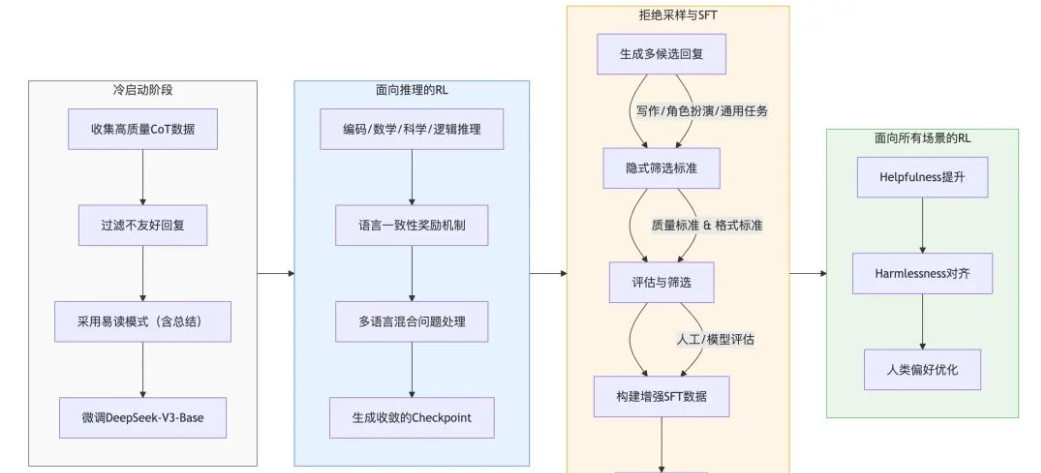

- Cold Start: Collects a small amount of high-quality long CoT (Chain-of-Thought) data and fine-tunes the DeepSeek-V3-Base model as the initial actor for reinforcement learning.

- Reasoning-oriented Reinforcement Learning: The same reinforcement learning training process as DeepSeek-R1-Zero is applied, with a focus on enhancing the model’s reasoning abilities in areas such as coding, mathematics, science, and logical reasoning. A language consistency reward is introduced to mitigate the language mixing that occurs in the CoT.

- Rejection Sampling and Supervised Fine-Tuning: Uses the converged checkpoint of reinforcement learning to collect Supervised Fine-Tuning (SFT) data for subsequent training.

- Reinforcement Learning for all Scenarios: Implements a second reinforcement learning stage, which aims to improve the helpfulness and harmlessness of the model while further refining its reasoning ability.

- Knowledge distillation: Fine-tunes the open-source Qwen and Llama models directly on the 800k samples curated with DeepSeek-R1.

Detailed methods and procedures:

DeepSeek-R1-Zero: Reinforcement learning for base models

- Reinforcement learning algorithm: Uses the Group Relative Policy Optimization (GRPO) algorithm, which does not require a critic model, estimates the baseline by group scores, and reduces training costs.

- Reward modeling: Uses a rule-based reward system, including:

- Accuracy reward: Evaluates whether the answer is correct, for example by checking the final result of a math problem or by using compiler feedback for code problems.

- Format reward: Encourages the model to place its thinking process between <think> and </think> tags.

Training template: A template containing <think> and </think> tags is designed to guide the model to output the thinking process first, and then the final answer.

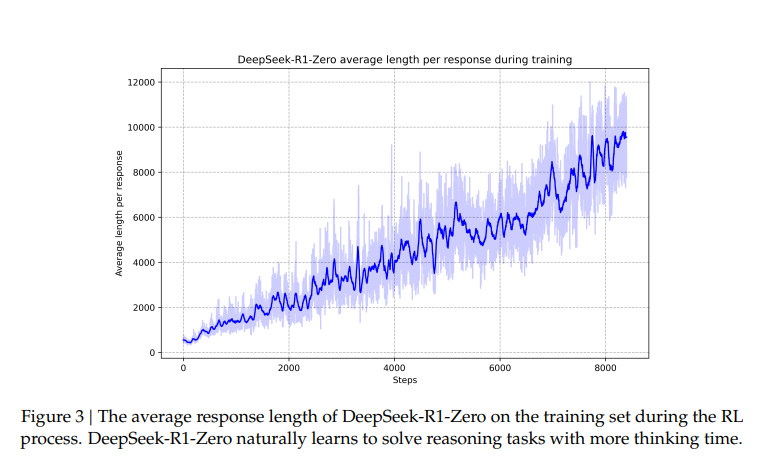
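The two rule-based rewards described above can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and not the paper's implementation: the function names, the exact-match answer check (a stand-in for a real math verifier or compiler feedback), the layout "thinking block first, then the answer", and the equal weighting of the two rewards.

```python
import re
from typing import Optional

# Assumed layout: a non-empty <think>...</think> block, then a non-empty answer.
THINK_PATTERN = re.compile(r"^\s*<think>(.+?)</think>\s*(\S.*)$", re.DOTALL)


def format_reward(response: str) -> float:
    """Return 1.0 if the response follows the assumed layout, else 0.0."""
    return 1.0 if THINK_PATTERN.match(response) else 0.0


def extract_answer(response: str) -> Optional[str]:
    """Return the text after </think>, or None if the layout is wrong."""
    match = THINK_PATTERN.match(response)
    return match.group(2).strip() if match else None


def accuracy_reward(response: str, reference: str) -> float:
    """Exact-match check on the final answer (hypothetical stand-in verifier)."""
    answer = extract_answer(response)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


def total_reward(response: str, reference: str) -> float:
    # Summing the two signals with equal weight is an assumption of this sketch.
    return accuracy_reward(response, reference) + format_reward(response)
```

For example, `total_reward("<think>2+2=4</think> 4", "4")` rewards both the format and the answer, while a well-formatted but wrong response only earns the format reward.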

- Self-evolutionary process: DeepSeek-R1-Zero demonstrated self-evolutionary characteristics during training, and was able to autonomously learn more complex reasoning strategies, such as reflection and exploration of multiple problem-solving paths.
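To make the "baseline from group scores" idea in GRPO more concrete, here is a minimal sketch of the advantage computation only: several responses are sampled for the same prompt, and each reward is normalized against the mean and standard deviation of its own group, so no critic model is needed. The function name, the use of the population standard deviation, and the zero-advantage fallback for a group with identical rewards are assumptions of this sketch; the clipped policy objective and KL term of the full algorithm are not shown.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the mean and std of its own group."""
    if not rewards:
        return []
    baseline = mean(rewards)   # the group mean plays the role of the critic's baseline
    spread = pstdev(rewards)   # population standard deviation of the group
    if spread < eps:
        # All responses scored the same, so none is better than the others.
        return [0.0 for _ in rewards]
    return [(r - baseline) / spread for r in rewards]
```

With rewards `[1.0, 0.0, 0.0, 1.0]` for four sampled responses, the correct responses get a positive advantage and the incorrect ones a negative advantage of the same magnitude.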

DeepSeek-R1: Reinforcement learning combined with cold start

- Cold start: To solve DeepSeek-R1-Zero’s readability problem, DeepSeek-R1 first collects a small amount of high-quality long CoT data and fine-tunes the DeepSeek-V3-Base model to serve as the initial actor for reinforcement learning. Each cold-start response ends with a summary, and responses that are not reader-friendly are filtered out.

- Method: 1) Select high-quality long CoT data. 2) Add tags that separate the reasoning process from the summary.

- Advantages: 1) Better readability (addresses R1-Zero’s language mixing and its lack of markdown formatting). 2) Carefully selected, human-preferred data can further improve performance over R1-Zero.

- Question: Why solve the readability problem? Could the model do better without solving it (e.g., by producing shorter outputs and reasoning more efficiently)?

- Reasoning-oriented RL: Starting from the cold-start model, a reinforcement learning process similar to that of DeepSeek-R1-Zero is applied, focusing on improving the model’s ability in tasks such as coding, mathematics, science, and logical reasoning. To address language mixing in the reasoning, a language consistency reward is introduced.

- Question: How are the scientific and logical reasoning tasks trained, and on which datasets?
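The language consistency reward mentioned above is described in the paper as the proportion of target-language words in the CoT. A minimal sketch of that idea follows. The word-counting heuristic (runs of ASCII letters count as English words, each CJK ideograph counts as one Chinese word), the function name, and the two supported language codes are assumptions made for illustration only.

```python
import re

# Crude, assumed tokenization for this sketch only.
ENGLISH_WORD = re.compile(r"[A-Za-z]+")
CHINESE_CHAR = re.compile(r"[\u4e00-\u9fff]")


def language_consistency_reward(cot: str, target: str = "en") -> float:
    """Fraction of counted words in the chain of thought that are in the target language."""
    if target not in ("en", "zh"):
        raise ValueError("this sketch only supports 'en' and 'zh'")
    english = len(ENGLISH_WORD.findall(cot))
    chinese = len(CHINESE_CHAR.findall(cot))
    total = english + chinese
    if total == 0:
        return 0.0  # nothing to score, e.g. an empty chain of thought
    in_target = english if target == "en" else chinese
    return in_target / total
```

A chain of thought written entirely in the target language scores 1.0, and the score drops as words from the other language are mixed in.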

- Rejection Sampling and SFT: After the reasoning-oriented reinforcement learning converges, the resulting checkpoint is used for rejection sampling to generate new SFT data, which is combined with data from DeepSeek-V3 to strengthen the model’s capabilities in writing, role-playing, and general tasks.

- Purpose:

- This phase starts after the reasoning-oriented reinforcement learning (RL) process converges.

- The main objective is to collect supervised fine-tuning (SFT) data for use in subsequent training rounds.

- Unlike the initial cold-start data, which focuses only on reasoning, this phase aims to expand the model’s capabilities to cover writing, role-playing, and other general-purpose tasks.

- Data collection – reasoning data:

- Method: Use the checkpoint obtained from the reasoning-oriented RL phase to generate reasoning trajectories by rejection sampling.

- Data set expansion: The previous RL phase only used data that could be scored with rule-based rewards; this phase adds data that cannot. For some of it, a generative reward model is used: the ground truth and the model’s prediction are fed to DeepSeek-V3 for judgment.

- Data filtering: To ensure quality and readability, the output is filtered to remove:

- chains of thought containing mixed languages

- long paragraphs

- code blocks

- Sampling and selection: For each prompt, multiple responses were generated, and only the correct ones were retained for the dataset.

- Dataset size: Approximately 600,000 reasoning-related training samples were collected in this way.
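The sampling-and-filtering procedure above can be sketched as a small loop: sample several responses per prompt, then keep only those that are judged correct and pass the readability filters. The function `rejection_sample` and its three callables (`generate`, `is_correct`, `is_readable`) are hypothetical placeholders, not names from the paper; in practice they would wrap the RL checkpoint, a rule-based or generative judge, and the filters for mixed languages, long paragraphs, and code blocks.

```python
from typing import Callable, List


def rejection_sample(
    prompt: str,
    generate: Callable[[str], str],
    is_correct: Callable[[str, str], bool],
    is_readable: Callable[[str], bool],
    num_samples: int = 4,
) -> List[str]:
    """Sample several responses for one prompt and keep those that pass both checks."""
    kept = []
    for _ in range(num_samples):
        response = generate(prompt)
        if is_correct(prompt, response) and is_readable(response):
            kept.append(response)
    return kept


# Toy usage with stand-in functions instead of a real model and judge.
candidates = iter([
    "<think>1+1=2</think> 2",      # correct and readable: kept
    "<think>1+1=3</think> 3",      # wrong answer: rejected
    "<think>一加一 is 2</think> 2",  # mixed languages: rejected
])
kept = rejection_sample(
    "What is 1+1?",
    generate=lambda prompt: next(candidates),
    is_correct=lambda prompt, response: response.endswith(" 2"),
    is_readable=lambda response: response.isascii(),
    num_samples=3,
)
```

Passing the judge and the filters in as callables keeps the loop independent of how correctness is decided, which matters here because the source describes both rule-based and model-based judgments.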

- Data collection – non-reasoning data:

- Coverage: Writing, factual question answering (QA), self-cognition, and translation.

- The paper follows the DeepSeek-V3 pipeline and reuses part of the DeepSeek-V3 SFT dataset for these non-reasoning tasks. About 200,000 non-reasoning samples were collected. (Note: The details of the collection of non-reasoning data are further described in Section 2.3.4)

- Use of collected data:

- The collected reasoning and non-reasoning data (a total of about 800,000 samples – 600,000 reasoning samples + 200,000 non-reasoning samples) was then used to fine-tune the DeepSeek-V3-Base model for two epochs. This fine-tuned model was then used in the final RL phase described in Section 2.3.4.

- Summary: This step uses the reasoning capabilities learned through RL to generate a diverse, high-quality SFT dataset. The dataset strengthens the model’s reasoning and also broadens its general capabilities ahead of the final alignment and improvement phase.

- Reinforcement Learning for all Scenarios: To further align the model with human preferences, a second reinforcement learning stage is implemented to improve the model’s helpfulness and harmlessness.

- Reasoning data: For math, code, and logical reasoning, training is still guided by rule-based rewards.

- General data: Reward models are used to capture human preferences in complex and nuanced scenarios, following the DeepSeek-V3 pipeline with a similar distribution of preference pairs and training prompts.

- Helpfulness: The reward focuses only on the final summary, to reduce interference with the reasoning process.

- Harmlessness: The entire response, including the reasoning process and the summary, is evaluated to reduce any risks.

Model distillation:

- To obtain more efficient small reasoning models, the paper distills the reasoning ability of DeepSeek-R1 into open-source models of the Qwen and Llama series. The distillation process uses only supervised fine-tuning (SFT) and does not include a reinforcement learning stage.

Conclusion

DeepSeek-R1-Zero: Demonstrates the potential of pure reinforcement learning for incentivizing LLM reasoning ability, achieving strong performance without relying on supervised data.

- Aha moment: This shows the beauty of reinforcement learning. At an intermediate point in training, the model learns to allocate more thinking time to a problem by re-evaluating its initial approach.

- The output length continues to increase (thinking time continues to increase)

- The accuracy continues to improve (sampling 16 responses to calculate the accuracy)
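The accuracy figure based on 16 sampled responses can be read as an average over samples: for each question, several responses are drawn, the fraction of correct ones is computed, and these per-question scores are averaged over the benchmark. The sketch below shows that arithmetic; the function names are hypothetical, and treating the benchmark score as an unweighted mean over questions is an assumption.

```python
from typing import List


def pass_at_1(correct_flags: List[bool]) -> float:
    """Average correctness over the k responses sampled for one question."""
    if not correct_flags:
        raise ValueError("need at least one sampled response")
    return sum(correct_flags) / len(correct_flags)


def benchmark_accuracy(per_question_flags: List[List[bool]]) -> float:
    """Unweighted mean of the per-question scores."""
    if not per_question_flags:
        raise ValueError("need at least one question")
    scores = [pass_at_1(flags) for flags in per_question_flags]
    return sum(scores) / len(scores)
```

Averaging over many samples per question makes the curve less noisy than scoring a single sampled response, which is useful when tracking accuracy across training steps.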

- DeepSeek-R1: Further improves model performance by combining cold-start data with iterative reinforcement learning fine-tuning, reaching a level comparable to OpenAI-o1-1217 on various tasks.

- Knowledge distillation: Using DeepSeek-R1 as the teacher model, 800K training samples were generated and several small dense models were fine-tuned. The results show that this distillation method can significantly improve the reasoning ability of small models.

Limitation

- Limitation 1: The general ability of DeepSeek-R1 needs to be improved. DeepSeek-R1 is still inferior to DeepSeek-V3 in tasks such as function calling, multi-turn dialogue, complex role-playing, and JSON output.

- Limitation 2: Language mixing problem. DeepSeek-R1 may mix languages when handling queries that are in neither Chinese nor English, for example by reasoning and responding in English even though the query is in another language.

- Limitation 3: Prompt sensitivity. DeepSeek-R1 is sensitive to how it is prompted, and few-shot prompting degrades its performance.

- Limitation 4: Limited application to software engineering tasks. Due to the long evaluation time, large-scale reinforcement learning has not been fully applied to software engineering tasks, and DeepSeek-R1 has limited improvement over DeepSeek-V3 in software engineering benchmarks.