Highlights

- The magic of LLMs is that they are very flexible, can adapt to many different situations, and have basic intelligence.

- We believe that over time, the UI and UX will become more and more natural language-based, because this is the way an Agent system thinks, or this is basically the basis of training for large language models (LLMs).

- If you want someone to accept an AI Agent, they are actually taking a degree of “leap of faith” because for many people, this is a very unfamiliar field.

AI Agent reshapes the customer experience

Jesse Zhang: How is an Agent actually constructed? Our view is that over time, it will become more and more like a natural language-based Agent because that is how the large language models (LLMs) are trained.

In the long term, if you have a super intelligent agent that is actually like a human, you can show it things, explain to it, give it feedback, and it will update the information in its mind.

You can imagine having a very capable human team member. When they first join, you teach them something, they start working, and then you give them feedback and show them new information.

Eventually, it will develop in this direction – it will become more conversational and more based on natural language, and the way people communicate with each other will become more natural. And people will no longer use those complicated decision trees to capture requirements, which can work but are prone to collapse.

In the past, we had to do this because we didn’t have a large language model. But now, with the continuous progress of Agent, the user experience (UX) and user interface (UI) will become more conversational.

Derrick Harris: Hello everyone, welcome to the a16z AI Podcast. I’m Derrick Harris, and today I’m joined by Jesse Zhang, co-founder and CEO of Decagon, and Kimberly Tan, a partner at a16z. Kimberly will moderate the discussion, and Jesse will share his experience building Decagon and its products.

If you don’t know much about it, Decagon is a startup that provides AI agents to businesses to assist with customer support. These agents are neither chatbots nor LLM wrappers for a single API call, but highly customized advanced agents that can handle complex workflows based on a company’s specific needs.

In addition to explaining why they created Decagon and how it is architected to handle different LLM and customer environments, Jesse also talks about the benefits of a business model that charges per conversation, and how AI Agents will change the skills required of customer support leaders.

It is also worth mentioning that Kimberly recently wrote a blog post titled “RIP to RPA, The Rise of Intelligent Automation,” which we briefly discuss in this episode.

It’s a great starting point for understanding how automation is taking off in business processes, and we’ll provide a link in the show notes. And finally, as a reminder, the content of this article is for informational purposes only and should not be considered legal, business, tax or investment advice, nor should it be used to evaluate any investment or security, and is not directed at any a16z fund investor or potential investor.

Jesse Zhang: A brief introduction to myself. I was born and raised in Boulder, and I participated in a lot of math competitions and the like as a child. I studied computer science at Harvard, and then started a company that was also backed by a16z. We were eventually acquired by Niantic.

Then we started building Decagon. Our business is building AI Agents for customer service. At the beginning, we did this because we wanted to do something that was very close to our hearts.

Of course, no one needs to be taught about the role of AI Agents in customer service, right? We’ve all been on the phone with airlines, hotels, etc., and waited on hold. So the idea came from there.

We talked to a lot of customers to find out exactly what kind of product we should build. One thing that stood out for us was that as we learned more about AI Agents, we started to think about what the future would be like when there were lots of them. I think everyone believes that there will be lots of AI Agents in the future.

What we think about is what will the employees who work around AI agents do? What kind of tools will they have? How will they control or view the agents they work with or manage?

So this question is at the core of how we built the company. I think this is also what sets us apart right now, because alongside these AI agents we provide various tools that help the people we work with build and configure them, so that they are no longer a “black box.” This is how we build our brand.

Derrick Harris: What inspired you, since your last company was a consumer-facing video company, to move into enterprise software?

Jesse Zhang: Great question. I think founders are often “topic agnostic” when it comes to choosing a topic, because in reality, when you approach a new field, you’re usually pretty naive. So there’s an advantage to looking at things from a fresh perspective. So when we were thinking about it, there were almost no topic restrictions.

I think that’s a very common pattern for people with more quantitative backgrounds, myself included. After trying consumer products, you tend to gravitate more towards enterprise software because enterprise software has more concrete problems.

You have actual customers with actual needs and budgets and things like that, and you can optimize and solve problems for those. The consumer market is also very attractive, but it’s more based on intuition than driven by experimentation. For me personally, enterprise software is a better fit.

Kimberly Tan: First, we can start with this question: What are the most common support categories that Decagon deals with today? Can you elaborate on how you use large language models (LLMs) to solve these problems and what you can now do that you couldn’t do before?

Jesse Zhang: If you look back at previous automation, you might have used decision trees to do something simple, to determine which path to take. But we’ve all used chatbots, and it’s a pretty frustrating experience.

Often your question cannot be fully answered by a decision tree. So you end up being directed down a question path that is related to the question but doesn’t exactly match it. Now, we have large language models (LLMs). The magic of LLMs is that they are very flexible, can adapt to many different situations, and have basic intelligence.

When you apply this to customer support and a customer asks a question, you can provide a more personalized service. That is the first point: the level of personalization has greatly improved. This unlocks better metrics. You can resolve more problems, and customer satisfaction increases.

The next natural step is: if you have this intelligence, you should be able to do more of the things that humans can do. Humans can pull data in real time, take action, and reason through multiple steps. Suppose a customer asks a relatively complex question, maybe “I want to do this and that,” and the AI is only prepared to handle the first part. An LLM is smart enough to recognize that there are two questions here. It will solve the first problem, and then help you solve the second.

Before LLMs came along, this was basically impossible. So we are now seeing a step change in what technology is capable of doing, and that is thanks to LLMs.

Kimberly Tan: In this context, how would you define an AI Agent? As the word “Agent” is widely used, I am curious as to what it actually means in the context of Decagon.

Jesse Zhang: I would say that an Agent refers more to a system where multiple LLM (large language model) systems work together. A single LLM invocation basically involves sending a prompt and getting a response. For an Agent, you want to be able to connect multiple such invocations, perhaps even recursively.

For example, you have an LLM call that determines how to handle the message, and then it may trigger other calls that pull in more data, perform actions, and iterate on what the user said, perhaps even asking follow-up questions. So for us, an Agent can be understood almost as a network of LLM calls, API calls, and other logic that work together to provide a better experience.
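To make the "network of calls" description concrete, here is a minimal Python sketch. It is only an illustration, not Decagon's architecture: `fake_llm`, `lookup_order`, and `run_agent` are hypothetical names, and the "LLM" is a toy function so the example runs without any model provider.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a real LLM call: takes a prompt, returns text.
LLMCall = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Toy model used only so the sketch runs without a provider."""
    if prompt.startswith("ROUTE:"):
        return "lookup_order" if "order" in prompt.lower() else "answer"
    return f"(drafted reply based on: {prompt})"


def lookup_order(message: str) -> str:
    """Hypothetical API call; a real system would query an order service."""
    return "order 123 shipped yesterday"


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_order": lookup_order}


def run_agent(message: str, llm: LLMCall = fake_llm) -> List[str]:
    """Chain several calls: route, optionally fetch data, then draft a reply."""
    trace: List[str] = []
    decision = llm(f"ROUTE: {message}")  # first LLM call decides what to do
    trace.append(f"route={decision}")
    context = ""
    if decision in TOOLS:  # plain API call, no LLM involved
        context = TOOLS[decision](message)
        trace.append(f"tool={context}")
    reply = llm(f"REPLY to '{message}' using '{context}'")  # second LLM call
    trace.append(reply)
    return trace


for line in run_agent("Where is my order?"):
    print(line)
```

The returned trace lists each call in order, which is also the kind of record a supervisor would need in order to see how the Agent reached its reply.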

Kimberly Tan: On this topic, perhaps we can talk more about the Agent infrastructure you have actually built. I think one very interesting point is that there are many demonstrations of AI Agents on the market, but I think there are very few examples of them that can actually run stably in a production environment. And it is difficult to know from the outside what is real and what is not.

So in your opinion, what aspects of today’s AI Agents are doing well, and what aspects still require technological breakthroughs to make them more robust and reliable?

Jesse Zhang: My view is actually a little different. Whether an AI Agent is just a demo or is “really working” does not depend entirely on the technology stack, because I think most people are using roughly the same technology. Once you are further along in building your company, for example, ours has been around for more than a year, you will have created something very specific that fits your use case.

But in the final analysis, everyone can access the same models and use similar technology. I think the biggest differentiator of whether an AI agent can work effectively actually lies in the nature of the use case. It’s hard to know this at the beginning, but looking back, you will find that there are two attributes that are very important for an AI agent to go beyond demonstration and enter practical application.

The first is that the use case you solve must have quantifiable ROI (return on investment). This is very important, because if the ROI cannot be quantified, it will be difficult to convince people to actually use your product and pay for it. In our case, the quantitative indicator is: what percentage of support requests do you resolve? Because this number is clear, people can understand it: “Oh, okay, if you resolve more, I can compare this result with my current expenses and time spent.” Beyond that indicator, another one that is very important to us is customer satisfaction. Because the ROI can be easily quantified, people will really adopt it.

The second factor is that the use case must be incremental, meaning the Agent can deliver value without being perfect. It would be very difficult if you needed an Agent to be superhuman from the start, solving almost 100% of the cases. Because LLMs are non-deterministic, you have to have some sort of contingency plan. Fortunately, support use cases have a great feature: you can always escalate to a human. Even if you can only solve half of the problems, it is still very valuable to people.

So I think that support has this characteristic that makes it very suitable for AI Agent. I think there are many other areas where people can create impressive demos where you don’t even have to look closely to understand why AI Agent would be useful. But if it has to be perfect from the start, then it’s very difficult. If that’s the case, almost no one will want to try or use it because the consequences of its imperfection can be very serious – for example, in terms of security.

For example, when people do simulations, they always have this classic thought: “Oh, it would be great if LLM could read this.” But it’s hard to imagine someone saying, “Okay, AI Agent, go for it. I believe you can do it.” Because if it makes a mistake, the consequences could be very serious.
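The two attributes described above, a quantifiable resolution rate and the ability to escalate to a human, can be sketched in a few lines of Python. This is an assumption-laden illustration: the `confidence` score, the `0.7` threshold, and the sample conversations are all hypothetical and are not taken from Decagon's product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Conversation:
    question: str
    confidence: float  # hypothetical score in [0, 1] attached by the agent


def handle(conv: Conversation, threshold: float = 0.7) -> str:
    """Answer when confident; otherwise hand off to a human."""
    if conv.confidence >= threshold:
        return "resolved_by_agent"
    return "escalated_to_human"


def resolution_rate(outcomes: List[str]) -> float:
    """Share of conversations the agent resolved without a human."""
    if not outcomes:
        return 0.0
    return outcomes.count("resolved_by_agent") / len(outcomes)


conversations = [
    Conversation("reset password", 0.95),
    Conversation("refund status", 0.8),
    Conversation("legal dispute", 0.2),
    Conversation("billing error", 0.5),
]
outcomes = [handle(c) for c in conversations]
rate = resolution_rate(outcomes)
print(f"resolution rate: {rate:.0%}")
```

In this toy data the agent resolves two of four conversations and escalates the rest, which matches the point that resolving even half of the requests can be valuable as long as the remainder reach a human.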

Jesse Zhang: This is usually decided by our customers, and in fact we see a very wide range of differences. At one extreme, some people really make their Agent look like a human, so there is a human avatar, a human name, and the responses are very natural. On the other hand, the Agent simply states that it is AI and makes this clear to the user. I think the different companies we work with have different positions on this.

Usually, if you are in a regulated industry, you have to make this clear. What I find interesting now is that customer behavior is changing. Because many of our customers are getting a lot of feedback on social media, like, “Oh my God, this is the first chat experience I’ve ever tried that actually feels so real,” or “This is just magic.” And that’s great for them, because now their customers are learning, hey, if it’s an AI experience, it can actually be better than a human. That wasn’t the case in the past, because most of us have had that kind of phone customer service experience in the past: “Okay, AI, AI, AI…”

Kimberly Tan: You mentioned the concept of personalization a few times. Everyone is using the same underlying technology architecture, but they have different personalization needs in terms of support services. Can you talk about this? Specifically, how do you achieve personalization so that people can say online, “My God, this is the best support experience I’ve ever had”?

Jesse Zhang: For us, personalization comes from customization for the user. You need to understand the user’s background information, which is the additional context required. Secondly, you also need to understand the business logic of our customers. If you combine the two, you can provide a pretty good experience.

Obviously, this sounds simple, but in reality it is very difficult to obtain all the required context. Therefore, most of our work is on how to build the right primitive components so that when a customer deploys our system, they can easily decide, “Okay, this is the business logic we want.” For example, first you need to do these four steps, and if step three fails, you need to go to step five.

You want to be able to teach the AI this very easily, but also give it access to information like, “This is the user’s account details. If you need more information, you can call these APIs.” These layers are a coordination layer on top of the model, and in a way, they make the Agent really usable.
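The example of "do these four steps, and if step three fails, go to step five" can be written as a small data-driven workflow. The sketch below is hypothetical: the step names, the refund scenario, and the `WORKFLOW` table format are assumptions chosen for illustration, not Decagon's actual primitives.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Each step receives shared context and returns True on success.
Step = Callable[[dict], bool]


def verify_identity(ctx: dict) -> bool:
    return ctx.get("user_id") is not None


def fetch_order(ctx: dict) -> bool:
    # Hypothetical lookup; a real system would call the customer's API here.
    ctx["order"] = {"id": 42, "refundable": ctx.get("refundable", True)}
    return True


def issue_refund(ctx: dict) -> bool:
    return ctx["order"]["refundable"]


def confirm(ctx: dict) -> bool:
    ctx["result"] = "refund issued"
    return True


def escalate(ctx: dict) -> bool:
    ctx["result"] = "sent to human"
    return True


# step name -> (function, next step on success, next step on failure)
WORKFLOW: Dict[str, Tuple[Step, Optional[str], Optional[str]]] = {
    "step1": (verify_identity, "step2", "step5"),
    "step2": (fetch_order, "step3", "step5"),
    "step3": (issue_refund, "step4", "step5"),
    "step4": (confirm, None, "step5"),
    "step5": (escalate, None, None),
}


def run_workflow(ctx: dict, start: str = "step1") -> List[str]:
    """Follow the table until a step has no successor; return the path taken."""
    path: List[str] = []
    current: Optional[str] = start
    while current is not None:
        step, on_ok, on_fail = WORKFLOW[current]
        path.append(current)
        current = on_ok if step(ctx) else on_fail
    return path


print(run_workflow({"user_id": "u1"}))
print(run_workflow({"user_id": "u1", "refundable": False}))
```

Keeping the branching in a table rather than in code is one possible way to let non-engineers edit the logic; in a natural-language system the same table could be derived from written instructions.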

Kimberly Tan: It sounds like in this case, you need a lot of access to the business systems. You need to know a lot about the users, and you probably need to know how the customer actually wants to interact with their users. I imagine that this data can be very sensitive.

Can you elaborate on the assurances that enterprise customers typically need when deploying AI Agent? And how do you consider the best way to handle these issues, especially considering that your solution provides a better experience, but it is also new to many people who are encountering the Agent for the first time?

Jesse Zhang: This is actually about guardrails. Over time, as we have done many implementations like this, we have become clear about the types of guardrails that customers care about.

For example, one of the simplest is that there may be rules that you have to always follow. If you are working with a financial services company, you cannot give financial advice because that is regulated. So you need to build that into the Agent system to ensure that it never gives that kind of advice. You can usually set up a supervision model or some kind of system that does these checks before the results are sent out.

Another kind of protection covers the case where someone comes in and deliberately messes with the Agent, knowing that it’s a generative system, and tries to get it to do something non-compliant, like “tell me what my balance is,” “ok, multiply that by 10,” and so on. You also need to be able to check for that behavior. So over the past year, we’ve found a lot of these kinds of protections, and for each one, we’ve categorized it and know what type of protection is needed. As the system gets built out more and more, it becomes more and more robust.
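The idea of checking a drafted reply before it is sent can be sketched as follows. Note the substitution: the speaker mentions a supervision model, whereas this example uses simple regular-expression rules as a stand-in so that it runs on its own. The patterns and the fallback text are hypothetical.

```python
import re
from typing import Tuple

# Hypothetical rules a financial-services deployment might block.
BLOCKED_PATTERNS = [
    re.compile(r"\byou should (buy|sell|invest)\b", re.IGNORECASE),
    re.compile(r"\bguaranteed returns?\b", re.IGNORECASE),
]
FALLBACK = "I can't give financial advice, but I can connect you with a specialist."


def check_reply(draft: str) -> Tuple[bool, str]:
    """Return (allowed, text_to_send). Runs after the model drafts a reply."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(draft):
            return False, FALLBACK
    return True, draft


print(check_reply("You should buy this stock now."))
print(check_reply("Your balance is $40."))
```

A production system would likely combine rules like these with a second model acting as reviewer, since pattern matching alone misses paraphrases.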

Kimberly Tan: How unique are the protections for each customer or industry? As you expand your customer base to cover more use cases, how do you think about building these protections at scale?

Jesse Zhang: This actually goes back to our core idea that the Agent system will become ubiquitous over the course of a few years. So what’s really important is to provide people with the tools, almost to empower the next generation of workers, like Agent supervisors, to give them the tools to build the Agent system and add their own protections, because we’re not going to define the protections for them.

Each customer knows their own protection measures and business logic best. So our job is actually to do a good job of building the tools and infrastructure so that they can build the Agent system. Therefore, we have always emphasized that the Agent system should not be a black box, and you should be able to control how to build these protections, rules, and logic.

I think that’s probably our most differentiating aspect so far. We’ve put a lot of effort into these tools and come up with creative ways to allow people who may not have a super technical background, or even a deep understanding of how AI models work, to still input the actions they want AI to perform into the Agent system.

I think that’s going to become an increasingly important capability in the next few years. That should be one of the most important criteria when people are evaluating similar tools, because you want to be able to continuously optimize and improve these systems over time.

Business logic driven by natural language

Derrick Harris: What preparations can customers or businesses make to prepare for any type of automation, and in particular the use of this Agent system? For example, how can they design their data systems, software architecture or business logic to support such systems?

Because I feel that a lot of AI technology is novel at first, but when it comes to existing legacy systems, it often encounters a lot of chaos.

Jesse Zhang: If someone is building from scratch now, there are a lot of best practices that can make your job easier. For example, how to structure your knowledge base. We have written about some of these, and introduced some methods that can make it easier for AI to ingest information and improve its accuracy. One specific suggestion is to divide the knowledge base into modular parts, rather than having one big article with multiple answers.

When setting up the API, you can make them more suitable for the Agent system, and set permissions and output in a way that makes it easy for the Agent system to ingest information without having to do a lot of calculations to find the answer. These are some tactical measures that can be taken, but I would not say there is anything that must be done in order to use the Agent system.
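As an illustration of dividing a knowledge base into modular parts, the sketch below splits one long article into one entry per question. The `Q:` line convention and the sample article are assumptions made for the example; real help-center content would need its own parsing rules.

```python
from typing import Dict, List, Optional


def split_article(article: str) -> List[Dict[str, str]]:
    """Split one long help article into one entry per question.

    Assumes a hypothetical plain-text layout in which each section starts
    with a line beginning 'Q:' followed by one or more answer lines.
    """
    entries: List[Dict[str, str]] = []
    current: Optional[Dict[str, str]] = None
    for raw_line in article.splitlines():
        line = raw_line.strip()
        if line.startswith("Q:"):
            current = {"question": line[2:].strip(), "answer": ""}
            entries.append(current)
        elif current is not None and line:
            # Join multi-line answers into a single string.
            current["answer"] = (current["answer"] + " " + line).strip()
    return entries


article = """Q: How do I reset my password?
Go to Settings and choose Reset.
Q: How do I cancel?
Open Billing.
Then select Cancel plan."""

entries = split_article(article)
for entry in entries:
    print(entry)
```

Each resulting entry answers exactly one question, so a retrieval step can hand the Agent only the relevant piece instead of a long page with several answers mixed together.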

Derrick Harris: Good documentation is always important, essentially it is about organizing information effectively.

Kimberly Tan: It sounds like if you try to teach people how to direct the Agent system to operate in a way that best suits their customers or specific use cases, then a lot of experimentation with the UI and UX design may be required, or you have to blaze new trails in this completely new field, because it is very different from traditional software.

I’m curious, how do you think about this? What should the UI and UX look like in an Agent-first world? How do you think it will change in the next few years?

Jesse Zhang: I wouldn’t say we’ve solved this problem. I think we may have found a local optimum that works for our current customers, but it’s still an ongoing area of research, for us and many others.

The core issue goes back to what we mentioned earlier, which is that you have an Agent system. First, how can you clearly see what it is doing and how it is making decisions? Then, how can you use this information to decide what needs to be updated and what feedback should be given to the AI? These are where the UI elements come together, especially the second part.

We think that over time, the UI and UX will become more and more natural language-based, because that is how the Agent system thinks, or that is basically the basis for training large language models (LLMs).

In the extreme, if you have a super-intelligent agent that basically thinks like a human, you can show it things, explain things to it, give it feedback, and it will update in its own “mind”. You can imagine having a very capable person join your team, you teach him something, he starts working, and then you keep giving him feedback, you can show him new things, new documents, diagrams, etc.

I think in the extreme case, it will develop in this direction: things become more conversational and more natural language-based, and people stop building systems with complex decision trees like they used to. Decision trees can capture what you want, but that approach breaks down easily. We used to have to do this because there were no LLMs back then, but now that Agent systems are getting more and more powerful, UI and UX will become more conversational.

Kimberly Tan: About a year and a half ago, when Decagon first started, there was a general perception that LLMs were applicable to many use cases, but that products built on them were just some kind of “GPT wrapper,” and that companies could simply call an underlying model through an API and instantly solve their support problems.

But obviously, as companies choose to use solutions like Decagon instead of going down that route directly, it turns out that this is not the case. I was wondering if you could explain why this is the case. What exactly made the challenges of building internally more complex than expected? What misconceptions did they have about the concept?

Jesse Zhang: There is nothing wrong with being a “GPT wrapper.” You could say that Vercel is an AWS wrapper or something like that. Usually, when people use this term, they mean it in a derogatory way.

My personal view is that if you’re building an agent system, by definition you’re definitely going to be using LLMs as a tool. So you’re actually building on top of something that already exists, just as you would normally build on AWS or GCP.

But the real problem you can run into is if the software you build on top of the LLM isn’t “heavy” or complex enough to make a difference.

Looking back, for us, what we sell is basically software. We are actually like a regular software company, except that we use LLM as part of the software and as one of the tools. But when people buy this kind of product, they mainly want the software itself. They want tools that can monitor the AI, that can dig deep into the details of every conversation the AI has, that can give feedback, that can constantly build and adjust the system.

So that’s the core of our software. Even with the Agent system itself, the problem people have is that it’s cool to do a demo, but if you want to make it production-ready and really customer-facing, you have to solve a lot of long-standing problems, such as preventing hallucinations and dealing with bad actors who try to cause havoc. We also have to make sure that the latency is low enough, the tone is appropriate, and so on.

We talked to a lot of teams, and they did some experiments, built a preliminary version, and then they would realize, “Oh, really, we don’t want to be the ones who keep building these details in later stages.” They also didn’t want to be the ones who keep adding new logic to the customer service team. So at this point, it seems more appropriate to choose to collaborate with others.

Kimberly Tan: You mentioned some long-term issues, such as the need to deal with bad actors. I believe many listeners considering using AI Agents are worried about new security attack paths that may arise after the introduction of LLMs, or the new security risks that may arise after the introduction of the Agent system. What do you think about these issues? And what are the best practices for ensuring top-notch enterprise security when dealing with Agents?

Jesse Zhang: In terms of security, there are some obvious measures that can be taken, which I mentioned earlier, such as the need for protective measures. The core issue is that people’s main concern about LLMs is that they are not deterministic.

But the good news is that you can actually put most of the sensitive and complex operations behind a deterministic wall, so that the computation happens there when the Agent calls the API. You don’t rely entirely on the LLM to handle it, and that avoids a lot of the core problems.

But there are still situations where, for example, a bad actor interferes or someone tries to make the system hallucinate. We have observed that with many of the major customers we work with, their security teams will come in and basically perform a “red team” test on our products, spending weeks continuously launching various possible attacks on the system to try to find vulnerabilities. As AI Agents become more and more popular, we may see this happen more and more often, because this is one of the best ways to test whether a system is effective: you throw attacks at it in a red team test and see whether anything breaks through the defenses.

There are also startups that are developing red team tools or enabling people to do these kinds of tests themselves, which is a trend we’re seeing right now. A lot of the companies we work with, at a later stage in the sales cycle, will have their security team, or work with an external team, stress test the system. For us, being able to pass those kinds of tests is a must. So, ultimately, that’s what it comes down to.
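The "deterministic wall" mentioned in this answer can be illustrated with a short sketch: the model may only propose an action, while ordinary code decides whether to run it and computes the result. Everything here is hypothetical, including the `BALANCES` store, the action format, and the `execute` function.

```python
from typing import Dict

# Hypothetical account store in cents; a real system would call an internal API.
BALANCES: Dict[str, int] = {"alice": 12000, "bob": 3550}

# Only actions on this allowlist can ever run, whatever the model proposes.
ALLOWED_ACTIONS = {"get_balance"}


def get_balance(authenticated_user: str) -> str:
    """Deterministic computation: the model never produces this number."""
    cents = BALANCES[authenticated_user]
    return f"${cents // 100}.{cents % 100:02d}"


def execute(action: dict, authenticated_user: str) -> str:
    """Run a model-proposed action behind deterministic checks.

    `action` is whatever the LLM proposed. It is never trusted to supply
    the account owner or the numeric result.
    """
    if action.get("name") not in ALLOWED_ACTIONS:
        return "refused: unknown action"
    if action.get("user") != authenticated_user:
        return "refused: account mismatch"
    return get_balance(authenticated_user)


print(execute({"name": "get_balance", "user": "alice"}, "alice"))
print(execute({"name": "multiply_balance", "user": "alice"}, "alice"))
print(execute({"name": "get_balance", "user": "bob"}, "alice"))
```

With this split, a prompt such as "multiply my balance by 10" can at worst produce a refused action, because no code path exists that would carry it out. Red team testing would then focus on whether the checks themselves can be bypassed.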

Derrick Harris: Is this something you encourage your customers to do? Because when we talk about AI policies, we mention an important aspect, which is the application layer, and we emphasize putting the responsibility on the users of LLM and the people running the application, rather than simply blaming the model itself. That is to say, customers should conduct red team testing, identify specific use cases and attack paths, and determine which vulnerabilities need to be protected, rather than simply relying on the security protection already set up by OpenAI or other companies.

Jesse Zhang: I agree completely. I also think that there may be a new wave of certification requirements emerging, similar to the SOC 2 certification and HIPAA certification that everyone is doing now, which are required in different industries. Usually, when you sell a generic SaaS product, customers will require penetration testing, and we must also provide our penetration testing report. For AI Agents, there may be similar requirements in the future, and someone may give it a name, but this is basically a new way to test whether the Agent system is robust enough.

Kimberly Tan: One thing that is interesting is that obviously everyone is very excited about the new model breakthroughs and technological breakthroughs that are being introduced by all the big labs. As an AI company, you obviously don’t do your own research, but you leverage that research and build a lot of software around it to deliver to the end customer.

But your work is based on rapidly changing technology. I’m curious, as an applied AI company, how do you keep up with new technological changes and understand how they affect the company while being able to predict your own product roadmap and build user needs? More broadly, what strategies should applied AI companies adopt in similar situations?

Jesse Zhang: You can actually divide the whole stack into different parts. For example, the LLM is at the bottom if you look at the application layer. You might have some tools in the middle that help you manage LLMs or do some evaluation and things like that. Then, the top part is basically what we built, which is actually like a standard SaaS product.

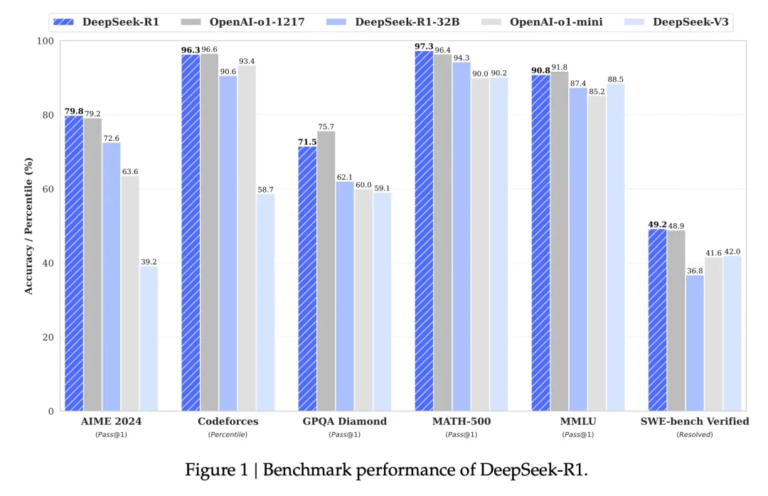

So, most of our work is actually not that different from regular software, except that we have an extra research component, because LLMs change very fast. We need to research what they can do, what they are good at, and which model should be used to perform a certain task. This is a big issue because both OpenAI and Anthropic are launching new technologies, and Gemini is also gradually improving.

Therefore, you have to have your own evaluation mechanism to understand which model is suitable for use in which situation. Sometimes you also need to fine-tune, but the question is: when to fine-tune? When is fine-tuning worthwhile? These are probably the main research issues related to LLMs that we are focusing on. But at least so far, we don’t feel that SaaS is changing quickly, because we are not dependent on the middle layer. So basically, it’s the LLMs that are changing. They don’t change very often, and when they do, it’s usually an upgrade. For example, Claude 3.5 Sonnet was updated a few months ago, and at that time we thought, “Okay, should we switch to the new model instead of continuing to use the old one?”

We just need to run a series of evaluations, and once we have switched to the new model, we don’t think about it anymore because we are already using the new model. Then, the o1 version came out, and the situation was similar. You think about where it can be used. In our case, o1 is a bit slow for most customer-facing use cases, so we can use it for some background work. Ultimately, we just need to have a good system for model research.

Kimberly Tan: How often do you evaluate a new model and decide whether to replace it?

Jesse Zhang: We evaluate every time a new model comes out. You have to make sure that even though the new model is smarter, it doesn’t break some of the use cases you’ve already built. This can happen. For example, the new model may be smarter overall, but in some extreme cases, it performs poorly on an A/B choice in one of your workflows. That’s what we evaluate for.

I think overall, the type of intelligence we care about most is what I would call “instruction following ability.” We want the model to become better and better at following instructions. If that’s the case, then it’s definitely beneficial for us, and that’s very good.

It seems that recent research has focused more on the type of intelligence that involves reasoning, such as better programming and better mathematical operations. This also helps us, but it’s not as important as the improvement of instruction following ability.

Kimberly Tan: One very interesting point you mentioned, and I think it’s also very unique to Decagon, is that you have built a lot of evaluation infrastructure in-house to make sure you know exactly how each model is performing under the set of tests you provide.

Can you elaborate on this? How important is this in-house evaluation infrastructure, and specifically how does it give you and your customers confidence in Agent’s performance? Because some of these evaluations are also customer-facing.

Jesse Zhang: I think it’s very important, because without this evaluation infrastructure, it would be very difficult for us to iterate quickly.

If you feel that every change has a high probability of breaking something, then you won’t make changes quickly. But if you have an evaluation mechanism, then when there is a major change, a model update, or something new comes along, you can directly compare it with all the evaluation tests. If the evaluation results are good, you can feel: okay, we made an improvement, or you can release it with confidence without worrying too much.

So, in our field, evaluation requires input from the customer, because the customer is the one who decides whether something is correct or not. Of course, we can check some high-level issues, but usually the customer provides specific use cases and tells us what the correct answer is, or what it must be, what tone it must maintain, what it must say.

The evaluation is based on this. So we have to make sure that our evaluation system is robust enough. At the beginning, we built it ourselves, and it is not that difficult to maintain. We also know that there are some evaluation companies, and we have explored some of them. Maybe at some point, we will consider whether to adopt them, but for now, the evaluation system is no longer a pain point for us.
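The workflow described here, running customer-provided test cases against a candidate model and checking that nothing already working breaks, can be sketched in Python. The two "models" below are hypothetical lookup functions standing in for real LLM calls, and the `must_contain` check is an assumed, deliberately simple pass criterion.

```python
from typing import Callable, Dict, List

Model = Callable[[str], str]

# Hypothetical customer-provided cases: an input plus a phrase the reply must contain.
CASES = [
    {"input": "refund policy", "must_contain": "30 days"},
    {"input": "cancel plan", "must_contain": "Billing"},
]


def old_model(question: str) -> str:
    """Stand-in for the model currently in production."""
    answers = {
        "refund policy": "Refunds within 30 days.",
        "cancel plan": "Open Billing to cancel.",
    }
    return answers.get(question, "")


def new_model(question: str) -> str:
    """Stand-in for a newer model that is better on one case, worse on another."""
    answers = {
        "refund policy": "Refunds are available within 30 days of purchase.",
        "cancel plan": "Go to Settings.",
    }
    return answers.get(question, "")


def evaluate(model: Model, cases: List[dict]) -> Dict[str, bool]:
    """Map each case input to whether the model's reply passes."""
    return {c["input"]: c["must_contain"] in model(c["input"]) for c in cases}


def regressions(old: Model, new: Model, cases: List[dict]) -> List[str]:
    """Cases that passed with the old model but fail with the new one."""
    before, after = evaluate(old, cases), evaluate(new, cases)
    return [name for name in before if before[name] and not after[name]]


broken = regressions(old_model, new_model, CASES)
print("regressions:", broken)
```

Here the newer stand-in gives a fuller refund answer but regresses on the cancellation case, which is the situation a model upgrade needs to catch before release.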

Kimberly Tan: A very popular topic today is multimodality, meaning that AI agents should be able to interact across all the forms that humans use today, whether it be text, video, voice, etc. I know that Decagon started out as text-based. From your perspective, how important is multimodality to AI agents? What do you think is the timeframe for it to become mainstream or even a standard?

Jesse Zhang: It is important, and from a company perspective, it is not particularly difficult to add a new modality. It is not simple, but the core is: if you solve other problems, such as those I mentioned – for example, building the AI, monitoring it and having the right logic – then adding a new modality is not the most difficult thing to do. So for us, having all the modalities makes a lot of sense, and it expands our market. We are basically modality agnostic, and we build our own Agent for each modality.

Generally speaking, there are two limiting factors. The first is whether the customer is ready to adopt the new modality. I think it makes a lot of sense to start with text, because that is the modality customers adopt most readily, and it is less risky for them, easier to monitor, and easier to understand. The other big modality is voice. I think there is still room in the market, and user acceptance of voice still needs to improve. At the moment, we are seeing some early adopters who have started to adopt voice Agents, which is very exciting. The second factor is the technical challenge. Most people would agree that the bar is higher for voice. If you are talking to someone on the phone, you need very low latency. If you interrupt the Agent, it needs to respond naturally.

Because the tolerance for latency is lower with voice, you have to be smarter about how you do the computation. If you are in a chat and the response time is five to eight seconds, you hardly notice it and it feels very natural. But if it takes five to eight seconds to respond on the phone, it feels unnatural. So there are more technical challenges with voice. As these challenges are solved and market interest in adopting voice increases, voice will become a mainstream modality.

A business model that leaps over trust

Kimberly Tan: Before we continue, I would like to talk a little more about the AI Agent business model. When you first built AI Agents, or talked with customers about the systems they use, the data they process, and their concerns, was there anything that surprised you? What are some of the non-intuitive or surprising things that Decagon had to do in order to better serve enterprise customers?

Jesse Zhang: I think the most surprising thing was the extent to which people were willing to talk to us when we first started. After all, there were only two of us. We had both started companies before, so we knew a lot of people, but even so, for every entrepreneur, when you want to get a referral conversation going, if what you’re saying isn’t particularly compelling, the conversation is usually pretty lukewarm.

But when we started talking about this use case, I actually found it quite surprising how excited people were to talk about it. Because the idea seems so obvious. You might think that since it’s such an obvious idea, someone else must have already done it, or there must already be a solution. But I think we caught a good moment: the use case is really big and people really care about it. As I mentioned before, this use case is really well suited for taking an AI Agent and pushing it into production, because you can implement it incrementally and track the ROI.

That was a pleasant surprise for me. Obviously there is a lot of work to be done after that: you have to work with customers, you have to build the product, you have to figure out which way to go. But in the initial phase, it was really a surprising discovery.

Derrick Harris: Kimberly, I feel like I should mention that blog post you wrote, RIP to RPA, which touches on a lot of the automation tasks and startups. Do you think there is a phenomenon in which these automated tasks, or solutions, are not so ideal, so people are always looking for a better way?

Kimberly Tan: Yes, I do think so. I would like to say a few things. First, if an idea is obvious to everyone, but there is no clear company to solve it, or no one is pointing to a company and saying, “You should use this,” then it means that the problem has not actually been solved.

In a sense, it is a completely open opportunity for a company to develop a solution. As you said, we have been following Decagon as an investor from the beginning. We watched them navigate the idea maze, and when they decided to go in this direction and started talking to customers, it became clear that customers were desperate for some kind of AI-native solution. This relates to the problem I mentioned earlier, where many people think it is just a GPT wrapper. But the customer interest Decagon has received from the beginning made us realize early on that many of these problems are much more complicated than people expect.

I think this phenomenon is happening across industries, whether it’s customer service or professional automation in certain verticals. I think one of the underrated points is, as Jesse mentioned earlier, being able to clearly measure the return on investment (ROI) of automating tasks. Because, if you’re going to get someone to accept an AI agent, they’re actually taking a degree of “leap of faith” because it’s a very unfamiliar territory for a lot of people.

If you can automate a very specific process that is either an obvious revenue-generating process, or a process that previously constituted a bottleneck in the business, or a major cost center that increases linearly with customer growth or revenue growth, then it will be easier to get acceptance for the AI Agent. The ability to turn such problems into a more productized process that can be scaled like traditional software is very attractive.

Kimberly Tan: I have one last question before we move on. Jesse, I remember that in our previous discussions, the assumption was always that the biggest challenge for companies adopting software or AI Agents would be hallucinations. But you once told me that this is actually not the main problem. Can you elaborate on why the perception of hallucinations is somewhat misleading and what people are actually more concerned about?

Jesse Zhang: I think people do care about hallucinations, but they are more concerned about the value the Agent can provide. Almost all the companies we work with focus on the same few questions, almost exactly the same: what percentage of conversations can you resolve? How satisfied are my customers? The hallucination issue then falls into a third category, namely how accurate the Agent is. Generally speaking, the first two factors matter more in an evaluation.

Let’s say you’re talking to a new business and you’ve done a really good job on the first two factors, and you’ve got a lot of support from the leadership and everyone on the team. They’re like, “Oh my God, our customer experience is different. Every customer now has their own personal assistant whom they can contact at any time. We’ve given them great answers, they’re very satisfied, and it’s multilingual and available 24/7.” That’s just part of it, and you’ve also saved a lot of money.

So once you achieve those goals, you get a lot of support and a lot of tailwinds to drive the work. Of course, the hallucination issue ultimately needs to be resolved, but it’s not the thing they’re most concerned about. The way to resolve it is the same way I mentioned before: people will test you. There may be a proof-of-concept phase where you actually run real conversations and they have team members monitoring and checking for accuracy. If that goes well, then the deal usually goes through.

Also, as I mentioned before, you can set up strict guardrails for sensitive information; for example, you don’t necessarily need to make sensitive content generative. So the hallucination issue is a point of discussion in most deals. It is not an unimportant topic, and you will go through this process, but it is never the focus of the conversation.

Kimberly Tan: Now let’s move on to the business model of AI Agent. Today, there is a big topic about how to price these AI Agents.

Historically, many SaaS products have been priced by the number of seats because they are workflow software that targets individual employees and is used to improve employee productivity. However, an AI Agent is not tied to the productivity of individual employees the way traditional software is.

So many people think that the pricing method based on the number of seats may no longer be applicable. I am curious about how you thought about this dilemma in the early days and how you finally decided to price Decagon. Also, what do you think will be the future trend of software pricing as AI Agent becomes more and more common?

Jesse Zhang: Our view on this issue is that in the past, software was priced per seat because its value scaled roughly with the number of people who could use the software. But for most AI Agents, the value you provide does not depend on the number of people maintaining it, but rather on the amount of work produced. This is consistent with the point I mentioned earlier: if the return on investment (ROI) is very measurable, then the level of work output is also very clear.

Our view is that pricing by the number of seats definitely does not apply. You should price based on the work output instead. So the pricing model you offer should be that the more work done, the more you pay.

For us, there are two obvious ways to price. You can either price per conversation, or you can price per conversation that the AI actually resolves. One of the interesting lessons we learned is that most people chose the per-conversation model. The main advantage of pricing by resolution is that you pay only for what the AI actually does.

But the question that follows is, what counts as a “resolution”? First of all, no one wants to get into this in depth, because it becomes, “If someone comes in angry and you push them away, why should we pay for that?”

This creates an awkward situation and also makes the incentives for AI providers a bit strange, because billing by resolution means, “We just need to resolve as many conversations as possible, even if that means pushing some people away.” But there are many cases where it is better to escalate the issue rather than push it away, and customers don’t like that kind of handling. Therefore, billing by conversation brings more simplicity and predictability.
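The predictability argument can be made concrete with a little arithmetic. The sketch below compares the two billing models for one month; all volumes and prices are hypothetical numbers chosen for illustration, not Decagon's actual pricing. Amounts are kept in integer cents so the arithmetic is exact.

```python
def per_conversation_bill(conversations: int, cents_per_conversation: int) -> int:
    """Bill depends only on how many conversations the agent handled."""
    return conversations * cents_per_conversation


def per_resolution_bill(resolved: int, cents_per_resolution: int) -> int:
    """Bill depends on how many conversations are counted as resolved."""
    return resolved * cents_per_resolution


# Hypothetical month: 10,000 conversations handled by the agent.
CONVERSATIONS = 10_000

# The same month under two definitions of "resolved" (both assumed):
# a loose one that counts every closed conversation, including customers
# who were pushed away, and a strict one that excludes them.
RESOLVED_LOOSE = 7_000
RESOLVED_STRICT = 6_000

conversation_bill = per_conversation_bill(CONVERSATIONS, 50)  # 50 cents each
loose_bill = per_resolution_bill(RESOLVED_LOOSE, 80)          # 80 cents each
strict_bill = per_resolution_bill(RESOLVED_STRICT, 80)

if __name__ == "__main__":
    print(f"per conversation:        ${conversation_bill / 100:,.2f}")
    print(f"per resolution (loose):  ${loose_bill / 100:,.2f}")
    print(f"per resolution (strict): ${strict_bill / 100:,.2f}")
    print(f"amount in dispute:       ${(loose_bill - strict_bill) / 100:,.2f}")
```

With these assumed numbers, the per-conversation bill is fixed by volume alone, while the per-resolution bill moves with whichever definition of "resolved" the two sides agree on, which is the source of the awkwardness described above.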

Kimberly Tan: How long do you think the future pricing model will last? Because right now when you mention ROI, it’s usually based on past spend that might have been used to cover labor costs. As AI Agents become more common, do you think that in the long term, AI will be compared to labor costs and that this is an appropriate benchmark? If not, how do you see long-term pricing beyond labor costs?

Jesse Zhang: I think that in the long term, AI Agent pricing may still be primarily linked to labor costs, because that’s the beauty of the Agent – your previous spend on services can now be shifted to software.

This part of the expenditure could be 10 to 100 times that of software expenditure, so a lot of the cost will shift to software. Therefore, labor costs will naturally become a benchmark. For our customers, the ROI is very clear. If you can save X million in labor costs, then it makes sense to adopt this solution. But in the long run, pricing may settle somewhere in the middle.

That is because even products that are not as good as our Agent will compete by accepting lower prices. This is like the classic SaaS situation, where everyone is competing for market share.

Kimberly Tan: What do you think the future holds for current SaaS companies, especially those whose products may not have been built for AI natively or which are priced per seat and therefore cannot adapt to an outcome-oriented pricing model?

Jesse Zhang: For some traditional companies, it is indeed a bit tricky to launch an AI Agent product because they cannot price it using a seat model. If customers no longer need as many human agents, it is difficult to maintain revenue with the existing product. This is a problem for traditional companies, but it’s hard to say how it will play out. Traditional companies always have the advantage of distribution channels. Even if the incumbent’s product is only 80% as good as the new company’s, people are reluctant to spend the effort to take on a new supplier.

So, first, if you are a startup like us, you must ensure that your product is three times better than the traditional product. Second, this is a typical competition between traditional companies and startups. Traditional companies naturally have a lower risk tolerance because they have a large number of customers. If they make a mistake while iterating quickly, it will cause huge losses. Startups, however, can iterate faster, and the iteration process itself can lead to a better product. This is the usual cycle. We have always been proud of our speed of delivery, product quality, and the execution of our team. This is why we have won the deals we have.

Kimberly Tan: Can you make some predictions about the future of AI in the workplace? For example, how will it change employee needs or capabilities, or how human employees and AI Agents interact? What new best practices or norms do you think will become standard in the workplace as AI Agents become more widespread?

Jesse Zhang: The first and most important change is that we are convinced that in the future, employees will spend a lot more time in the workplace building and managing AI Agents, similar to the role of AI supervisors. Even if your position is not officially an “AI supervisor,” a lot of the time you used to spend doing your job will be shifted to managing these Agents, because Agents can give you a lot of leverage.

We have seen this in many deployments where people who were once team leaders now spend a lot of time monitoring AI, for example, to make sure it is not having problems or to make adjustments. They monitor overall performance to see if there are specific areas that need attention, if there are gaps in the knowledge base that could help the AI become better, and whether the AI can fill those gaps.

Our experience working with Agents in these deployments suggests that, in the future, employees will spend a significant amount of time interacting with AI Agents. This is a core belief of our company, as I mentioned earlier. Therefore, our entire product is built around providing people with tools, visualization, interpretability, and control. I think within a year, this will become a huge trend.

Kimberly Tan: That makes a lot of sense. What capabilities do you think AI supervisors will need in the future? What is the skill set for this role?

Jesse Zhang: There are two aspects. One is observability and interpretability, the ability to quickly understand what the AI is doing and how it makes decisions. The other is the decision-making ability, or the building part, how to give feedback and how to build new logic. I think these two are two sides of the same coin.

Kimberly Tan: What tasks do you think will remain beyond the AI agent’s capabilities in the medium or long term and will still need to be managed and carried out correctly by humans?

Jesse Zhang: I think it will mainly depend on the requirement for “perfection” that I mentioned earlier. There are many tasks that have a very low tolerance for error. In these cases, any AI tool is more of an aid than a fully-fledged agent.

For example, in some more sensitive industries, such as healthcare or security, you have to be almost perfect. In these areas, AI Agents may be less autonomous, but that doesn’t mean they’re useless. I think the style will be different. On a platform like ours, you’re actually deploying these Agents to automate the entire job.

Derrick Harris: And that’s all for this episode. If you found this topic interesting or inspiring, please rate our podcast and share it with more people. We expect to release the final episode before the end of the year and will be retooling the content for the new year. Thanks for listening and have a great holiday season (if you’re listening during the holidays).

Original video: Can AI Agents Finally Fix Customer Support?