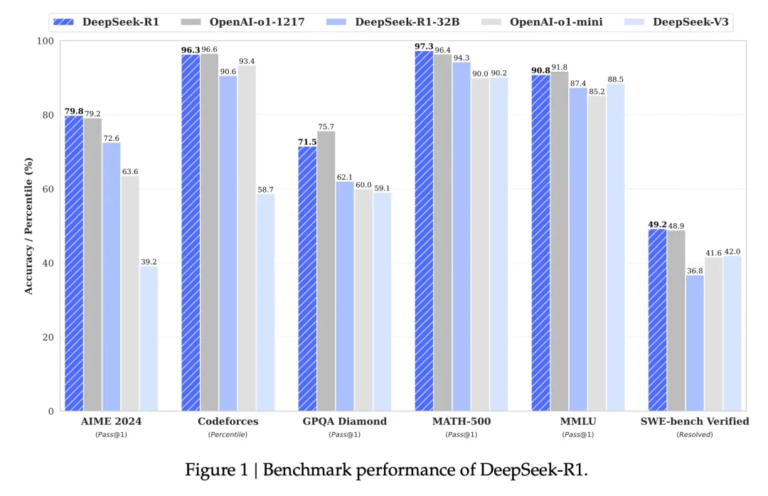

DeepSeek-R1 technology revealed: breaking down the paper's core principles and the keys to the model's performance breakthrough

Today we are sharing the DeepSeek-R1 paper, titled "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning". The paper introduces DeepSeek's first generation of reasoning models, DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero was trained with large-scale reinforcement learning (RL), without supervised fine-tuning (SFT) as a preliminary step,…