Google’s Gemini 2.0 family is finally complete, and it topped the leaderboards as soon as it was released.

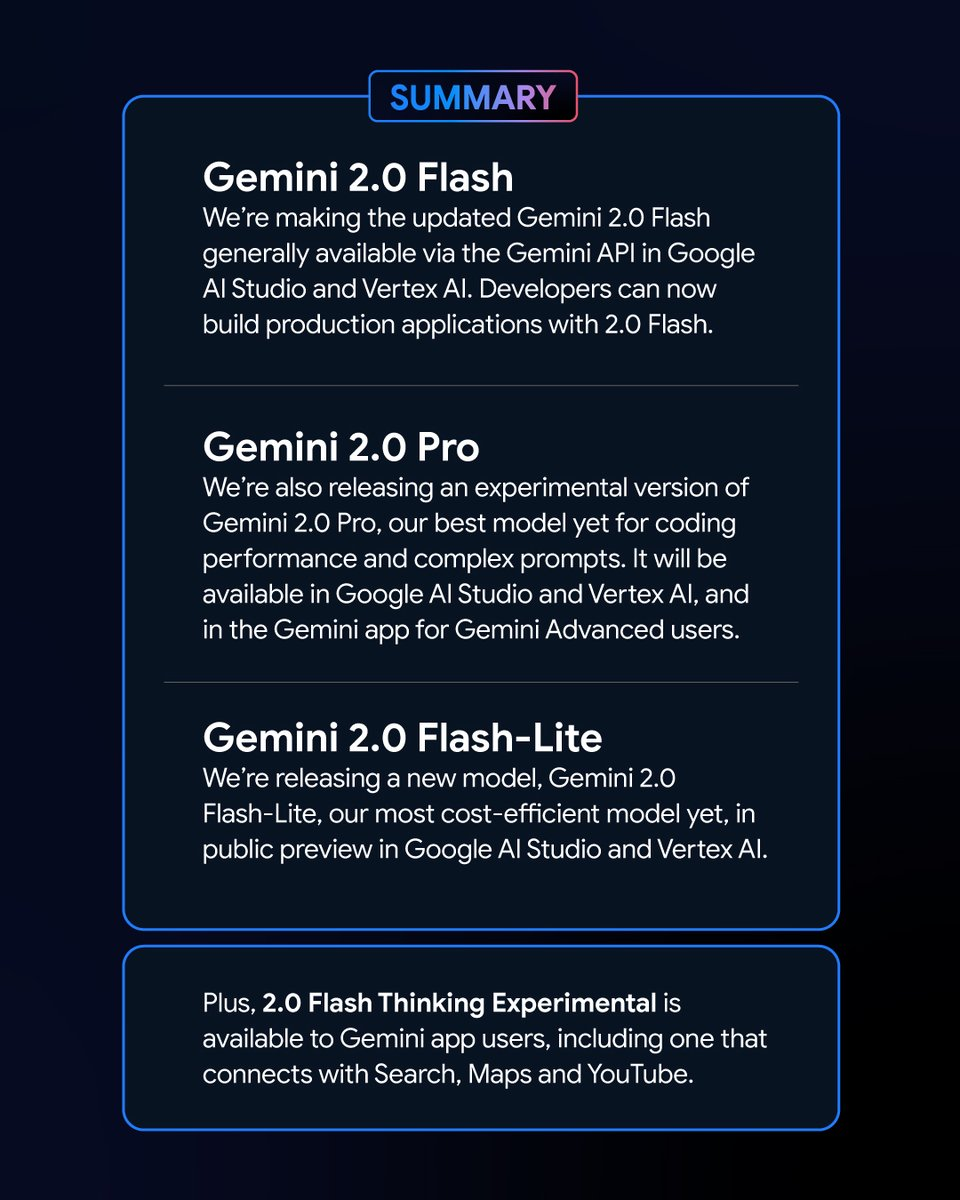

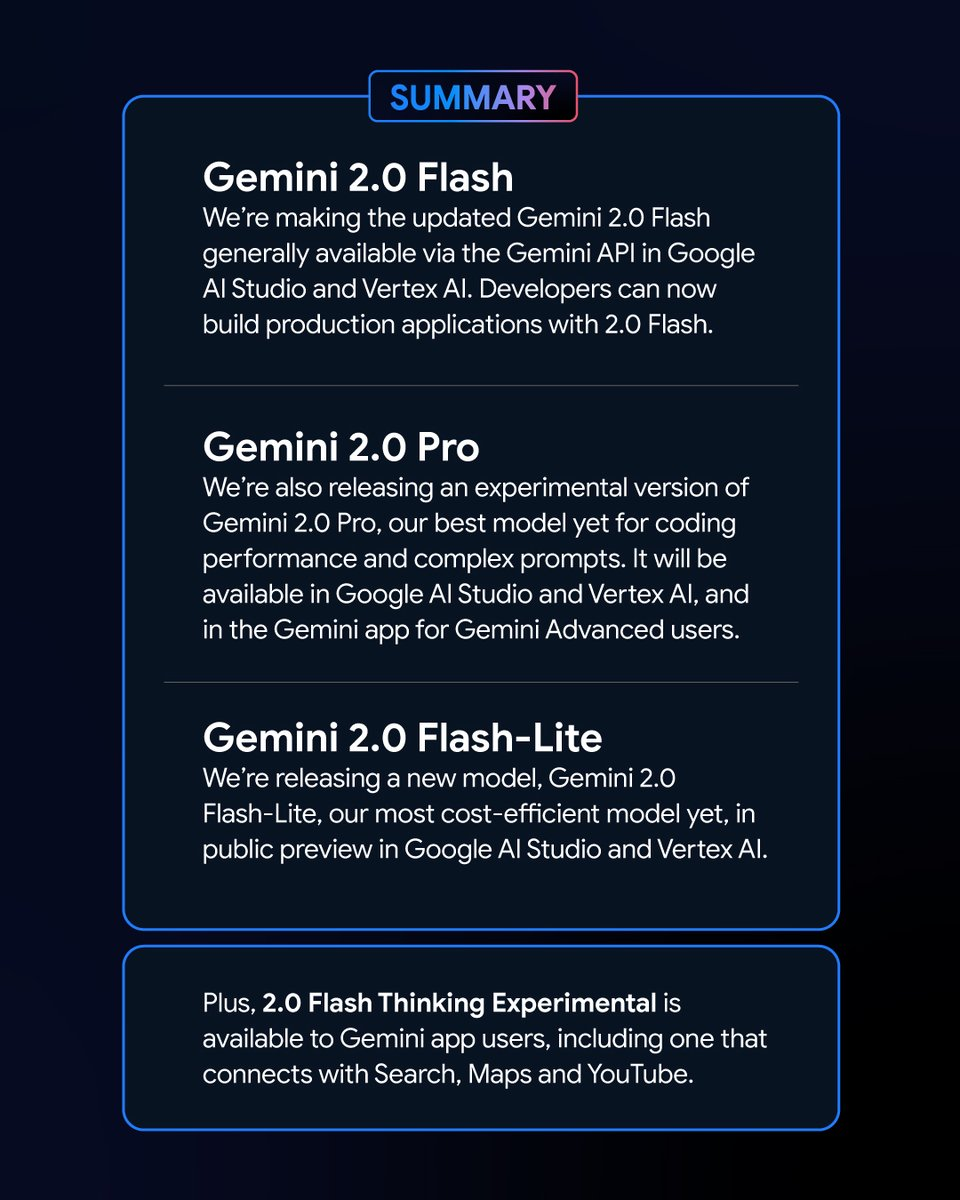

Under pressure from DeepSeek, Qwen and o3, Google released three models at once early this morning: Gemini 2.0 Pro, Gemini 2.0 Flash and Gemini 2.0 Flash-Lite.

On the LMSYS large-model leaderboard, Gemini 2.0 Pro has shot to the top, and every model in the Gemini 2.0 family has made the top 10.

Let’s first look at the model performance

The Gemini 2.0 models released this time all have their own highlights in terms of performance!

Gemini 2.0 Pro (Experimental)

As the flagship model of the Gemini series, the Pro version represents Google’s most advanced AI capabilities, and it excels at coding and reasoning in particular:

- Extra-large context window: supports context processing of up to 2M tokens

- Powerful tool integration: deeply integrates Google search and code execution

- Availability: already available as an experimental version on Google AI Studio, Vertex AI and the Gemini Advanced platform

Gemini 2.0 Flash

Flash is positioned as a “highly efficient workhorse”. It is designed to balance speed and performance, and is aimed at applications that need low-latency responses:

- Million-token context window: Supports a context of 1M tokens

- Excellent multimodal reasoning: Good at processing multimodal data; currently supports multimodal input with text-only output

- Future feature expansion: Image generation and text-to-speech functions will be available soon

- Availability: Officially released on the Vertex AI Studio and Google AI Studio platforms, and can be accessed via the Gemini API.

Gemini 2.0 Flash-Lite (Preview)

As the “most cost-effective” model, Flash-Lite offers the best balance between speed, cost and performance.

- Cost-effectiveness: At the same speed and cost as 1.5 Flash, it outperforms 1.5 Flash on most benchmarks.

- Million-token context window: Also supports a context of 1M tokens.

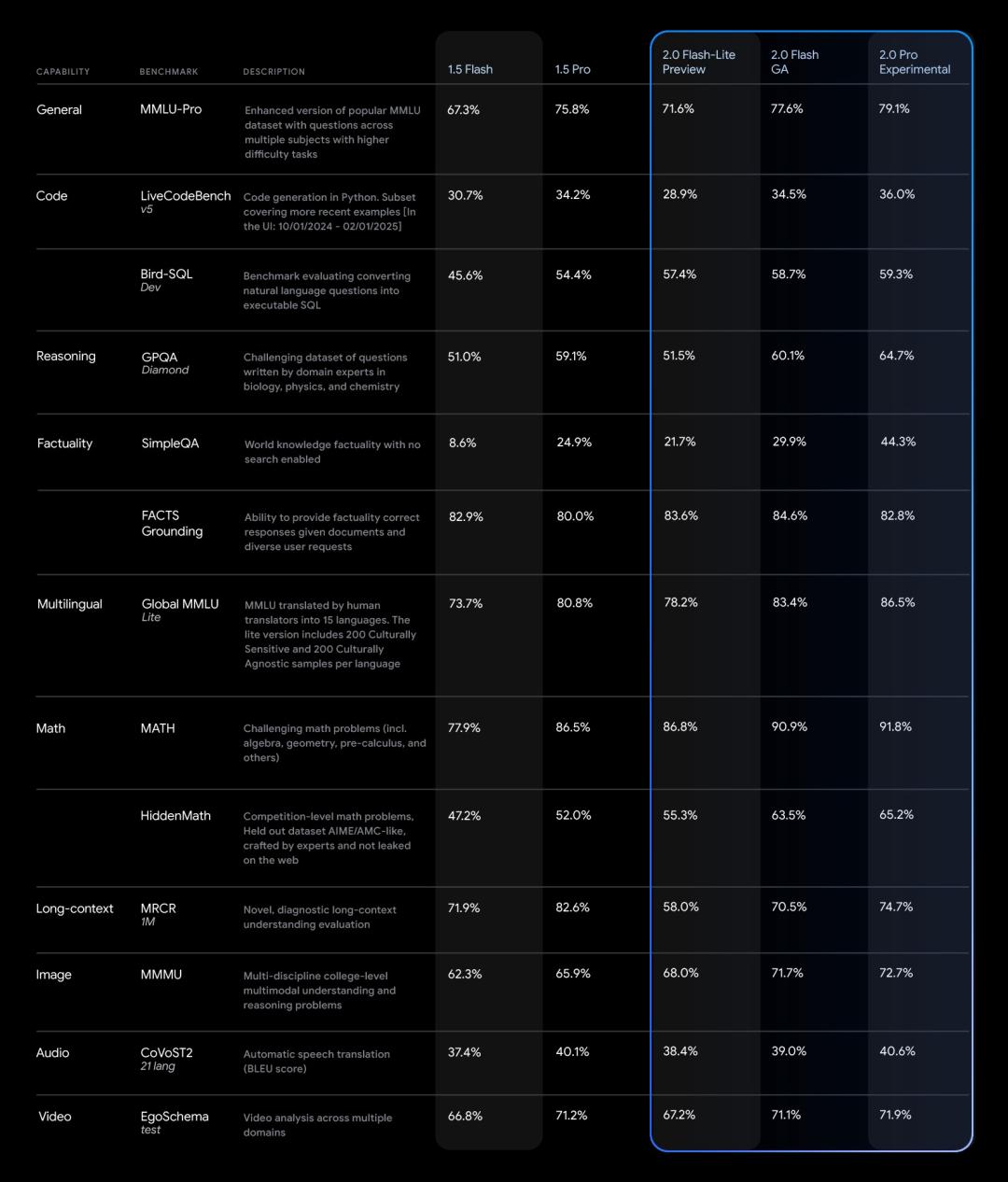

According to the benchmark comparison released by Google, Gemini 2.0 Pro Experimental achieved the highest scores on almost all benchmarks:

It performed particularly well on code generation (such as LiveCodeBench v5) and on complex math problems (such as algebra, geometry and calculus). It also improved significantly on tests of understanding long, complex documents.

And the pricing

Google is also a fair-minded vendor when it comes to API pricing.

Gemini 2.0 Flash costs less than one dollar per million tokens… It supports multimodal input, web search, and an unprecedented context window.

In contrast, DeepSeek V3 currently costs one dollar per million tokens, and the R1 reasoning model costs four dollars.
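To make the comparison concrete, here is a minimal sketch that turns the per-million-token figures quoted above into a bill for a given workload. The article only says Gemini 2.0 Flash costs "less than one dollar", so the sketch treats one dollar as an upper bound rather than a real rate; the function name `cost_usd` and the 2.5M-token workload are made up for illustration.

```python
# Per-million-token prices in USD, taken from the rough figures in this article.
# The Gemini entry is only an upper bound ("less than one dollar"), not an official rate.
PRICE_PER_MILLION_USD = {
    "Gemini 2.0 Flash (upper bound)": 1.00,
    "DeepSeek V3": 1.00,
    "DeepSeek R1": 4.00,
}


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    workload_tokens = 2_500_000  # hypothetical workload size
    for model, price in PRICE_PER_MILLION_USD.items():
        print(f"{model}: ${cost_usd(workload_tokens, price):.2f}")
```

Real price lists usually charge input and output tokens at different rates, so a production estimate would need two prices per model.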

PS: But I still want to thank DeepSeek for lowering the price. Anyone who can lower the price is family.

This is really cheap! I think what people have overlooked about Gemini, even more than its performance, is the price!

Case performance

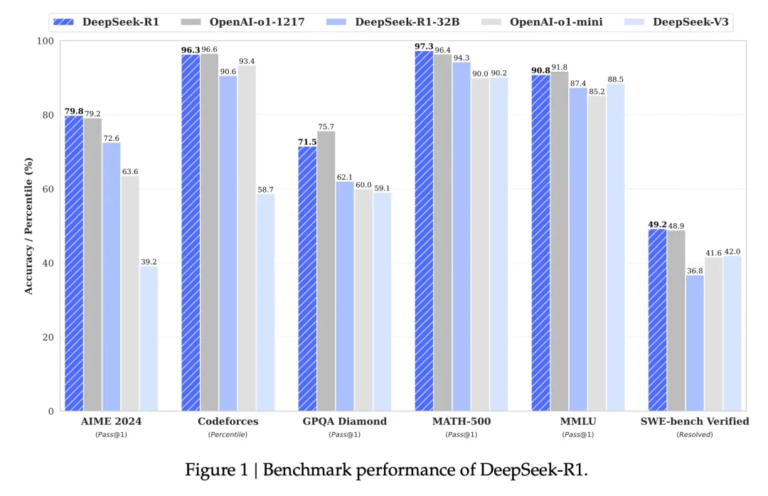

Since it is pitched as a match for DeepSeek, we have to see how it actually performs in practice, and how users online have tested it.

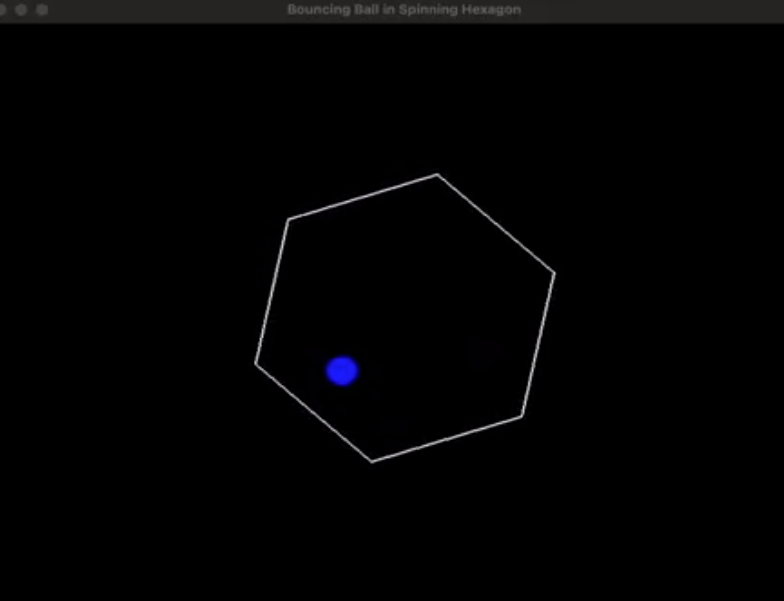

A physics-based pinball game

Let’s first look at this popular case, which uses a physics engine to simulate realistic effects such as collisions, friction, and gravity.

Prompt: Write a Python program that displays a ball bouncing inside a rotating hexagon. The ball should be affected by gravity and friction, and must bounce realistically off the rotating walls

This is how DeepSeek R1 and o3-mini perform:

The version generated by Gemini 2.0 Pro Experimental:

The other two models did not perform well

Double the difficulty! Turn the one ball into 100 balls!
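For readers wondering what the hexagon prompt demands, the core physics can be sketched without any graphics. This is not the code produced by any of the models; it is a minimal illustration in which every constant (radius, gravity, rotation speed `omega`, `restitution`, `friction`) is an assumed value. Each step applies gravity, moves the ball, and reflects its velocity relative to the moving wall whenever it overlaps one of the six edges.

```python
import math


def hexagon_vertices(radius, angle):
    """Vertices of a regular hexagon centered at the origin, rotated by `angle`."""
    return [
        (radius * math.cos(angle + i * math.pi / 3),
         radius * math.sin(angle + i * math.pi / 3))
        for i in range(6)
    ]


def step(pos, vel, angle, dt, *, radius=200.0, ball_r=10.0, gravity=500.0,
         omega=0.5, restitution=0.9, friction=0.98):
    """Advance the ball by one time step and return (new_pos, new_vel)."""
    x, y = pos
    vx, vy = vel
    vy -= gravity * dt  # gravity pulls toward -y
    x += vx * dt
    y += vy * dt

    verts = hexagon_vertices(radius, angle)
    for i in range(6):
        ax, ay = verts[i]
        bx, by = verts[(i + 1) % 6]
        # Inward unit normal: from the edge midpoint toward the center.
        mx, my = (ax + bx) / 2, (ay + by) / 2
        m_len = math.hypot(mx, my)
        nx, ny = -mx / m_len, -my / m_len
        dist = (x - ax) * nx + (y - ay) * ny  # ball center to wall line
        if dist >= ball_r:
            continue
        # Push the ball back so it just touches the wall.
        x += (ball_r - dist) * nx
        y += (ball_r - dist) * ny
        # Velocity of the rotating wall at the contact point.
        cx, cy = x - ball_r * nx, y - ball_r * ny
        wx, wy = -omega * cy, omega * cx
        rvx, rvy = vx - wx, vy - wy           # ball velocity relative to wall
        vn = rvx * nx + rvy * ny
        if vn < 0:  # moving into the wall
            tx, ty = rvx - vn * nx, rvy - vn * ny
            rvx = friction * tx - restitution * vn * nx
            rvy = friction * ty - restitution * vn * ny
            vx, vy = rvx + wx, rvy + wy
    return (x, y), (vx, vy)


def simulate(steps=5000, dt=1 / 120, omega=0.5):
    """Run the simulation and return the final position and the farthest
    distance the ball center ever reached from the hexagon center."""
    pos, vel, angle = (0.0, 0.0), (150.0, 0.0), 0.0
    max_dist = 0.0
    for _ in range(steps):
        angle += omega * dt
        pos, vel = step(pos, vel, angle, dt, omega=omega)
        max_dist = max(max_dist, math.hypot(*pos))
    return pos, max_dist
```

Because the hexagon is convex, treating each edge as an infinite line is enough to keep the ball inside. A full answer to the prompt would add rendering (for example with a game library) on top of this update loop.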

Prompt: Write a script for 100 bouncing bright yellow balls inside a sphere, making sure to handle collision detection correctly. Make the sphere slowly rotate. Make sure the balls stay inside the sphere. Implement in p5.js

Well done! The slow rotation of the sphere is very smooth, and the simulation of physical laws is excellent. The 100 balls are also colliding steadily and “doing their jobs” ~

Prompt: Write a p5.js script to simulate 25 particles bouncing around in a vacuum space inside a cylindrical container. Use a different color for each ball and make sure they leave a trail to show their movement. Add a slow rotation of the container to better observe what is happening in the scene. Make sure to create appropriate collision detection and physics rules to ensure the particles remain inside the container. Add an external spherical container. Add a slow zoom in and out effect to the entire scene.

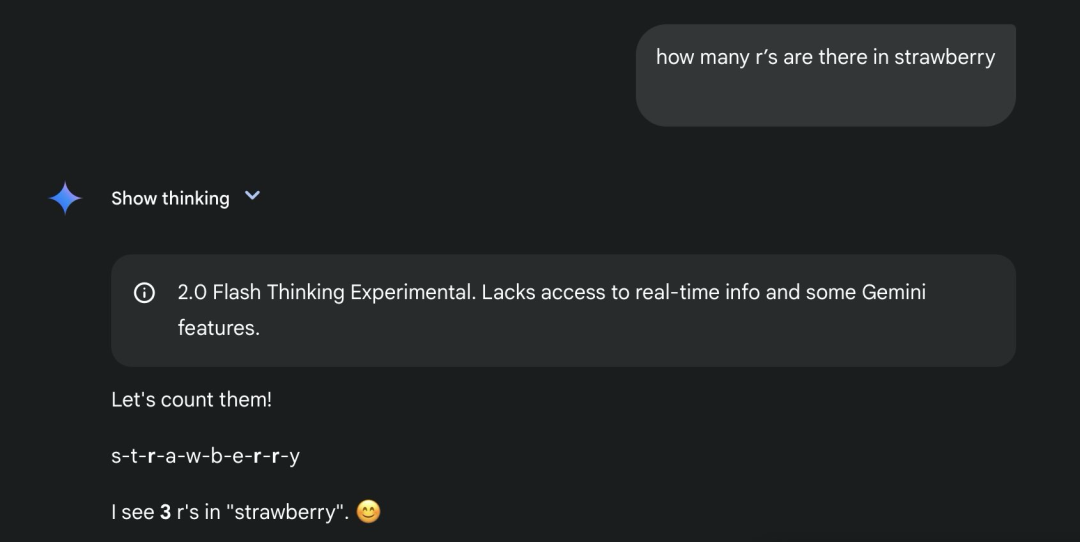

The unavoidable strawberry test

Clever (sly) users have also brought out the classic strawberry test again:

How many r’s are there in strawberry

And Gemini 2.0 Flash Thinking Experimental got the answer right:

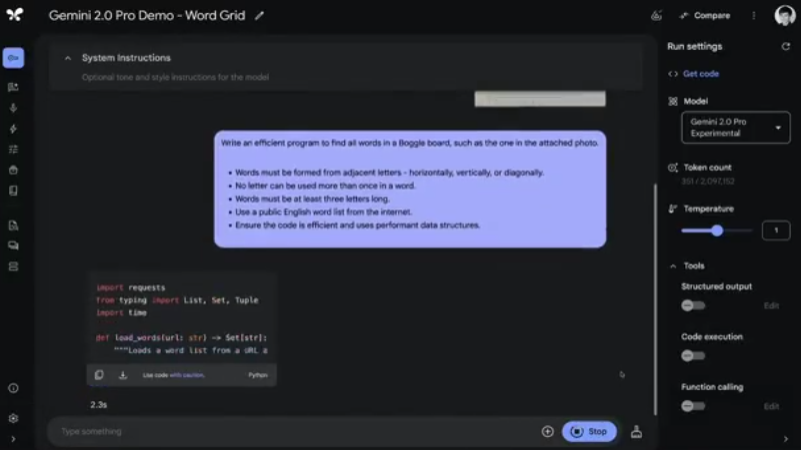
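For reference, the ground truth is trivial to check in code. The question is a popular test because language models read text as tokens rather than individual letters, so character counting is not as automatic for them as it is for a program:

```python
word = "strawberry"
r_count = word.count("r")  # count occurrences of the letter "r"
print(r_count)  # 3
```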

Google’s Jeff Dean personally tested its programming skills

Jeff Dean, chief scientist at Google DeepMind and Google Research, also put Gemini 2.0 Pro’s programming skills to the test:

He had the model tackle the classic Boggle game, and the code it generated on the first attempt found all valid words in the “letter square” game:

Jeff Dean also noted that the code was completed in only 18.9 seconds, which is very fast.

The CEO of Google DeepMind is full of confidence in this major update to the model, saying that this release lays the foundation for Google’s future work on intelligent agents:
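To give a sense of the task, here is a minimal sketch of a Boggle word finder. It is not the code Gemini generated, which the article does not reproduce; the function name `find_words`, the 3x3 board and the tiny word list are all made up for illustration. The approach is a depth-first search from every cell, pruned with a set of valid prefixes, where each cell may be used at most once per word.

```python
def find_words(board, dictionary):
    """Return all dictionary words (3+ letters) that can be traced on the board
    by moving between adjacent cells (including diagonals), using each cell
    at most once per word."""
    words = {w.upper() for w in dictionary if len(w) >= 3}
    # Every prefix of every word, so dead-end paths can be abandoned early.
    prefixes = {w[:i] for w in words for i in range(1, len(w) + 1)}
    rows, cols = len(board), len(board[0])
    found = set()

    def dfs(r, c, path, visited):
        path += board[r][c]
        if path not in prefixes:
            return
        if path in words:
            found.add(path)
        visited.add((r, c))
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if ((dr or dc) and 0 <= nr < rows and 0 <= nc < cols
                        and (nr, nc) not in visited):
                    dfs(nr, nc, path, visited)
        visited.remove((r, c))  # backtrack so other paths can reuse this cell

    for r in range(rows):
        for c in range(cols):
            dfs(r, c, "", set())
    return sorted(found)


if __name__ == "__main__":
    demo_board = ["CAT", "XOS", "YZD"]  # hypothetical 3x3 board
    demo_words = ["cat", "cats", "coat", "dog", "oats"]
    print(find_words(demo_board, demo_words))
```

A real Boggle solver would also load a full dictionary and handle the "Qu" tile, both of which are left out here.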

Google CEO Sundar Pichai has previously made it clear that 2025 will be a critical period for Google to accelerate development in the field of AI. It feels like after this release, Google’s route is clearer!

Compared with the other giants, Google’s AI strategy focuses more on practicality. It offers several versions at once, like an AI toolbox from which you can pick whatever fits your needs.