None of us expected that this is how 2025 would begin in the AI field.

DeepSeek R1 is truly amazing!

Lately, DeepSeek, the so-called “mysterious power from the East,” has had a firm grip on Silicon Valley’s attention.

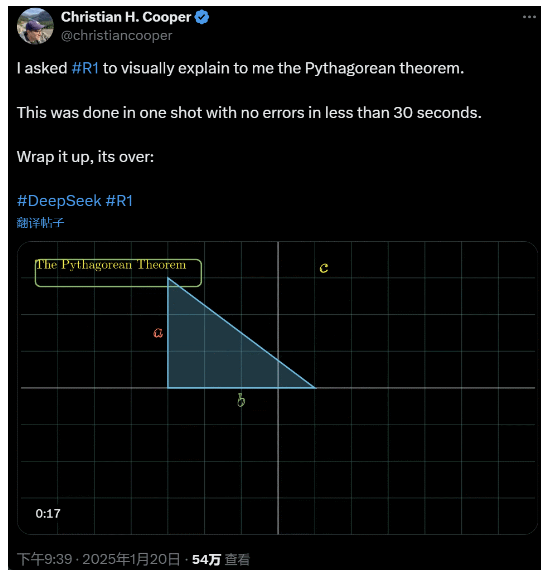

I asked R1 to explain the Pythagorean theorem in detail. The AI did all of this in less than 30 seconds, without a single mistake. In short, it’s over.

Across AI circles in China and abroad, ordinary users have discovered this powerful new AI (which is also open source), and academic experts are calling out that “we must catch up.” There are also rumors that overseas AI companies already see it as a major threat.

Take DeepSeek R1, released this week. Its training route, built largely on reinforcement learning (the R1-Zero variant uses no supervised fine-tuning at all), is startling. From the DeepSeek-V3 base model last December to chain-of-thought capabilities now comparable to OpenAI o1, catching up seems to have been only a matter of time.

But while the AI community is busy reading the technical report and comparing hands-on results, people still have doubts about R1: apart from topping a bunch of benchmarks, can it really lead?

Can it build its own simulations of “physical laws”?

Don’t believe it? Let’s have the large models play with a bouncing ball.

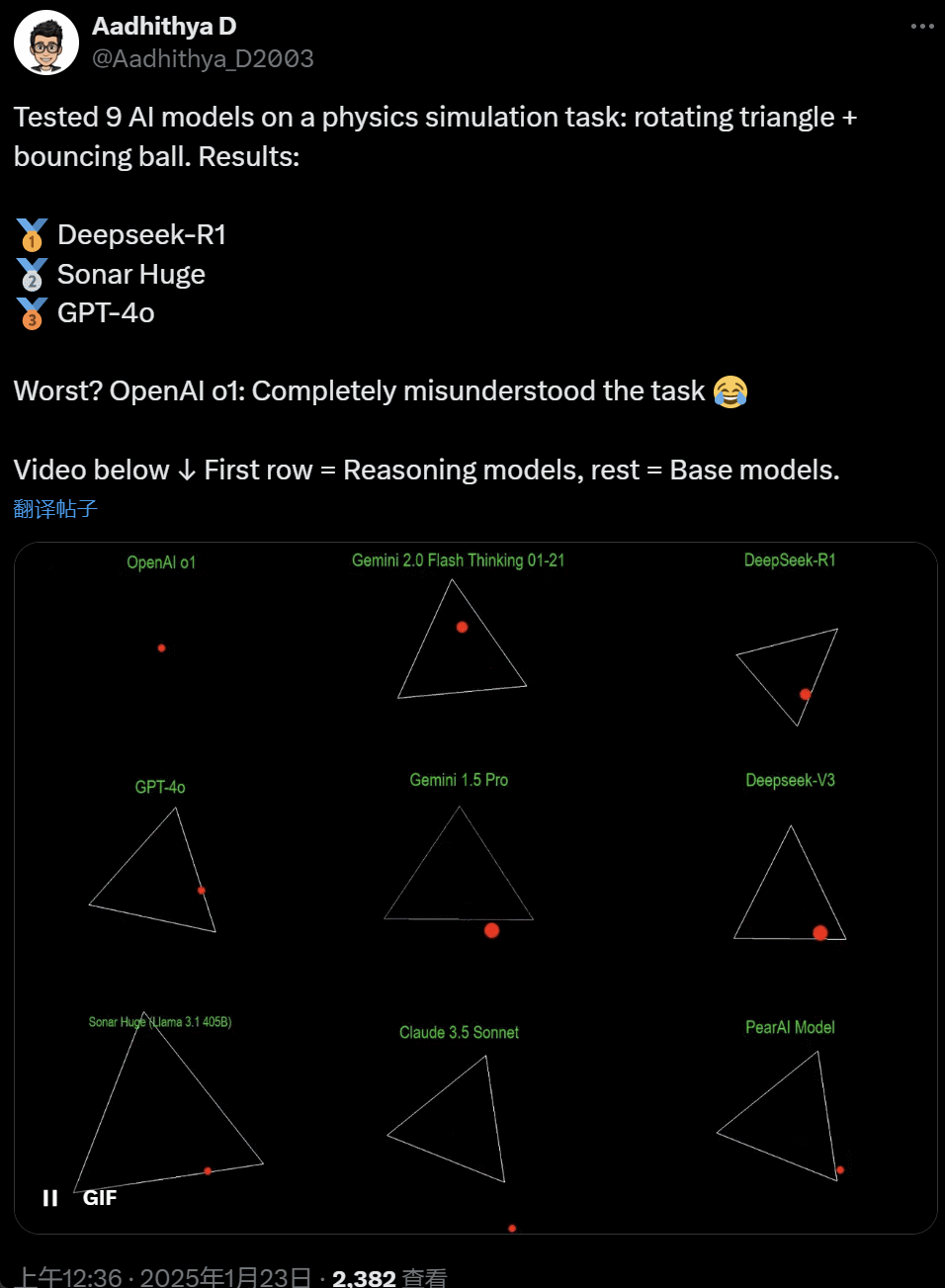

In recent days, some people in the AI community have become obsessed with one test: asking different large AI models (especially the so-called reasoning models) to solve this problem: “Write a Python script to make a yellow ball bounce inside a certain shape. Make the shape rotate slowly and make sure the ball stays inside the shape.”

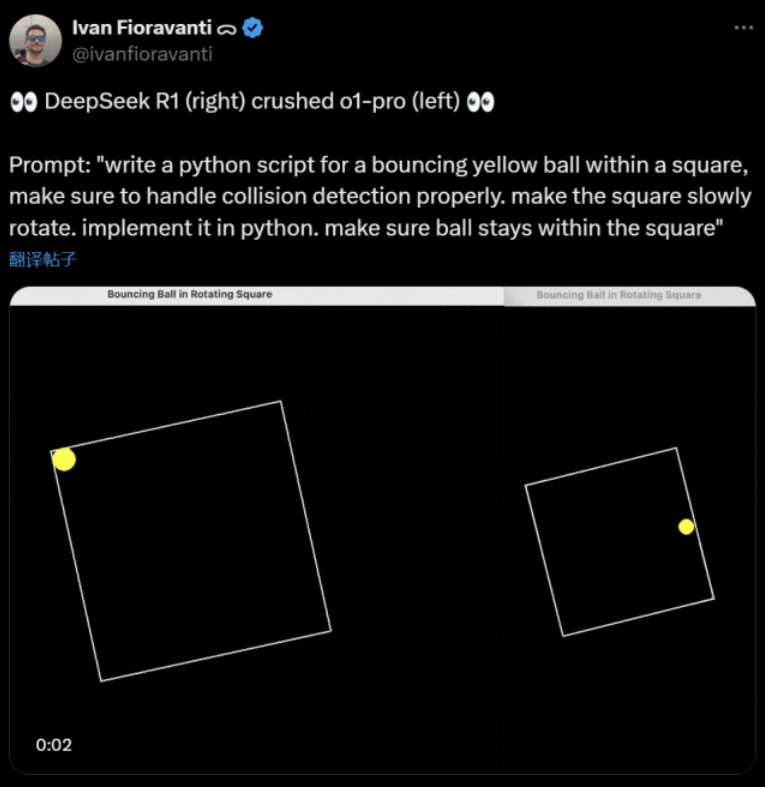

Some models do better than others on this “rotating ball” benchmark. According to CoreView CTO Ivan Fioravanti, R1, the open source large model from Chinese AI lab DeepSeek, beat OpenAI’s o1 pro model, which costs $200 per month as part of OpenAI’s ChatGPT Pro plan.

On the left is OpenAI o1, and on the right is DeepSeek R1. As mentioned above, the prompt here is: “write a python script for a bouncing yellow ball within a square, make sure to handle collision detection properly. make the square slowly rotate. implement it in python. make sure ball stays within the square.”

According to another user on X, Anthropic’s Claude 3.5 Sonnet and Google’s Gemini 1.5 Pro misjudged the physics, letting the ball escape the shape. Other users reported that Google’s latest Gemini 2.0 Flash Thinking Experimental, as well as the relatively older OpenAI GPT-4o, passed the test on the first try.

But there is a way to tell the difference here:

Commenters under this tweet said that o1 was originally very capable, but became weaker after OpenAI optimized it for speed, even in the $200/month subscription version.

Simulating a bouncing ball is a classic programming challenge. An accurate simulation requires collision detection algorithms, which must identify when two objects (such as the ball and a side of the shape) collide. A poorly written algorithm can hurt the simulation’s performance or cause obvious physical errors.
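To make the collision-detection step concrete, here is a minimal Python sketch of one common approach: find the point on a wall segment closest to the ball, and if it is nearer than the ball’s radius, reflect the velocity about the wall normal. The function names (`closest_point_on_segment`, `collide_ball_with_edge`) are hypothetical, the wall is assumed to be static, and this is not the code any of the tested models produced.

```python
import math

def closest_point_on_segment(p, a, b):
    """Return the point on segment a-b that is closest to point p."""
    abx, aby = b[0] - a[0], b[1] - a[1]
    length_sq = abx * abx + aby * aby
    if length_sq == 0.0:
        return a  # degenerate segment: a and b coincide
    # Project p onto the line through a and b, then clamp to the segment.
    t = ((p[0] - a[0]) * abx + (p[1] - a[1]) * aby) / length_sq
    t = max(0.0, min(1.0, t))
    return (a[0] + t * abx, a[1] + t * aby)

def collide_ball_with_edge(pos, vel, radius, a, b):
    """Resolve a ball against one static edge; return (pos, vel, hit)."""
    cx, cy = closest_point_on_segment(pos, a, b)
    dx, dy = pos[0] - cx, pos[1] - cy
    dist = math.hypot(dx, dy)
    if dist >= radius or dist == 0.0:
        # No overlap (or centre exactly on the wall, which this sketch skips).
        return pos, vel, False
    nx, ny = dx / dist, dy / dist  # unit normal pointing from wall to ball
    v_dot_n = vel[0] * nx + vel[1] * ny
    if v_dot_n < 0.0:
        # Ball is moving into the wall: mirror the normal component.
        vel = (vel[0] - 2.0 * v_dot_n * nx, vel[1] - 2.0 * v_dot_n * ny)
    # Push the ball back out so it no longer overlaps the wall.
    overlap = radius - dist
    pos = (pos[0] + nx * overlap, pos[1] + ny * overlap)
    return pos, vel, True
```

One of the “obvious physical errors” the paragraph mentions shows up if the ball moves more than its radius in a single time step: its centre can jump across the wall, the normal flips, and the ball escapes. A fuller simulation would use smaller steps or a swept test to guard against that.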

N8 Programs, a researcher at AI startup Nous Research, said it took him about two hours to write a bouncing ball in a rotating heptagon from scratch. “Multiple coordinate systems must be tracked, an understanding of how collisions are handled in each system is required, and the code must be designed from scratch to be robust.”
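The “multiple coordinate systems” point can be illustrated with a short Python sketch. It assumes a square (as in the prompt quoted earlier, rather than a heptagon) rotating counterclockwise about its centre. The helper names are hypothetical and are not taken from N8 Programs’ code. The idea is to convert the ball’s world position into the shape’s own rotating frame, where the walls are axis-aligned and the containment check is trivial.

```python
import math

def rotate(v, angle):
    """Rotate 2D vector v counterclockwise by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def world_to_local(p, center, angle):
    """Express world point p in the frame of a shape rotated by angle."""
    return rotate((p[0] - center[0], p[1] - center[1]), -angle)

def local_to_world(p, center, angle):
    """Inverse of world_to_local."""
    r = rotate(p, angle)
    return (r[0] + center[0], r[1] + center[1])

def ball_inside_square(p_world, center, angle, half_size, radius):
    """True if a ball of the given radius lies fully inside the rotated square."""
    x, y = world_to_local(p_world, center, angle)
    limit = half_size - radius  # shrink the square by the ball radius
    return abs(x) <= limit and abs(y) <= limit

def wall_velocity(p_world, center, omega):
    """World-frame velocity of a point on the shape spinning at omega rad/s."""
    rx, ry = p_world[0] - center[0], p_world[1] - center[1]
    return (-omega * ry, omega * rx)  # 2D cross product of omega and r
```

`wall_velocity` matters because the walls themselves are moving: a faithful bounce reflects the ball’s velocity relative to the wall at the contact point, not its raw world velocity.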

Although a ball bouncing inside a spinning shape is a reasonable test of programming skill, it is still a new kind of task for large models, and even small changes to the prompt can produce different results. It would need refinement before it could become part of a benchmark suite for large AI models.

In any case, after this wave of practical tests, we have a sense of the differences in capabilities between large models.

DeepSeek is the new “Silicon Valley myth”

DeepSeek is causing panic across the Pacific.

Meta employees have posted that “Meta engineers are frantically analyzing DeepSeek to try to copy anything they can from it.”

Alexandr Wang, founder of AI startup Scale AI, also stated publicly that the performance of DeepSeek’s large AI model is roughly on par with the best models in the United States.

He also believes that the United States may have been ahead of China in the AI race over the past decade, but that DeepSeek’s release of its large AI model may “change everything.”

X user @8teAPi argues that DeepSeek is not a “side project” but is more like Lockheed Martin’s storied “Skunk Works.”

“Skunk Works” refers to a highly confidential, relatively independent small team that Lockheed Martin originally set up to develop advanced aircraft through cutting-edge or unconventional research and development. The U-2 reconnaissance aircraft, the SR-71 Blackbird, the F-22 Raptor, and the F-35 Lightning II fighter all came from it.

The term later became a generic label for small but excellent, relatively independent, and more flexible innovation teams set up within large companies or organizations.

He gave two reasons:

- On the one hand, DeepSeek has a large number of GPUs, reportedly more than 10,000, and Alexandr Wang, CEO of Scale AI, even said it could reach 50,000.

- On the other hand, DeepSeek only recruits talent from the top three universities in China, which means that DeepSeek competes for talent on the same level as Alibaba and Tencent.

These two facts alone show that DeepSeek has clearly achieved commercial success and is well-known enough to obtain these resources.

As for DeepSeek’s development costs, the blogger said that Chinese technology companies can receive a variety of subsidies, such as low electricity costs and land use.

Therefore, it is very likely that most of DeepSeek’s costs have been “placed” in an account outside the core business or in the form of some kind of data center construction subsidy.

Perhaps no one other than the founders fully understands all the financial arrangements. Some agreements may simply be “verbal agreements” settled on reputation alone.

Regardless, a few things are clear:

- The model is excellent, comparable to the version OpenAI released two months ago, though it may well fall short of the new models that OpenAI and Anthropic have yet to release.

- For now, the research direction is still set by American companies. The DeepSeek model is a “fast follow” of o1, but DeepSeek’s research and development is moving very quickly, and it is catching up faster than expected. They are not plagiarizing or cheating; at most, they are reverse engineering.

- DeepSeek is mainly training its own talent, rather than relying on American-trained PhDs, which greatly expands the talent pool.

- Compared with US companies, DeepSeek faces fewer constraints around intellectual property licensing, privacy, security, politics, and so on, and fewer worries about wrongfully using data that people do not want models trained on. That means fewer lawsuits, fewer lawyers, and fewer concerns.

There is no doubt that more and more people believe 2025 will be a decisive year, and companies are gearing up for it. Meta, for example, is building a 2GW+ data center, expects to invest $60-65 billion in 2025, and plans to have more than 1.3 million GPUs by the end of the year.

Meta even used a chart to compare its 2-gigawatt data center with Manhattan, New York.

But now DeepSeek has done better with lower cost and fewer GPUs. How can this not make people anxious?

Yann LeCun: We have open source to thank

Yuchen Jin, CTO and co-founder of Hyperbolic, posted that in just 4 days, DeepSeek-R1 has proven 4 facts to us:

- Open source AI is only 6 months behind closed source AI

- China is dominating the open source AI competition

- We are entering the golden age of large language model reinforcement learning

- Distilled models are very powerful, and we will run highly intelligent AI on mobile phones

The chain reaction triggered by DeepSeek is still unfolding: OpenAI o3-mini is being made freely available, the community hopes to see fewer vague discussions about AGI/ASI, and Meta is rumored to be in a panic.

He believes that it is difficult to predict who will ultimately win, but we should not forget the power of the latecomer’s advantage. After all, we all know that it was Google that invented the Transformer, while OpenAI unlocked its true potential.

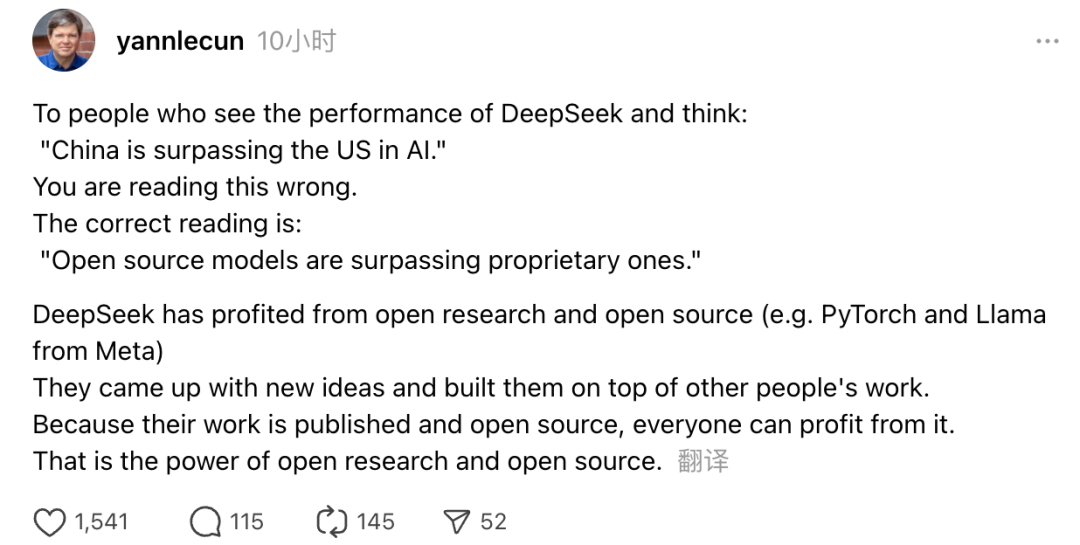

In addition, Turing Award winner and Meta’s Chief AI Scientist Yann LeCun also expressed his views.

“For those who, upon seeing DeepSeek’s performance, think, ‘China is overtaking the US in AI,’ you have it wrong. The correct understanding is that open source models are overtaking proprietary models.”

LeCun said that DeepSeek has made such a splash this time because it benefited from open research and open source (such as Meta’s PyTorch and Llama). DeepSeek came up with new ideas and built on the work of others. Because its work is publicly released and open source, everyone can benefit from it. This is the power of open research and open source.

The online discussion continues. People are excited about the new technology, but there is also a hint of anxiety in the air. After all, the emergence of DeepSeek may have a real impact.