Abstract

This paper introduces DeepSeek’s first-generation reasoning models, DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero, trained through large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, demonstrates remarkable reasoning capabilities: through RL alone, it naturally develops powerful reasoning behaviors. However, it suffers from poor readability and language mixing. To address these issues and further enhance reasoning performance, DeepSeek introduced DeepSeek-R1, which incorporates multi-stage training and cold-start data before RL. DeepSeek-R1 achieves performance comparable to OpenAI-o1-1217 on reasoning tasks. To support the research community, DeepSeek open-sources both models, as well as six dense models (1.5B, 7B, 8B, 14B, 32B, 70B) distilled from DeepSeek-R1 and built on Qwen and Llama.

Key Contributions

Post-Training: Large-Scale Reinforcement Learning

- Successfully applied RL directly to the base model without SFT

- Developed DeepSeek-R1-Zero, demonstrating capabilities like self-verification and reflection

- First open research to validate that the reasoning capabilities of LLMs can be incentivized purely through RL, without SFT

- Introduced a pipeline for DeepSeek-R1 with two RL stages and two SFT stages
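The summary does not say how RL is applied to the base model. For context, the DeepSeek-R1 paper describes rule-based rewards (an accuracy reward and a format reward) optimized with Group Relative Policy Optimization (GRPO), which scores each sampled response relative to the other responses sampled for the same prompt. The sketch below illustrates only that reward-and-advantage step under simplifying assumptions: the `<think>`/`<answer>` regex, the exact-match answer check, the equal-weight sum of the two rewards, and all function names are hypothetical, and the policy update itself is omitted.

```python
import re
import statistics

# Assumed response template (hypothetical simplification): reasoning inside
# <think>...</think>, followed by the final answer inside <answer>...</answer>.
TEMPLATE = re.compile(r"^<think>.+?</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL)


def format_reward(response: str) -> float:
    """1.0 if the response follows the assumed tag template, else 0.0."""
    return 1.0 if TEMPLATE.match(response) else 0.0


def accuracy_reward(response: str, reference: str) -> float:
    """1.0 if the extracted answer exactly matches the reference.
    Hypothetical exact-match check; real math grading needs answer normalization."""
    match = TEMPLATE.match(response)
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each reward against its group: (r_i - mean) / std.
    Uses the population std; eps guards against an all-equal group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# A group of G = 4 sampled responses to one prompt whose reference answer is "42".
group = [
    "<think>6 * 7 = 42</think> <answer>42</answer>",
    "<think>6 + 7 = 13</think> <answer>13</answer>",
    "The answer is 42.",  # correct answer, but ignores the template entirely
    "<think>6 * 7 = 42, double-checked</think><answer>42</answer>",
]
# Assumption: accuracy and format rewards are summed with equal weight.
rewards = [accuracy_reward(r, "42") + format_reward(r) for r in group]
advantages = group_relative_advantages(rewards)
print(rewards)  # [2.0, 1.0, 0.0, 2.0]
print([round(a, 3) for a in advantages])
```

Because advantages are normalized within each group, a response is reinforced only to the extent that it beats its siblings, which is how GRPO obtains a baseline without training a separate critic model.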

Distillation: Empowering Smaller Models

- Demonstrated that reasoning patterns from larger models can be effectively distilled into smaller ones

- Open-sourced DeepSeek-R1 and provided its API to help the research community distill better smaller models

- Fine-tuned several widely used dense models on reasoning data generated by DeepSeek-R1, achieving strong benchmark results

- Distilled models significantly outperform previous open-source models

Evaluation Results

Reasoning Tasks

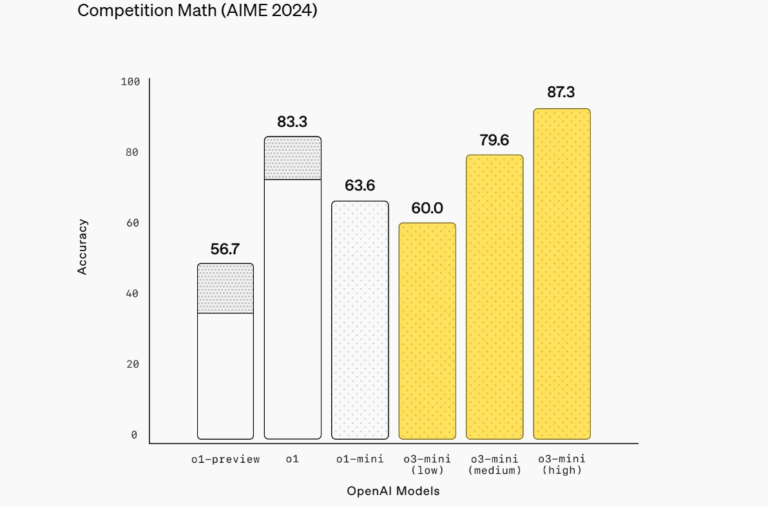

- DeepSeek-R1 achieves 79.8% Pass@1 on AIME 2024, slightly surpassing OpenAI-o1-1217

- Scores 97.3% on MATH-500, performing on par with OpenAI-o1-1217

- Expert-level performance on competitive programming, with a 2,029 Elo rating on Codeforces (outperforming 96.3% of human participants)
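The Pass@1 figures above are averages over several sampled responses rather than a single greedy decode. The DeepSeek-R1 paper estimates, for each question, pass@1 = (1/k) · Σ p_i, where p_i ∈ {0, 1} is the correctness of the i-th of k sampled responses, and then averages over the benchmark. A minimal sketch, assuming each response has already been judged correct or incorrect; the question IDs and judgments below are made up for illustration.

```python
from statistics import fmean


def pass_at_1(correct: list[bool]) -> float:
    """Pass@1 for one question: the mean correctness p_i over its k samples."""
    if not correct:
        raise ValueError("need at least one sampled response")
    return fmean(1.0 if c else 0.0 for c in correct)


def benchmark_pass_at_1(per_question: dict[str, list[bool]]) -> float:
    """Benchmark score: per-question Pass@1 averaged over all questions."""
    return fmean(pass_at_1(samples) for samples in per_question.values())


# Illustrative (made-up) correctness judgments for k = 4 samples per question.
results = {
    "q1": [True, True, True, False],
    "q2": [False, False, True, False],
    "q3": [True, True, True, True],
}
score = benchmark_pass_at_1(results)
print(f"{score:.1%}")  # 66.7%
```

Averaging over k samples gives a lower-variance estimate than scoring one response per question, which matters for small benchmarks such as AIME 2024 with only 30 problems.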

Knowledge Tasks

- Outstanding results on MMLU (90.8%), MMLU-Pro (84.0%), and GPQA Diamond (71.5%)

- Surpasses closed-source models other than OpenAI-o1-1217 on these education-oriented knowledge benchmarks, while scoring slightly below o1-1217

- Outperforms DeepSeek-V3 on the factual benchmark SimpleQA

General Capabilities

- Excels in creative writing, question answering, editing, and summarization

- A length-controlled win rate of 87.6% on AlpacaEval 2.0 and a 92.3% win rate on ArenaHard

- Substantially outperforms DeepSeek-V3 on long-context understanding benchmarks

Future Work

The team plans to focus on:

- Enhancing general capabilities in areas like function calling and complex role-playing

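- Addressing language mixing, since DeepSeek-R1 is currently optimized for Chinese and English and may mix languages when handling queries in other languages

- Improving prompt engineering, since DeepSeek-R1 is sensitive to prompts and few-shot prompting degrades its performance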

- Enhancing performance on software engineering tasks

Conclusion

DeepSeek-R1 represents a significant advancement in AI reasoning capabilities through reinforcement learning. The success of both the main model and its distilled versions demonstrates the potential of this approach for developing more capable AI systems. The open-source release of these models will contribute to further research and development in the field.