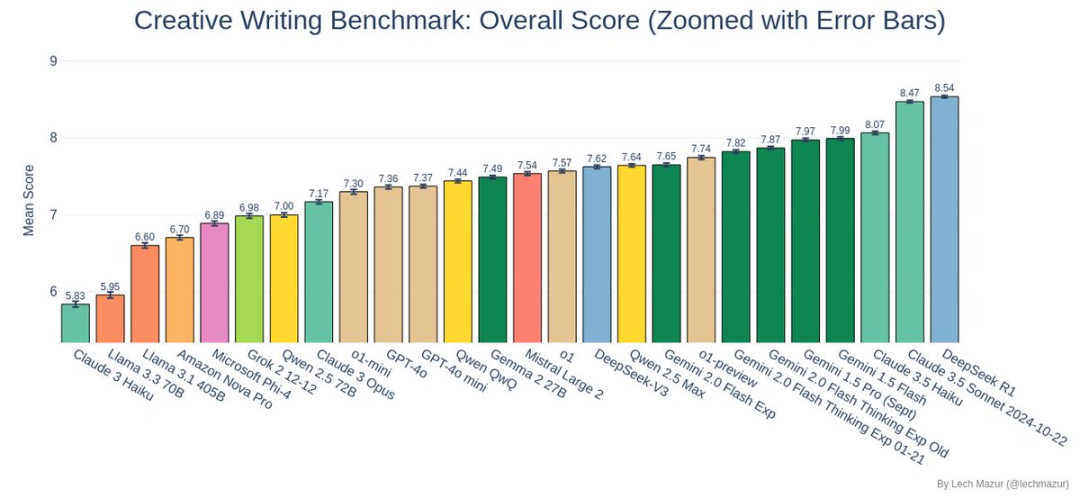

DeepSeek R1 has taken first place in a creative short-story writing benchmark, overtaking the previous leader, Claude 3.5 Sonnet!

Benchmark test

The benchmark test designed by researcher Lech Mazur is not your average writing competition.

Each AI model was required to write 500 short stories, and each story had to incorporate 10 randomly assigned elements. This is a demanding open-ended writing task: the model must produce a complete storyline while integrating every assigned element naturally.

Judging method

This benchmark uses an unusual scoring system: six leading language models act as judges and score various aspects of each story. In other words, AI models grade the work of other AI models, which provides a relatively fair and systematic evaluation standard overall.
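To make the judging setup concrete, here is a minimal sketch of one way several judges' scores could be combined into a single story score. It assumes each judge gives one score per dimension and that the final score is a plain mean, first across dimensions and then across judges; the benchmark's actual aggregation may differ, and the judge names and numbers are hypothetical.

```python
from statistics import mean

# Hypothetical scores for one story: judge name -> per-dimension scores (0-10 scale assumed)
story_scores = {
    "judge_a": [8.0, 7.5, 8.5],
    "judge_b": [7.0, 8.0, 7.5],
    "judge_c": [9.0, 8.5, 8.0],
}


def story_score(scores_by_judge: dict[str, list[float]]) -> float:
    """Average each judge's dimension scores, then average across judges (assumed scheme)."""
    per_judge = [mean(dims) for dims in scores_by_judge.values()]
    return mean(per_judge)


print(round(story_score(story_scores), 2))
```

Averaging per judge first gives every judge equal weight even if judges scored different numbers of dimensions; that is a design choice of this sketch, not something the article specifies.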

Test results

The chart above shows the correlation analysis of the graders in the creative writing benchmark. Acting as a grader, DeepSeek has a correlation coefficient above 0.93 with the other mainstream grader models (Claude, GPT-4o, Gemini and Grok). This means its judgments of creative writing quality are highly consistent with those of other top models, which indirectly supports its reliability in this test.
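A correlation coefficient between two graders is computed over the scores they gave to the same set of stories. The article does not say which coefficient was used, so the sketch below assumes the Pearson coefficient; the two score lists are hypothetical and serve only to show the calculation.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two graders' scores for the same stories."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    # Covariance numerator and the two spread terms (the 1/n factors cancel out)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a grader with constant scores has undefined correlation")
    return cov / (sx * sy)


# Hypothetical scores two graders gave the same five stories
judge_1 = [7.0, 8.0, 6.5, 9.0, 7.5]
judge_2 = [7.2, 8.1, 6.8, 8.9, 7.4]
print(round(pearson(judge_1, judge_2), 3))
```

A value near 1 means the two graders rank and space the stories similarly, even if one grader is systematically harsher, since Pearson correlation ignores constant offsets between the two lists.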

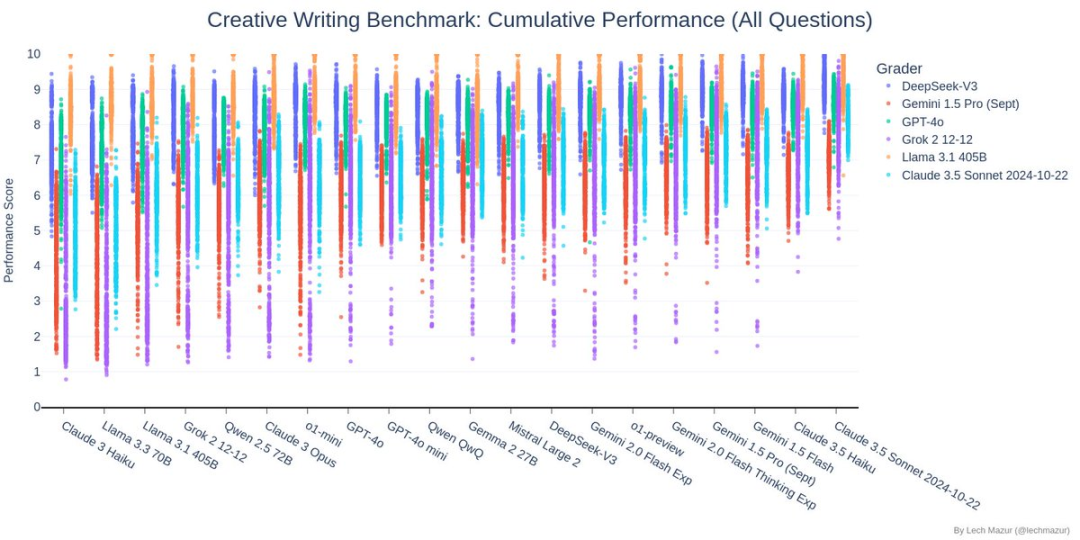

The chart above shows the results of the creative short-story writing benchmark, in which each AI model wrote 500 stories that each had to contain 10 specified random elements. The points show the distribution of each model's scores as assigned by the different grader models (represented by different colors).

In the test, DeepSeek (dark blue points) performed well: most of its points sit in the upper half of the chart and are tightly clustered, showing consistently strong creative writing ability.

This performance allowed it to overtake the previous champion, Claude 3.5 Sonnet, and become the new leader of the benchmark.

In this chart, each row represents an AI model and each column an evaluation dimension (such as characterization or plot coherence). DeepSeek sits in the upper middle of the chart with a mostly orange-yellow hue, indicating strong results on most dimensions. In particular, it scored close to 8 points on the key dimensions of execution (Q6), characterization (TA), and plot development (TJ). Although it may not be the brightest yellow on any single dimension, it has no obvious weaknesses.

As the chart shows, DeepSeek's story scores mostly fall between 7 and 9 points, within a fairly narrow range. Interestingly, its trend line is almost horizontal, meaning its scores are largely unrelated to story length: whether it writes a long story or a short one, DeepSeek maintains consistently high quality. In other words, its scores come from the quality of the writing rather than from sheer length, and its performance holds up across stories of different lengths.
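A "nearly horizontal trend line" means the fitted slope of score against story length is close to zero. The article does not say how the trend line was fitted, so this sketch assumes an ordinary least-squares line; the (length, score) pairs are hypothetical and only illustrate what a flat fit looks like.

```python
def fit_line(lengths: list[float], scores: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of score = slope * length + intercept."""
    n = len(lengths)
    if n != len(scores) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 points")
    mx = sum(lengths) / n
    my = sum(scores) / n
    sxx = sum((x - mx) ** 2 for x in lengths)
    if sxx == 0:
        raise ValueError("all stories have the same length; slope is undefined")
    sxy = sum((x - mx) * (y - my) for x, y in zip(lengths, scores))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


# Hypothetical story lengths (words) and scores for one model
lengths = [400.0, 550.0, 700.0, 850.0, 1000.0]
scores = [8.1, 7.9, 8.2, 8.0, 8.1]
slope, intercept = fit_line(lengths, scores)
print(f"score change per 100 extra words: {slope * 100:.3f}")
```

Note that a slope near zero only says scores do not trend with length on average; the spread of scores around the line is a separate measure of consistency.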

Why did DeepSeek R1 win?

Judging from the test results, DeepSeek R1 performed remarkably well:

- Comprehensive story integration: R1 showed notable flexibility and creativity when handling different combinations of story elements.

- Stable output quality: the score distribution chart shows that R1 not only had a high average score but also performed consistently, with little fluctuation.

- Outstanding creative performance: in this benchmark, the stories written by R1 were rated among the top three overall, which underlines its strong creative writing ability.

How did the other contestants perform?

In addition to the exciting showdown between DeepSeek R1 and Claude 3.5 Sonnet, the performance of other models is also worth noting:

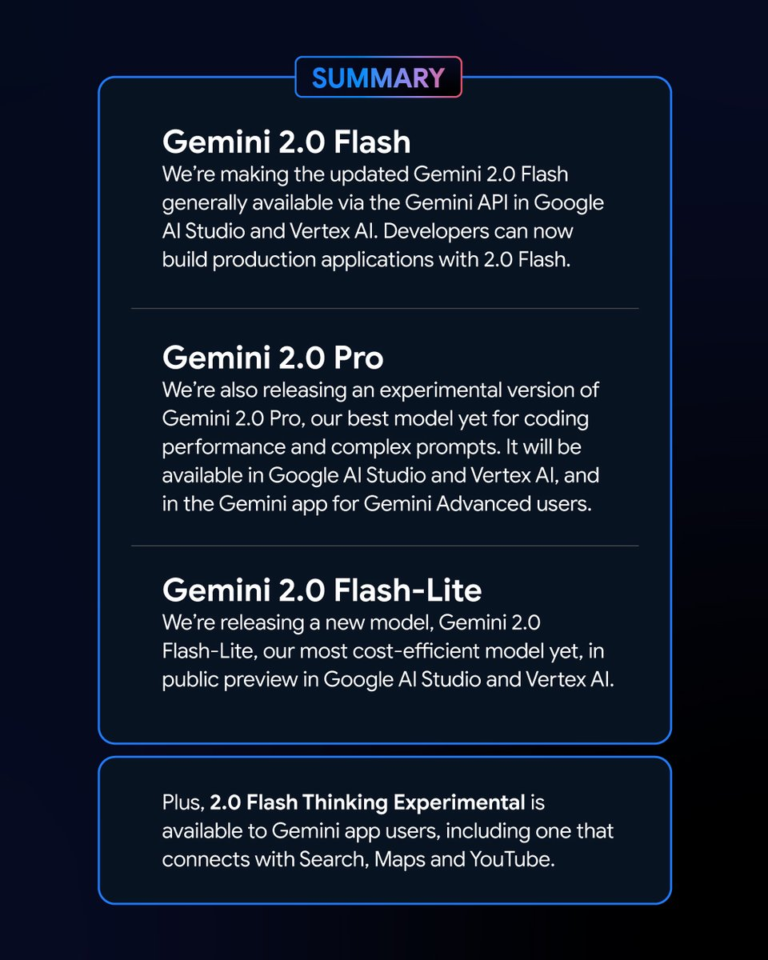

- The Gemini series performed well

- The Llama 3.x series struggled a bit in this test

- o3-mini performed poorly, ranking 22nd

Finally

DeepSeek R1’s breakthrough in this test shows the considerable potential of AI in creative fields. Although AI-generated creative work is still improving, results like these give us plenty to look forward to.

For more details on the test, visit Lech Mazur’s GitHub for the full data and examples of the best stories. Let’s look forward to more breakthroughs in AI creative writing together!