Many people have already started to deploy and use DeepSeek large language models locally, with Chatbox as the graphical front end.

This article introduces two more tools for managing and interacting with large language models, and compares all three in detail to help you use these models more efficiently.

In 2025, with AI technology booming, how do you choose an AI assistant tool that can manage multiple models and support efficient collaboration?

This article looks at three popular AI assistant tools, Cherry Studio, AnythingLLM, and Chatbox, along three dimensions: functional positioning, unique advantages, and applicable scenarios. It should help you match a tool to your needs and work more efficiently. If you want to make better use of these AI tools, keep reading!

A quick summary

I have installed and tried out all three tools. Here is a quick summary of my first impressions:

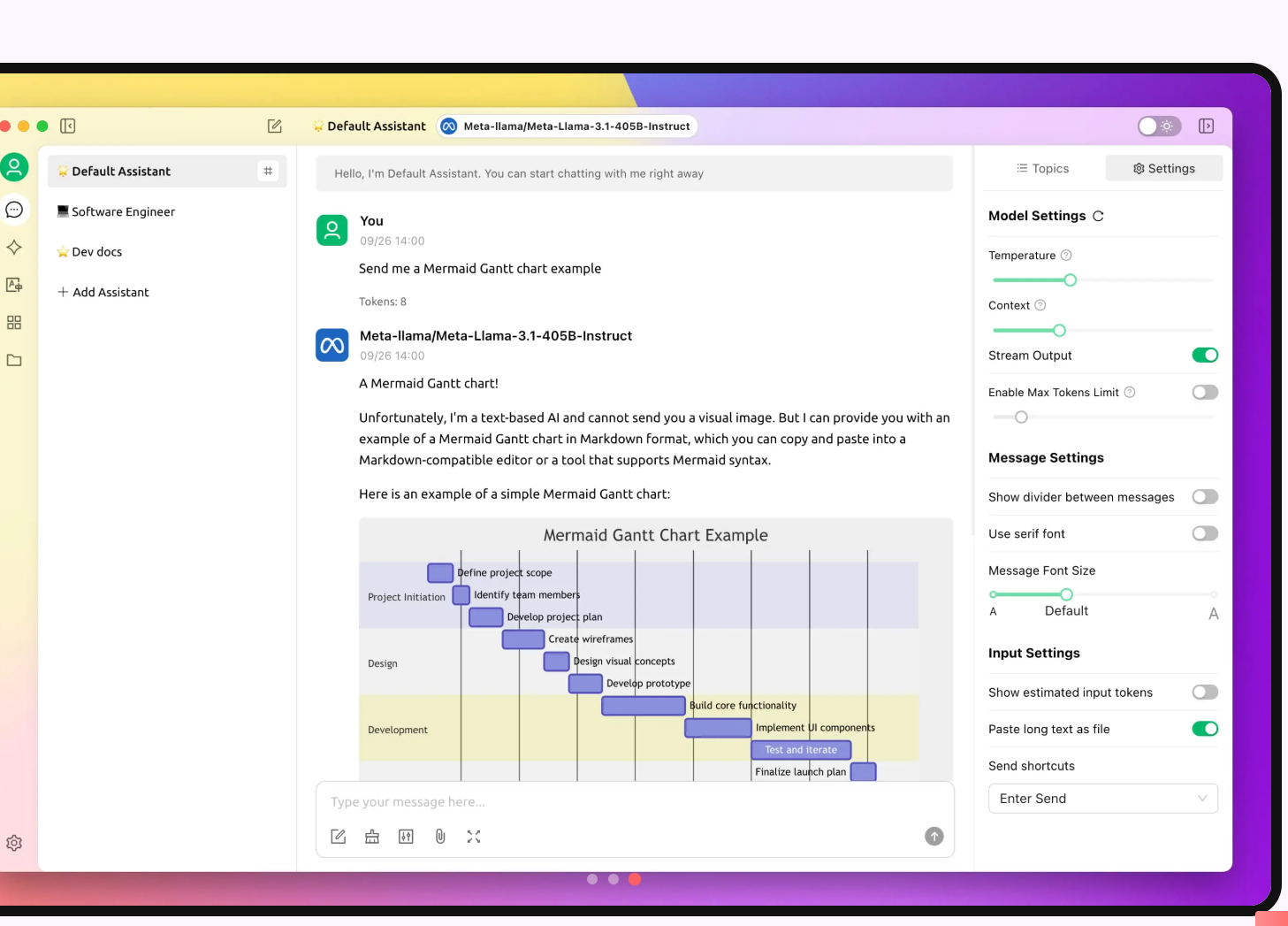

- Cherry Studio: User-friendly, with a clean interface and a rich feature set. It supports a local knowledge base and ships with excellent built-in “agents” that are detailed and specialized across many fields, such as “technical writer,” “DevOps engineer expert,” “viral copywriter,” and “fitness trainer expert.”

- AnythingLLM: A more businesslike interface, with a built-in embedding model and vector database, and a powerful local knowledge base.

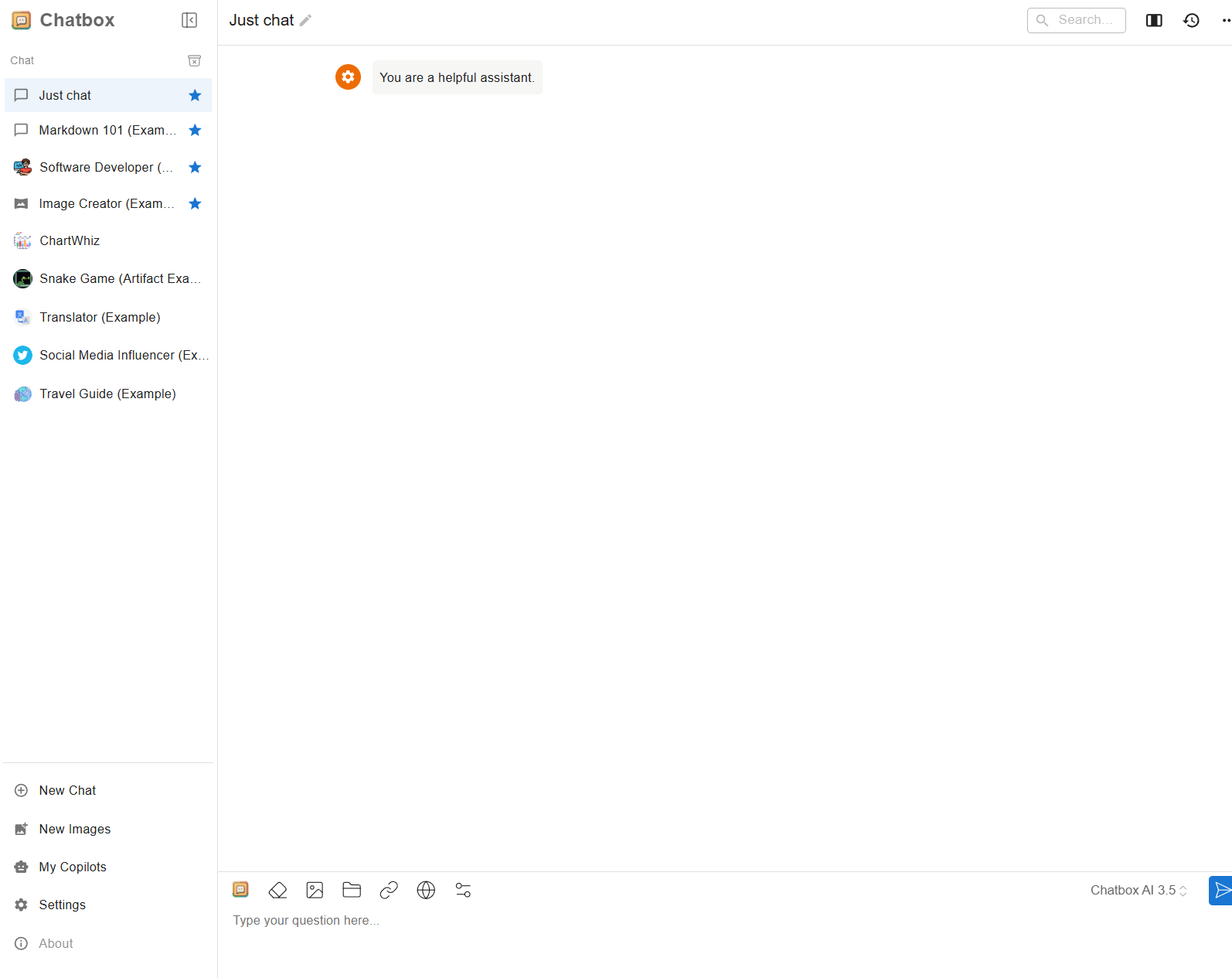

- Chatbox: Focuses on conversational AI and is relatively simple. It can read documents but does not offer a local knowledge base. Its built-in “My Partner” presets are fewer but well chosen, such as “Make Charts” and “Travel Companion.”

Cherry Studio: “All-round Commander” for multi-model collaboration

Unique advantages:

Freely switch between multiple models: Supports mainstream cloud model providers such as OpenAI, DeepSeek, and Gemini, and integrates with a locally deployed Ollama, so you can call cloud and local models flexibly.

300+ built-in preconfigured assistants: These cover scenarios such as writing, programming, and design. Users can customize assistant roles and functions, and compare the outputs of multiple models in the same conversation.

Multi-modal document processing: Supports multiple formats such as text, PDF, and images, integrates WebDAV file management, code highlighting, and Mermaid chart visualization to meet complex data processing needs.
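To make the multi-model comparison idea above more concrete, here is a minimal sketch that sends one prompt to several backends and collects the replies side by side. This is not Cherry Studio's code: the `Backend` type, the `compare` function, and the two stand-in model functions are hypothetical names made up for this illustration. In a real setup, each backend would wrap a cloud API call or a request to a local Ollama server.

```python
from typing import Callable, Dict

# A backend is any function that takes a prompt and returns a reply.
# (Hypothetical type for this sketch, not part of any tool's API.)
Backend = Callable[[str], str]


def compare(prompt: str, backends: Dict[str, Backend]) -> Dict[str, str]:
    """Send the same prompt to every backend and collect replies by name."""
    results: Dict[str, str] = {}
    for name, ask in backends.items():
        try:
            results[name] = ask(prompt)
        except Exception as exc:
            # One failing model should not hide the others' answers.
            results[name] = f"[error: {exc}]"
    return results


# Stand-in backends so the sketch runs without any network access.
def fake_cloud_model(prompt: str) -> str:
    return f"cloud answer to: {prompt}"


def fake_local_model(prompt: str) -> str:
    return f"local answer to: {prompt}"


if __name__ == "__main__":
    replies = compare(
        "Explain WebDAV in one sentence.",
        {"cloud": fake_cloud_model, "local": fake_local_model},
    )
    for name, text in replies.items():
        print(f"{name}: {text}")
```

Catching errors per backend means a cloud outage or an unloaded local model shows up as one labeled entry instead of aborting the whole comparison.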

Applicable scenarios:

Developers who need to compare and debug code, or generate documentation, across multiple models;

Creators who need to switch quickly between different styles of text generation;

Enterprises that need to balance data privacy (local models) with high-performance cloud models.

Selection advice:

Suitable for technical teams, multitaskers, or users who have high requirements for data privacy and functional scalability.

AnythingLLM: The “intelligent brain” of enterprise-level knowledge bases

Unique advantages:

Intelligent document Q&A: Supports indexing files in formats such as PDF and Word, uses vector search to locate the relevant document fragments, and then passes them to a large model to generate contextually relevant answers.

Flexible local deployment: It can connect to local inference engines such as Ollama without relying on cloud services, which keeps sensitive data on your own machine.

Integrated search and generation: The knowledge base is searched first, and the model is then called to generate the answer from what was found, which helps keep answers grounded in your own documents.
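The search-then-generate flow described above can be sketched in a few lines. This is only a toy illustration, not AnythingLLM's implementation: the function names (`embed`, `cosine`, `retrieve`, `build_prompt`) are hypothetical, and simple word counts stand in for a real embedding model and vector database. The point is the order of operations: score the chunks against the question, keep the best ones, and put them into the prompt that goes to the model.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system uses an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return the top_k chunks most similar to the question, best first."""
    q = embed(question)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def build_prompt(question: str, chunks: List[str], top_k: int = 2) -> str:
    """Search first, then assemble the prompt that would be sent to the model."""
    context = "\n".join(f"- {c}" for _, c in retrieve(question, chunks, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    handbook = [
        "Employees get 15 days of annual leave.",
        "The VPN client must be updated monthly.",
        "Expense reports are due by the 5th.",
    ]
    print(build_prompt("How many days of annual leave do employees get?", handbook, top_k=1))
```

The string returned by `build_prompt` is what would then be handed to a local or cloud model for the generation step.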

Applicable scenarios:

Automated Q&A over internal enterprise document libraries (such as employee handbooks and technical documents);

Summarizing and extracting key information from documents in academic research;

Individual users managing large collections of notes or e-books.

Selection advice:

Recommended for enterprises that rely on document processing, research institutions, or teams that need to build private knowledge bases.

Chatbox: minimalist “lightweight chat expert”

Unique advantages:

Zero configuration for a quick experience: Ready to use after installation, with a simple ChatGPT-like interface that lets novice users get started quickly.

Local model friendly: Supports local inference tools such as Ollama, and can run open-source models without complex network configuration.

Lightweight and high performance: It consumes few resources and runs smoothly even in a CPU-only environment, making it suitable for low-spec devices.
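For readers curious what "supporting Ollama" looks like under the hood, the sketch below builds a chat request for a local Ollama server using only the Python standard library. It is not Chatbox's code. It assumes Ollama is running on its default address (`http://localhost:11434`) and that the model named in the example has already been pulled; `build_chat_request` and `ask` are hypothetical helper names, and `deepseek-r1:7b` is just an example model tag.

```python
import json
import urllib.request

# Ollama's default local chat endpoint (assumes a default installation).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(model: str, prompt: str, url: str = OLLAMA_CHAT_URL) -> urllib.request.Request:
    """Build (but do not send) a single-turn, non-streaming chat request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON reply instead of a stream
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the request and return the reply text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=120) as resp:
        return json.loads(resp.read())["message"]["content"]


# Example call, assuming the model has been pulled locally:
# print(ask("deepseek-r1:7b", "Say hello in five words."))
```

Separating `build_chat_request` from `ask` makes it easy to inspect the payload without a server running.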

Applicable scenarios:

Individual users who want to quickly test what a local model can generate;

Developers who need to debug a model briefly or generate simple code snippets;

Educators who need a teaching demonstration tool.

Selection advice:

Suitable for individual developers, educators, or users who only need basic dialogue functions.

Ultimate selection guide: Match as needed and double your efficiency

Looking for versatility and extensibility? Choose Cherry Studio – multiple models, assistants, and format support to meet the needs of complex scenarios.

Focus on document and knowledge management? Choose AnythingLLM – enterprise-level search and Q&A to make AI “understand” your data.

Just need a lightweight tool? Choose Chatbox – out-of-the-box, minimalist design, and quick verification of ideas.

In 2025, there is a wide variety of AI tools to choose from. The essence of choosing a tool is “needs first.” Whether you are a technology geek, a business decision-maker, or a productivity seeker, one of these three tools can become your “digital add-on.” Pick the right tool and let AI truly empower you!