OpenAI has released its latest reasoning model, o3-mini, which is optimized for science, mathematics, and programming, and offers faster responses, higher accuracy, and lower cost.

Compared with its predecessor o1-mini, o3-mini reasons significantly better, especially on complex problems. According to OpenAI, testers preferred o3-mini’s answers 56% of the time, and the error rate fell by 39%. Starting today, ChatGPT Plus, Team, and Pro users can use o3-mini, and free users can also try some of its features.

So how much better is OpenAI o3-mini than another reasoning model, DeepSeek-R1?

This article first gives an overview of o3-mini’s highlights, then pulls the numbers for both models on each benchmark and charts them for a visual comparison. It also compares o3-mini’s price.

Core highlights

1. STEM optimization: excels in mathematics, programming, and science, and surpasses o1-mini most clearly in the high reasoning effort mode.

2. Developer features: supports function calling, structured outputs, and developer messages to meet the needs of production environments.

3. Fast response: 24% faster than o1-mini, with an average response time of 7.7 seconds per request.

4. Safety improvement: uses deliberative alignment to keep its output safe and reliable.

5. Cost-effective: reasoning capability and cost optimization go hand in hand, greatly lowering the barrier to using AI.
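To make the developer features in point 2 more concrete, here is a minimal sketch of what a request body for o3-mini might look like. It assumes the field names of OpenAI's Chat Completions API (`reasoning_effort`, a `developer` message role, `response_format`, `tools`). The tool `lookup_constant`, the schema `math_answer`, and the helper `build_o3_mini_request` are hypothetical names made up for illustration. The code only builds and prints the JSON and sends nothing.

```python
import json

# Reasoning effort levels mentioned in this article: low, medium, high.
VALID_EFFORTS = ("low", "medium", "high")


def build_o3_mini_request(question, effort="medium"):
    """Build a request body (a plain dict) without sending it anywhere."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [
            # Developer message: instructions from the application author.
            {"role": "developer", "content": "Answer briefly and show your steps."},
            {"role": "user", "content": question},
        ],
        # Structured output: ask for JSON matching a (hypothetical) schema.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "math_answer",
                "strict": True,
                "schema": {
                    "type": "object",
                    "properties": {
                        "answer": {"type": "string"},
                        "steps": {"type": "array", "items": {"type": "string"}},
                    },
                    "required": ["answer", "steps"],
                    "additionalProperties": False,
                },
            },
        },
        # Function calling: one (hypothetical) tool the model may call.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "lookup_constant",
                    "description": "Look up a physical constant by name.",
                    "parameters": {
                        "type": "object",
                        "properties": {"name": {"type": "string"}},
                        "required": ["name"],
                    },
                },
            }
        ],
    }


payload = build_o3_mini_request("What is 12 * 13?", effort="high")
print(json.dumps(payload, indent=2))
```

In practice you would pass these fields to an API client instead of printing them. Check the current API reference before relying on any field name here.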

Comparison

To highlight its own lineup, OpenAI’s official blog only compares o3-mini with OpenAI’s other models. The table below therefore combines data extracted from the DeepSeek R1 paper with data from the official OpenAI blog.

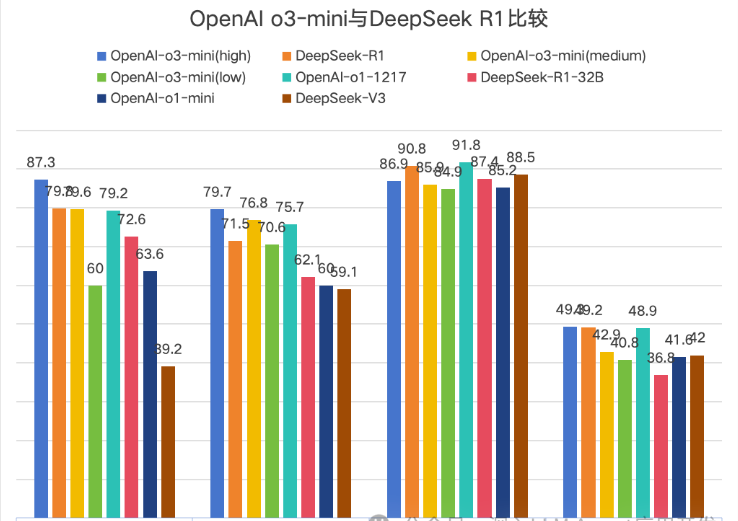

OpenAI lists o3-mini in three versions, low, medium, and high, which indicate the reasoning effort. Because DeepSeek reports results on MATH-500 while OpenAI reports results on the MATH dataset, that comparison has been left out here.

A chart makes the comparison easier to see. Codeforces has been left out of the chart because its values are too large to plot alongside the other benchmarks. Even so, the Codeforces numbers show that o3-mini at high reasoning effort does not lead by much.

↑ Benchmarks in the chart, in order: 1. AIME 2024, 2. GPQA Diamond, 3. MMLU, 4. SWE-bench Verified

The chart covers four benchmarks. o3-mini (high) generally leads, but only by a small margin.

Price

Prices are in USD per 1M tokens.

| Model | Input price | Input price (cache hit) | Output price |
| --- | --- | --- | --- |
| o3-mini | $1.10 | $0.55 | $4.40 |
| o1 | $15.00 | $7.50 | $60.00 |
| DeepSeek R1 | $0.55 | $0.14 | $2.19 |
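To see the gaps at a glance, the ratios can be worked out directly from the table. The snippet below is only a small sketch. The prices are copied from the table above, while the helper names `price_ratio` and `workload_cost` and the example workload of 1M input plus 1M output tokens are my own assumptions for illustration.

```python
# Prices in USD per 1M tokens, copied from the table above.
PRICES = {
    "o3-mini": {"input": 1.10, "cached_input": 0.55, "output": 4.40},
    "o1": {"input": 15.00, "cached_input": 7.50, "output": 60.00},
    "DeepSeek R1": {"input": 0.55, "cached_input": 0.14, "output": 2.19},
}


def price_ratio(model, baseline, kind):
    """How many times more expensive `model` is than `baseline` for one price kind."""
    return PRICES[model][kind] / PRICES[baseline][kind]


def workload_cost(model, input_tokens, output_tokens):
    """Cost in USD of a workload, ignoring cache hits."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


for kind in ("input", "output"):
    for model in ("o1", "o3-mini"):
        ratio = price_ratio(model, "DeepSeek R1", kind)
        print(f"{model} vs DeepSeek R1 ({kind}): {ratio:.1f}x")

# Hypothetical workload: 1M input tokens and 1M output tokens.
for model in PRICES:
    print(f"{model}: ${workload_cost(model, 1_000_000, 1_000_000):.2f}")
```

On these numbers, o1 comes out at roughly 27 times the price of R1, and o3-mini at about 2 times.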

Summary

After DeepSeek R1 set off the “DeepSeek panic” in the United States, OpenAI was the first to feel threatened, and this is especially evident in the pricing of its new model o3-mini.

When OpenAI o1 was first released, its high price put pressure on many developers and users. The arrival of DeepSeek R1 gave everyone more choices. The shift from a roughly 27-fold price gap between o1 and R1 to o3-mini costing about twice as much as DeepSeek R1 shows the impact DeepSeek R1 has had on OpenAI.

However, ChatGPT free users can only experience o3-mini in a limited way, while DeepSeek’s Deep Thinking is currently available to all users. I also look forward to OpenAI bringing out more leading AI models while reducing the cost of use for users.

Speaking from my own experience as a blogger using R1, I would like to say that R1’s Deep Thinking always broadens my thinking. I recommend that everyone use it more to think through problems~