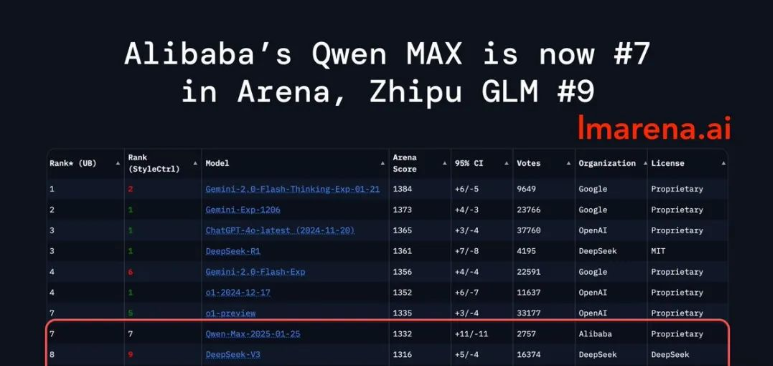

Another Chinese model has just joined the Chatbot Arena leaderboard: Alibaba’s Qwen2.5-Max, which surpassed DeepSeek-V3 and ranked seventh overall with a score of 1332.

It also surpassed models such as Claude 3.5 Sonnet and Llama 3.1 405B in one fell swoop.

It is especially strong in coding and math, where it ties for first place with the full o1 and DeepSeek-R1.

Chatbot Arena is a large model evaluation platform launched by LMSYS Org. It currently hosts more than 190 models. Users are shown two anonymous models side by side for blind testing and vote on which one performs better, based on their own real conversations.

For this reason, the Chatbot Arena LLM Leaderboard is widely regarded as one of the most authoritative and closely watched arenas for the world’s top large models.
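To make the blind pairwise voting concrete, here is a minimal sketch of how such votes could be turned into a ranking with an Elo-style update. This is an illustration under stated assumptions, not the leaderboard's actual scoring method, which the article does not describe. The function names, the `k` step size, the starting rating of 1000, and the model names are all hypothetical.

```python
from collections import defaultdict


def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def rate_from_votes(votes, k=4.0, initial=1000.0):
    """Turn blind pairwise votes into ratings.

    votes: iterable of (model_a, model_b, winner), winner in {"a", "b", "tie"}.
    k and initial are illustrative assumptions, not values from the article.
    """
    ratings = defaultdict(lambda: initial)
    outcome = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in votes:
        ra, rb = ratings[model_a], ratings[model_b]
        ea = expected_score(ra, rb)   # expected result for model_a
        sa = outcome[winner]          # actual result for model_a
        # Zero-sum update: whatever A gains, B loses.
        ratings[model_a] = ra + k * (sa - ea)
        ratings[model_b] = rb - k * (sa - ea)
    return dict(ratings)


# Hypothetical votes between two placeholder models.
votes = [("model-x", "model-y", "a")] * 7 + [("model-x", "model-y", "b")] * 3
ratings = rate_from_votes(votes)
leaderboard = sorted(ratings.items(), key=lambda item: item[1], reverse=True)
```

Because each user sees the models anonymously, a vote only reflects which answer they preferred. The rating then summarizes many such preferences into a single score per model.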

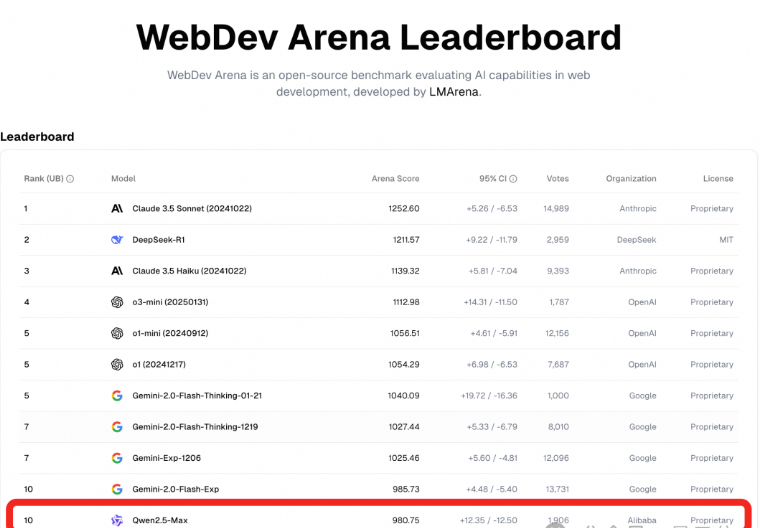

Qwen2.5-Max also broke into the top ten on the newly launched WebDev leaderboard for web application development.

LMSYS’s official comment: Chinese AI is rapidly closing the gap!

Netizens who have tried it themselves say that Qwen’s performance is more stable.

Some people even say that Qwen will soon replace all ordinary models in Silicon Valley.

First place in four individual categories

On the overall leaderboard, the top two spots went to the Google Gemini family, with GPT-4o and DeepSeek-R1 tied for third place.

Qwen2.5-Max tied for seventh place with o1-preview, slightly behind the full o1.

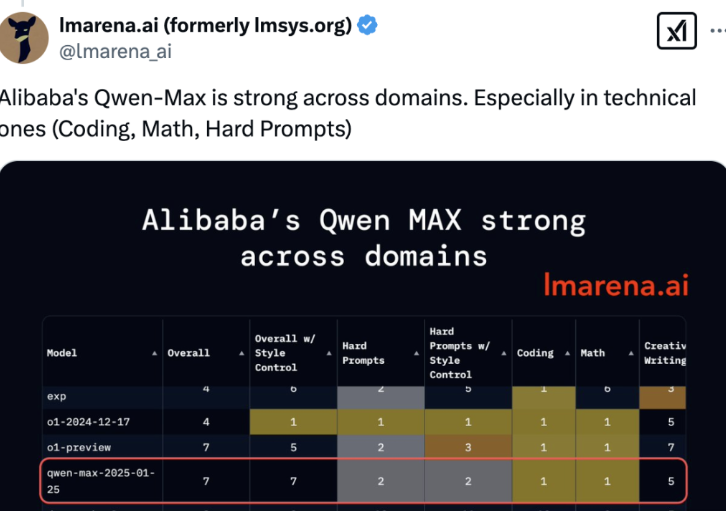

Next is Qwen2.5-Max’s performance in each individual category.

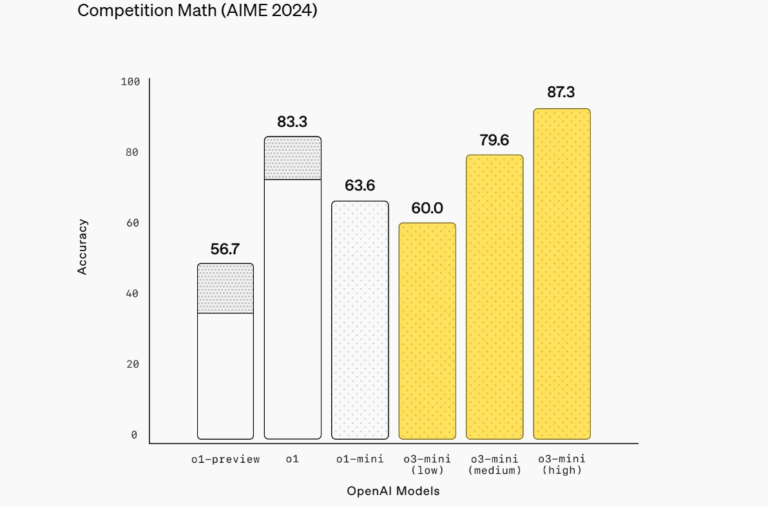

In the more logic-heavy math and code categories, Qwen2.5-Max outscored o1-mini and tied for first place with the full o1 and DeepSeek-R1.

And among the models tied for first place on the math list, Qwen2.5-Max is the only non-reasoning model.

A closer look at the head-to-head records shows that Qwen2.5-Max has a 69% win rate against the full o1 in the code category.

In the hard prompts category, Qwen2.5-Max and o1-preview tied for second place. Restricted to English, it ranks first, on par with o1-preview, DeepSeek-R1, and others.

Qwen2.5-Max is also tied for first place with DeepSeek-R1 in multi-turn dialogue, and ranks third on long text (at least 500 tokens), surpassing o1-preview.

Alibaba also reported Qwen2.5-Max’s performance on several classic benchmarks in its technical report.

In the comparison of instruct models, Qwen2.5-Max matches or exceeds GPT-4o and Claude 3.5 Sonnet on benchmarks such as Arena-Hard (which approximates human preferences) and MMLU-Pro (university-level knowledge).

In the open-source base model comparison, Qwen2.5-Max also outperformed DeepSeek-V3 across the board and was well ahead of Llama 3.1 405B.

As for the base model, Qwen2.5-Max also showed a significant advantage on most benchmarks (the base models of closed-source models are not accessible, so only open-source models can be compared).

Strong at code and reasoning, with Artifacts support

After Qwen2.5-Max was launched, a large number of netizens came to test it.

They found that it excels in areas such as code and reasoning.

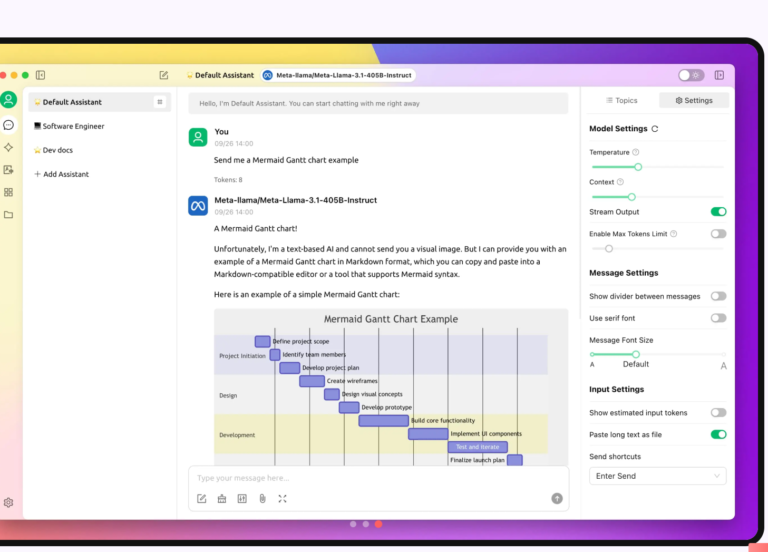

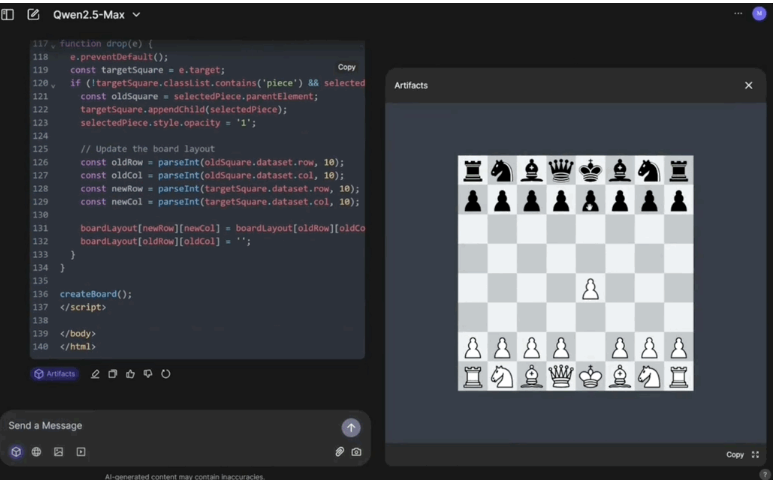

For example, ask it to write a chess game in JavaScript.

Thanks to Artifacts, a small game built from a one-sentence prompt can be played right away.

The code it generates is often easier to read and use.

Qwen2.5-Max is fast and accurate when reasoning through complex prompts:

Your team has 3 steps to handle customer requests:

Data collection (stage A): 5 minutes per request.

Processing (stage B): 10 minutes per request.

Verification (stage C): 8 minutes per request.

The team currently works sequentially, but you are considering a parallel workflow. If you assign two people to each stage and allow for a parallel workflow, the output per hour will increase by 20%. However, adding a parallel workflow will cost 15% more in terms of operating overhead. Considering the time and cost, should you use a parallel workflow to optimize efficiency?

Qwen2.5-Max completes the entire reasoning process in less than 30 seconds, clearly dividing it into five steps: analysis of the current workflow, analysis of the parallel workflow, cost implications, cost-efficiency trade-offs, and conclusion.

The final conclusion is quickly reached: parallel workflows should be used.
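The arithmetic behind that conclusion can be checked with a short sketch. This is not the model's actual output. It assumes that the sequential baseline handles one request at a time through all three stages, and that the 15% overhead applies to total operating cost per hour. The function and key names are hypothetical.

```python
# Stage durations in minutes, taken from the prompt above.
STAGE_MINUTES = {"A": 5, "B": 10, "C": 8}


def sequential_throughput(stage_minutes):
    """Requests per hour when each request passes through every stage in turn."""
    return 60.0 / sum(stage_minutes.values())


def compare_workflows(stage_minutes, output_gain=0.20, overhead_increase=0.15):
    """Compare sequential and parallel workflows using the prompt's percentages."""
    base = sequential_throughput(stage_minutes)
    parallel = base * (1.0 + output_gain)
    # Cost per request relative to the baseline (baseline = 1.0):
    # hourly cost grows by overhead_increase, hourly output by output_gain.
    cost_ratio = (1.0 + overhead_increase) / (1.0 + output_gain)
    return {
        "sequential_per_hour": base,
        "parallel_per_hour": parallel,
        "cost_per_request_ratio": cost_ratio,
        "parallel_is_better": cost_ratio < 1.0,
    }


result = compare_workflows(STAGE_MINUTES)
```

Under these assumptions, throughput rises from roughly 2.61 to 3.13 requests per hour, while cost per request falls to about 0.96 of the baseline, since output grows faster than overhead. That is consistent with recommending the parallel workflow.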

Compared to DeepSeek-V3, which is also a non-reasoning model, Qwen2.5-Max gives a more concise and faster response.

Or ask it to generate a rotating sphere made up of ASCII digits, where the digits closest to the viewer are pure white and those farther away gradually fade to grey, all on a black background.

Counting the number of specific letters in a word is even easier.

If you want to try it yourself, Qwen2.5-Max is already live on the Qwen Chat platform and can be used for free.

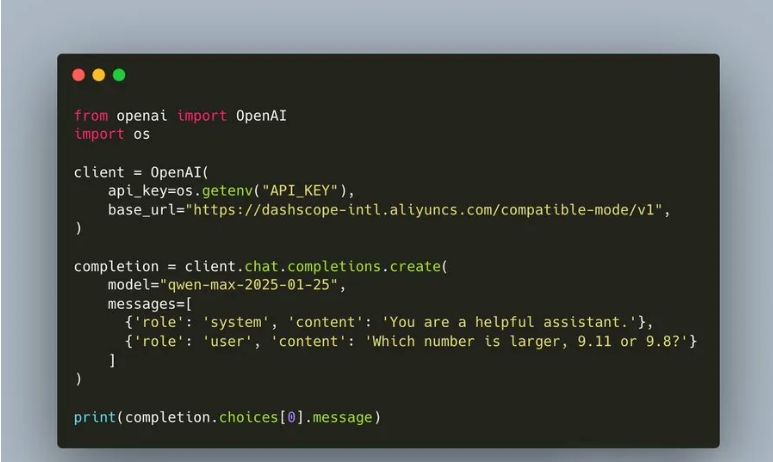

Enterprise users can call the Qwen2.5-Max model API on Alibaba Cloud Bailian.