DeepSeek R1 has received a minor version upgrade, and the current version is DeepSeek-R1-0528. To try it, open the DeepSeek website or app and enable “Deep Thinking” in the chat interface.

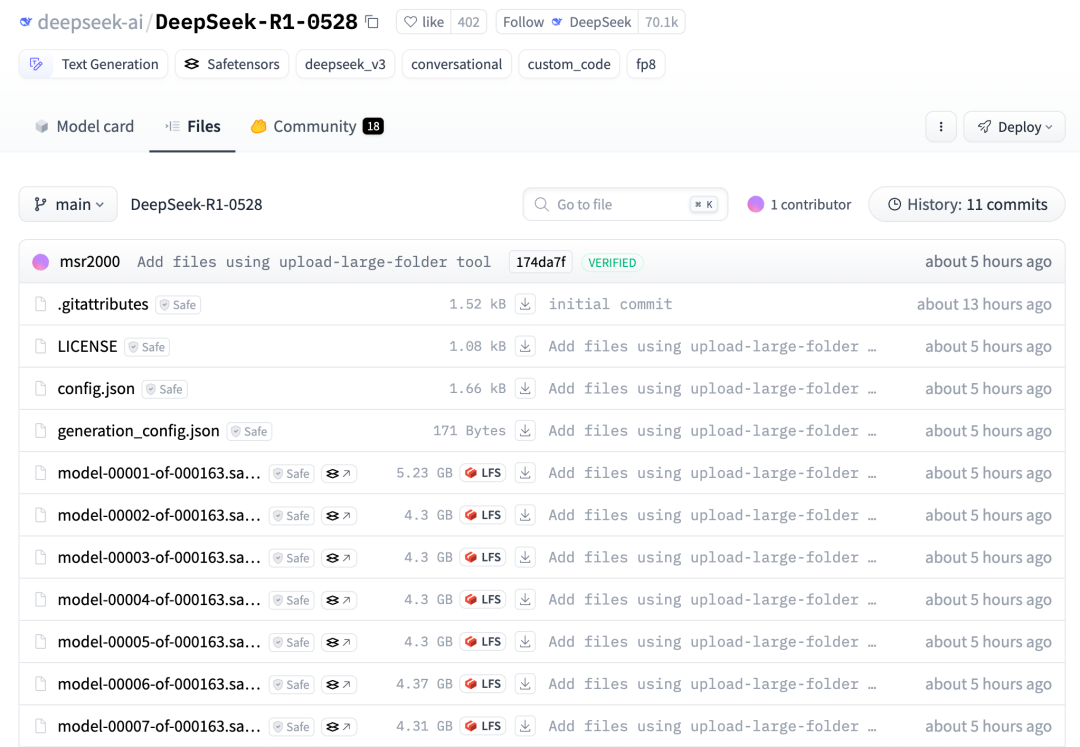

The DeepSeek-R1-0528 model weights have been uploaded to Hugging Face.

Over the past four months, DeepSeek-R1 has evolved considerably: its coding ability has improved dramatically, and it now thinks for significantly longer. It may not be the DeepSeek-R2 everyone was expecting, but the improvements in DeepSeek-R1-0528 are substantial.

Early reports claimed that the new model was trained on DeepSeek-V3-0324. As detailed below, however, R1-0528 actually uses the same DeepSeek-V3 Base model as the original R1.

Here is a quick look at the key updates in this release:

| Capability | DeepSeek-R1 | DeepSeek-R1-0528 |
|---|---|---|
| Maximum context | 64K (API) | 64K (API); 128K (open-source weights) |
| Code generation | Close to OpenAI o1 on LiveCodeBench | Close to o3 |
| Reasoning depth | Complex questions need to be broken into segmented prompts | Supports 30–60 minutes of deep thinking |
| Language naturalness | Rather verbose | Compact structure; writing style similar to o3 |
| Usage cost | Open-source, or API at $0.5/M tokens | Open-source, or API at $0.5/M tokens |

Enhanced deep thinking capabilities

DeepSeek-R1-0528 is still built on the DeepSeek V3 Base model released in December 2024, but more compute was invested in post-training, significantly increasing the model’s thinking depth and reasoning ability.

The updated R1 model achieves the best results among Chinese models on multiple benchmarks, including mathematics, programming, and general logic, and its overall performance is now close to leading international models such as OpenAI o3 and Gemini 2.5 Pro.

- Mathematics and programming: On the AIME 2025 math competition, accuracy rose from 70% in the previous version to 87.5%. On the LiveCodeBench code-generation benchmark, the model reaches a pass@1 score of 73.3%, nearly on par with OpenAI’s o3-high.

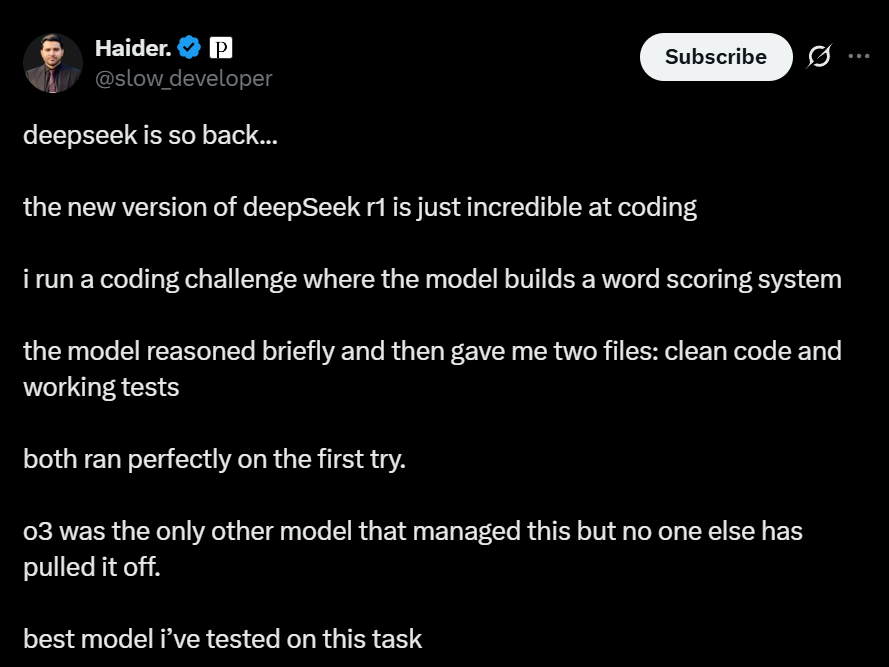
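For readers unfamiliar with the metric: pass@1 is the probability that a single generated solution passes a problem’s tests. Benchmarks usually estimate it by sampling n solutions per problem and counting the c that pass, often with the unbiased pass@k estimator introduced in OpenAI’s Codex/HumanEval work. The sketch below implements that generic estimator; it is an illustration, not LiveCodeBench’s or DeepSeek’s actual evaluation harness, and the function names are our own.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every subset of k samples must contain at least one correct sample.
        return 1.0
    # 1 - P(all k chosen samples are incorrect)
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems, given (n, c) for each problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# With k = 1 the estimator reduces to c / n, the per-problem success rate.
print(mean_pass_at_k([(4, 4), (4, 0)]))  # 0.5
```

Averaging per-problem estimates, rather than pooling all samples, keeps each problem equally weighted.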

User tests show that the new DeepSeek-R1 is astonishingly good at programming!

AI expert “karminski-dentist” tested DeepSeek-R1-0528 and Claude 4 Sonnet using the same prompt and found that:

Whether it was the diffuse reflection of light on a wall, the direction of a ball’s movement after impact, or the visual appeal of the control panel, R1’s output was clearly better.

User “Haider.” asked the model to build a word-scoring system. After briefly considering the task, R1 immediately produced two files, one containing the code and one containing tests.

By the user’s account, o3 had previously been the only model able to complete this task; R1 is now the best model for it.

What makes R1’s performance remarkable is that both returned files ran correctly on the first try, without any edits or retries, which is extremely rare.

Previously, most models either failed on edge cases, overcomplicated the solution, or lacked adequate test coverage.

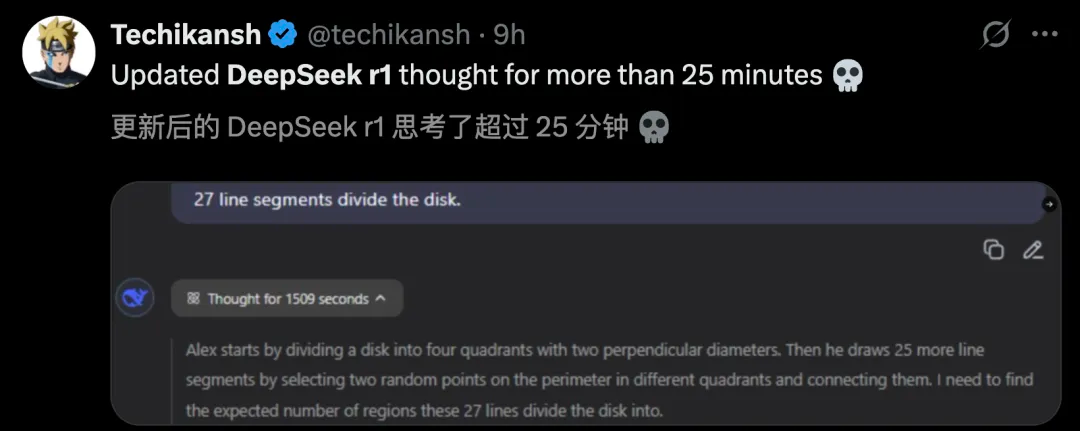

- Reasoning depth: Thinking time for a single task can extend to 30–60 minutes, with much stronger performance on complex problems (e.g., physics simulations and multi-step logic puzzles).

Longer thinking time has become the most discussed feature online. Some users reported that R1’s thinking time exceeded 25 minutes in real-world tests.

Additionally, this appears to be the only model that consistently answers “What is 9.9 minus 9.11?” correctly.
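For reference, the correct answer is 0.79. A failure mode widely reported for language models is treating 9.11 as larger than 9.9, as if they were version numbers. A quick exact check with Python’s standard `decimal` module, which avoids binary floating-point rounding noise:

```python
from decimal import Decimal

# Constructing Decimals from strings keeps the values exact (no binary rounding).
a, b = Decimal("9.9"), Decimal("9.11")

print(a > b)  # True: 9.90 is larger than 9.11
print(a - b)  # 0.79
```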

DeepSeek-R1-0528 performs strongly across all evaluation datasets

Compared to the previous version of R1, the new model shows significant improvements in complex reasoning tasks. For example, in the AIME 2025 test, the new model’s accuracy rate increased from 70% to 87.5%.

This improvement comes from deeper reasoning: on the AIME 2025 test set, the old model used an average of 12K tokens per question, while the new model uses an average of 23K, indicating more detailed and thorough thinking during problem solving.
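The AIME 2025 figures above can be put side by side with a little arithmetic. This sketch only restates the quoted numbers, treating “12K” and “23K” as 12,000 and 23,000 tokens (an assumption about how they were rounded): accuracy rose by 17.5 percentage points while average reasoning length roughly doubled.

```python
# AIME 2025 figures quoted in the text.
old_accuracy, new_accuracy = 70.0, 87.5  # percent correct
old_tokens, new_tokens = 12_000, 23_000  # average tokens per question (assumed 1K = 1,000)

accuracy_gain = new_accuracy - old_accuracy  # percentage points
token_ratio = new_tokens / old_tokens        # how many times more tokens are spent

print(f"+{accuracy_gain:.1f} points, {token_ratio:.2f}x tokens per question")
# +17.5 points, 1.92x tokens per question
```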

Additionally, the DeepSeek team distilled the reasoning chains of DeepSeek-R1-0528 to fine-tune Qwen3-8B Base, producing DeepSeek-R1-0528-Qwen3-8B.

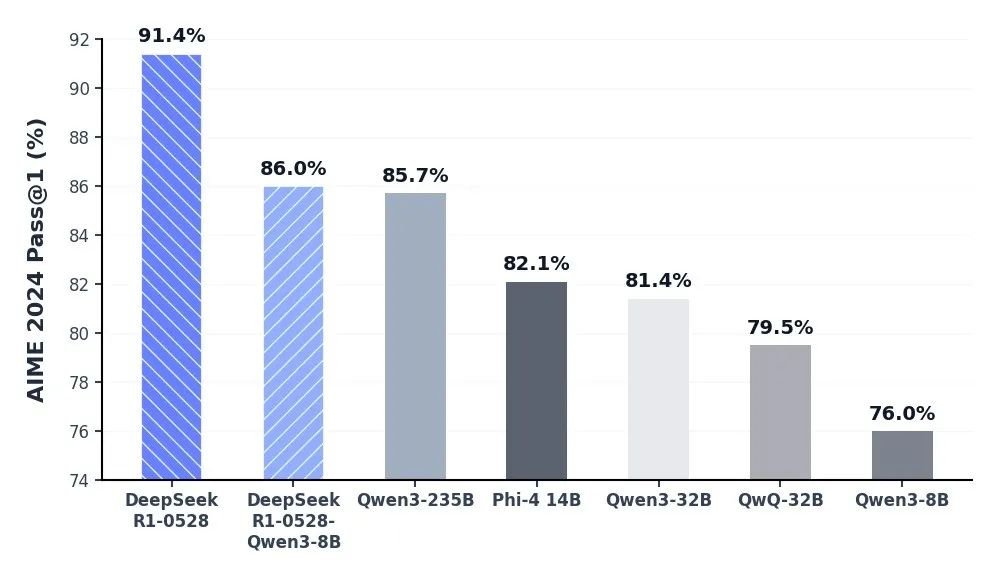

On the AIME 2024 math benchmark, this 8B model ranks second only to DeepSeek-R1-0528 itself, outperforming Qwen3-8B (+10.0%) and matching Qwen3-235B.
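The announcement does not describe the distillation pipeline in detail. As a hedged illustration only: distilling reasoning chains usually means collecting the teacher’s outputs (chain of thought plus final answer) and turning them into supervised fine-tuning examples for the student, here Qwen3-8B Base. The sketch assumes the teacher’s reasoning is wrapped in `<think>...</think>` tags, as R1-style models emit, and that only samples with verified-correct answers are kept; the record layout, the `is_correct` checker, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TeacherSample:
    prompt: str
    reasoning: str  # teacher's chain of thought
    answer: str     # teacher's final answer


def to_sft_record(sample: TeacherSample) -> dict:
    """Hypothetical chat-style SFT record: the student learns reasoning + answer."""
    target = f"<think>\n{sample.reasoning}\n</think>\n\n{sample.answer}"
    return {
        "messages": [
            {"role": "user", "content": sample.prompt},
            {"role": "assistant", "content": target},
        ]
    }


def build_dataset(samples: list[TeacherSample],
                  is_correct: Callable[[TeacherSample], bool]) -> list[dict]:
    """Keep only samples whose final answer passes the (hypothetical) checker."""
    return [to_sft_record(s) for s in samples if is_correct(s)]


# Toy usage: reference answers act as the correctness check.
references = {"What is 2 + 3?": "5", "What is 2 * 3?": "6"}
samples = [
    TeacherSample("What is 2 + 3?", "2 plus 3 is 5.", "5"),
    TeacherSample("What is 2 * 3?", "2 times 3 is 5.", "5"),  # wrong, filtered out
]
dataset = build_dataset(samples, lambda s: references[s.prompt] == s.answer)
print(len(dataset))  # 1
```

Filtering on answer correctness is one common choice; the actual selection criteria DeepSeek used are not stated in the source.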

The reasoning chains of DeepSeek-R1-0528 should prove valuable both for academic research on reasoning models and for the industrial development of small models.

Some users praised DeepSeek-R1 for self-correcting its reasoning chains like o3 and for creatively building worlds like Claude.

Notably, DeepSeek-R1-0528 is open source, which marks a major victory for open-source models.

AIME 2024 comparison results for open-source models such as DeepSeek-R1-0528-Qwen3-8B

Other capability updates

- Fewer hallucinations: Compared with the previous version, the updated model reduces hallucination rates by 45–50% on tasks such as rewriting and polishing, summarization, and reading comprehension, delivering more accurate and reliable results.

- Creative writing: Building on the previous R1, the updated model has been further optimized for essays, fiction, and prose, generating longer, more structurally complete pieces in a style better aligned with human preferences.

- Tool invocation: DeepSeek-R1-0528 supports tool calls, though not during the thinking phase. On Tau-Bench it currently scores 53.5% on the airline domain and 63.9% on the retail domain, comparable to OpenAI o1-high but still behind o3-high and Claude 4 Sonnet.

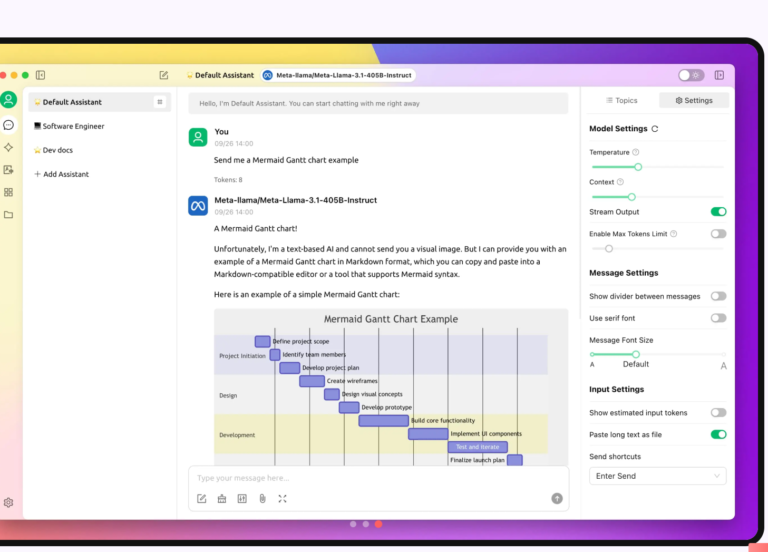

The example shows a web article summary generated through LobeChat using DeepSeek-R1-0528’s tool-calling capability. DeepSeek-R1-0528 has also been improved in areas such as front-end code generation and role-playing.
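The DeepSeek API is OpenAI-compatible, so a tool-calling request to R1 can be sketched with the standard `tools` format. The snippet below only builds a request body and parses a reply; it does not send anything. It assumes `deepseek-reasoner` as the model name for R1 on the official API, and the `fetch_webpage` tool (echoing the LobeChat summarization example) is hypothetical. Recall that tool calls are not supported during the thinking phase.

```python
import json

# Hypothetical tool definition in the OpenAI-compatible "tools" format.
FETCH_TOOL = {
    "type": "function",
    "function": {
        "name": "fetch_webpage",  # hypothetical tool name
        "description": "Fetch the text content of a web page.",
        "parameters": {
            "type": "object",
            "properties": {"url": {"type": "string", "description": "Page URL"}},
            "required": ["url"],
        },
    },
}


def build_tool_request(user_text: str) -> dict:
    """Request body offering the tool to the model (not sent here)."""
    return {
        "model": "deepseek-reasoner",  # assumed model name for R1
        "messages": [{"role": "user", "content": user_text}],
        "tools": [FETCH_TOOL],
    }


def extract_tool_calls(response: dict) -> list[tuple[str, dict]]:
    """Return (function name, parsed arguments) for each tool call in the reply."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    # Arguments arrive as a JSON-encoded string in this format.
    return [(c["function"]["name"], json.loads(c["function"]["arguments"])) for c in calls]
```

The caller would run the named function itself and send the result back in a follow-up message; that round trip is omitted here.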

The example shows a modern, minimalist word-card application built with HTML/CSS/JavaScript by calling DeepSeek-R1-0528 from a web page.

Key highlights of the DeepSeek-R1-0528 update

- Deep reasoning capabilities comparable to Google’s models

- Text generation optimization: more natural and better formatted

- Unique reasoning style: not only faster but also more rigorous

- Support for long thinking: a single task can take 30–60 minutes

We tested the new DeepSeek-R1 ourselves. Although it is billed as a “minor version” update, its performance has improved “epically.”

Programming in particular feels on par with, or even ahead of, Claude 4 and Gemini 2.5 Pro. All of our prompts were one-shot and needed no modifications, and the generated programs can be run directly in a web browser to see the results.

You can clearly feel that the thinking process of the new DeepSeek-R1 version is more stable.

You can ask DeepSeek-R1 anything you want to know. Even if your question is a bit nonsensical, it will still think it through carefully and organize its logic. We highly recommend trying the latest DeepSeek-R1 model.

API update information

The API has been updated, but the interface and calling conventions are unchanged. The new R1 API still returns the model’s thinking process and now also supports Function Calling and JSON Output.
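As a sketch of these features, the snippet below builds a JSON Output request and splits a reply into its thinking process and its parsed JSON answer. It assumes the OpenAI-compatible request format, the `deepseek-reasoner` model name, `response_format={"type": "json_object"}` for JSON Output (with the word “json” in the prompt, which DeepSeek’s documentation asks for), and a `reasoning_content` field on the returned message carrying the thinking process. Check the API guide before relying on any of these; no request is actually sent.

```python
import json


def build_json_request(question: str) -> dict:
    """Request body asking R1 for a JSON-formatted answer (not sent here)."""
    return {
        "model": "deepseek-reasoner",  # assumed model name for R1
        "messages": [
            # The word "json" appears in the prompt, as JSON Output expects.
            {"role": "system", "content": 'Answer in json, e.g. {"answer": "..."}.'},
            {"role": "user", "content": question},
        ],
        "response_format": {"type": "json_object"},
    }


def split_reply(response: dict) -> tuple[str, dict]:
    """Return (thinking process, parsed JSON answer) from a chat completion."""
    message = response["choices"][0]["message"]
    reasoning = message.get("reasoning_content") or ""  # assumed field name
    return reasoning, json.loads(message["content"])
```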

DeepSeek has also changed the meaning of the max_tokens parameter in the new R1 API: max_tokens now caps the total length of a single model output (including the thinking process), with a default of 32K and a maximum of 64K. API users should review their max_tokens settings promptly so that outputs are not cut off early.
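Because max_tokens now covers the thinking process as well as the final answer, a long reasoning trace can use up the budget before the answer appears. A minimal client-side guard might look like this. It treats “32K” and “64K” as 32,768 and 65,536 tokens (an assumption; check the API guide for the exact limits) and assumes truncation is signalled by the OpenAI-compatible `finish_reason` value `"length"`.

```python
from typing import Optional

DEFAULT_MAX_TOKENS = 32 * 1024  # assumed meaning of the documented "32K" default
LIMIT_MAX_TOKENS = 64 * 1024    # assumed meaning of the documented "64K" maximum


def choose_max_tokens(requested: Optional[int] = None) -> int:
    """Pick a max_tokens value (thinking + answer) within the documented range."""
    if requested is None:
        return DEFAULT_MAX_TOKENS
    if requested < 1:
        raise ValueError("max_tokens must be positive")
    return min(requested, LIMIT_MAX_TOKENS)


def was_truncated(response: dict) -> bool:
    """True if the output stopped because it hit the max_tokens budget."""
    return response["choices"][0].get("finish_reason") == "length"
```

If `was_truncated` fires, raising max_tokens (up to the maximum) is usually the first thing to try.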

For detailed instructions on using the R1 model, see the DeepSeek R1 API guide:

After this R1 update, the context length on the official website, mini program, app, and API remains 64K. Users who need a longer context can call the open-source R1-0528 model, which supports 128K, through third-party platforms.
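When choosing between the official API and a third-party host of the open-source weights, a rough check is whether the prompt plus the output budget fits the context window. The sketch below uses the 64K and 128K figures from the text (read as 65,536 and 131,072 tokens, an assumption) and assumes the window must hold both input and output; the deployment labels are hypothetical.

```python
from typing import Optional

# Context windows quoted in the text; 1K taken as 1,024 tokens (assumption).
CONTEXT_LIMITS = {
    "official_api": 64 * 1024,  # website, app, mini program, API
    "open_source": 128 * 1024,  # R1-0528 weights via third-party platforms
}


def fits(prompt_tokens: int, max_tokens: int, limit: int) -> bool:
    """Assume the window must hold the prompt plus the full output budget."""
    return prompt_tokens + max_tokens <= limit


def pick_deployment(prompt_tokens: int, max_tokens: int) -> Optional[str]:
    """Prefer the official API; fall back to the 128K open-source model."""
    for name in ("official_api", "open_source"):
        if fits(prompt_tokens, max_tokens, CONTEXT_LIMITS[name]):
            return name
    return None  # too long for either window: trim the prompt or the budget
```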

Open source

DeepSeek-R1-0528 uses the same base model as the previous DeepSeek-R1; only the post-training method has been improved.

For private deployments, only the checkpoint and tokenizer_config.json (which contains the tool-calling changes) need to be updated. The model has 685B parameters (14B of which belong to the MTP layer), and the open-source version has a context length of 128K (the web, app, and API provide 64K).
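A quick back-of-the-envelope check of these figures: 685B total minus the 14B MTP (multi-token prediction) layer leaves 671B parameters in the main model. The sketch also estimates weight storage, assuming 1 byte per parameter if the weights are stored in FP8 and 2 bytes in BF16. It ignores KV cache, activations, and framework overhead, so a real deployment needs more memory than these numbers suggest.

```python
TOTAL_PARAMS = 685 * 10**9  # total parameters quoted for the checkpoint
MTP_PARAMS = 14 * 10**9     # parameters in the MTP layer

BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}


def weight_size_gb(num_params: int, dtype: str) -> float:
    """Weights only, in decimal gigabytes; excludes KV cache and runtime overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 10**9


main_model_params = TOTAL_PARAMS - MTP_PARAMS
print(main_model_params // 10**9)           # 671
print(weight_size_gb(TOTAL_PARAMS, "fp8"))  # 685.0
print(weight_size_gb(TOTAL_PARAMS, "bf16")) # 1370.0
```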