In the future, there will be more and more hardcore innovation. It may not be easy to understand now, because the entire social group needs to be educated by facts. When this society allows people who innovate hardcore to succeed, the collective mindset will change. We just need a bunch of facts and a process. — Liang Wenfeng, founder of DeepSeek

In recent days, DeepSeek has exploded in popularity around the world. But because the company is so low-key and has made no announcements, the public knows very little about this high-potential technology company, whether its founding background, business scope, or product lineup.

After sorting through all the materials, I wrote this article, which is probably the most complete historical overview of DeepSeek.

What is the background of the current AI players, what are they up to, and who are they recruiting?

This time last year, a friend from Huanfang Quant came to me and asked, “Do you want to build a large model in China?” I simply spent the afternoon drinking coffee instead. As expected, life comes down to choices.

The Huanfang Quant mentioned here (known in English as High-Flyer) is the investor in, and parent company of, DeepSeek.

A so-called “quant” is an investment institution that makes decisions by algorithm rather than by human judgment. Huanfang Quant is not an old firm: it was founded in 2015. By 2021, when it was six years old, its assets under management had exceeded 100 billion yuan, and it was hailed as one of China’s “four great quant kings”.

Liang Wenfeng, the founder of Huanfang and also of DeepSeek, is a “non-mainstream” financial leader born in the 1980s: he has never studied overseas, has never won an Olympiad competition, and graduated from the Department of Electronic Engineering at Zhejiang University, where he majored in artificial intelligence. He is a homegrown technology expert who keeps a low profile, “reading papers, writing code, and participating in group discussions” every day.

Liang Wenfeng does not have the habits of a traditional business owner, but is more like a pure “tech geek”. Many industry insiders and DeepSeek researchers have given Liang Wenfeng extremely high praise: “someone who has both strong infra engineering capabilities and model research capabilities, and can also mobilize resources,” “someone who can make accurate judgments from a high level, but also excel at the details over frontline researchers,” and also has “a terrifying learning ability.”

Long before DeepSeek was founded, Huanfang had already begun making long-term plans in AI. In May 2023, Liang Wenfeng said in an interview with Darksurge: “After OpenAI released GPT-3 in 2020, the direction of AI development became very clear, and computing power would become a key element; but even in 2021, when we invested in the construction of Firefly 2, most people still could not understand it.”

Based on this judgment, Huanfang began to build its own computing infrastructure. “From the earliest 1 card, to 100 cards in 2015, 1,000 cards in 2019, and then 10,000 cards, this process happened gradually. Before a few hundred cards, we were hosted in an IDC. When the scale became larger, hosting could no longer meet the requirements, so we started building our own computer room.”

Later, Finance Eleven reported, “There are no more than five domestic companies with more than 10,000 GPUs, and in addition to a few major manufacturers, they also include a quantitative fund company called Huanfang.” It is generally believed that 10,000 Nvidia A100 chips is the computing-power threshold for training large models.

In a previous interview, Liang Wenfeng also mentioned an interesting point: many people would think there is an unknown business logic behind it, but in fact, it is mainly driven by curiosity.

DeepSeek’s first encounter

In an interview with Darksurge in May 2023, Liang Wenfeng was asked: “Not long ago, Huanfang announced its decision to build large models. Why would a quantitative fund do such a thing?”

His answer was resounding: “Our decision to build a large model has nothing to do with quantitative trading or finance. We have set up a new company called DeepSeek to do this. Many of the key members of the team at Huanfang work in artificial intelligence. At the time, we tried many scenarios and finally settled on finance, which is complex enough. General artificial intelligence may be one of the next most difficult things to achieve, so for us, it’s a question of how to do it, not why.”

Not driven by commercial interests or chasing market trends, but simply driven by a desire to explore AGI technology itself and a persistent pursuit of “the most important and difficult thing,” the name “DeepSeek” was officially confirmed in May 2023. On July 17, 2023, “Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd.” was incorporated.

On November 2, 2023, DeepSeek delivered its first answer: DeepSeek Coder, an open source large model for code. It comes in multiple sizes, including 1B, 7B, and 33B, and the open source release includes both the Base model and the instruction-tuned model.

At the time, among the open source models, Meta’s CodeLlama was the industry benchmark. However, once DeepSeek Coder was released, it demonstrated a multi-faceted leading position compared to CodeLlama: in code generation, HumanEval was 9.3% ahead, MBPP was 10.8% ahead, and DS-1000 was 5.9% ahead.

Keep in mind that DeepSeek Coder is a 7B model, while CodeLlama is a 34B model. In addition, after instruction tuning, the DeepSeek Coder model comprehensively surpassed GPT-3.5-Turbo.

Not only is code generation impressive, but DeepSeek Coder also shows off its muscles in mathematics and reasoning.

Three days later, on November 5, 2023, DeepSeek released a large amount of recruitment content through its WeChat public account, including positions such as AGI large model intern, data expert, data architecture talent, senior data collection engineer, deep learning research and development engineer, etc., and began to actively expand the team.

As Liang Wenfeng said, DeepSeek’s “must-have requirements” for talent recruitment are “passion and solid basic skills”, and he emphasized that “innovation requires as little intervention and management as possible, so that everyone has the freedom to make mistakes and try new things. Innovation often comes from within, not from deliberate arrangements, and it certainly doesn’t come from teaching.”

Models are frequently released, and open source is practiced

After DeepSeek Coder made a splash, DeepSeek turned its attention to the main battlefield: general language models.

On November 29, 2023, DeepSeek released its first general-purpose large language model, DeepSeek LLM 67B. This model is benchmarked against Meta’s LLaMA2 70B, a model of the same class, and performed better on nearly 20 public Chinese and English benchmarks. In particular, its reasoning, mathematics, and programming abilities (e.g., on HumanEval, MATH, C-Eval, and CMMLU) are outstanding.

DeepSeek LLM 67B also took the open source route and supports commercial use. To further demonstrate its sincerity and commitment to open source, DeepSeek took the unprecedented step of open-sourcing two models of different scales at once, 7B and 67B, and even published the nine checkpoints generated during training for researchers to download and use. This kind of “teach everything” approach is extremely rare in the open source community.

To evaluate the true capabilities of DeepSeek LLM 67B more comprehensively and objectively, the DeepSeek research team also carefully designed a series of “new questions” for “stress testing”. These cover demanding, highly discriminating tests such as Hungarian high school math exam questions, Google’s instruction-following evaluation set, and LeetCode weekly contest questions. The results were encouraging: DeepSeek LLM 67B showed striking out-of-sample generalization, and its overall performance even came close to that of GPT-4, the most advanced model at the time.

On December 18, 2023, DeepSeek open-sourced the text-to-3D model DreamCraft3D: it can generate high-quality 3D models from a single sentence, achieving the leap from 2D planes to 3D space in AIGC. For example, given the prompt “Running through the woods, a funny hybrid image of a pig’s head and the body of the Monkey King,” DreamCraft3D can output high-quality content.

In principle, the model first performs text-to-image generation, and then fills in the overall geometric structure based on the 2D concept image.

In the subjective evaluation that followed, more than 90% of users said that DreamCraft3D had an advantage in generation quality compared to previous generation methods.

On January 7, 2024, DeepSeek released the DeepSeek LLM 67B technical report. This 40+ page report contains many details of DeepSeek LLM 67B, including self-built scaling laws, complete practical details of model alignment, and a comprehensive AGI ability evaluation system.

On January 11, 2024, DeepSeek open-sourced DeepSeekMoE, the first MoE (mixture-of-experts) large model in China: a brand new architecture that supports Chinese and English and is free for commercial use. The MoE architecture was widely considered at the time to be the key to the performance breakthrough of OpenAI’s GPT-4. DeepSeek’s self-developed MoE architecture leads at multiple scales, such as 2B, 16B, and 145B, and its computational efficiency is also commendable.

On January 25, 2024, DeepSeek released the DeepSeek Coder technical report, which provides a comprehensive technical analysis of the model’s training data, training methods, and performance. The report shows that, for the first time, the team constructed repository-level code data and used topological sorting to resolve the dependencies between files, significantly strengthening long-range, cross-file understanding. On the training side, the Fill-In-Middle method was added, which greatly improved code completion.
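To make the idea of dependency-ordered, repository-level data concrete, here is a minimal sketch. It is not DeepSeek’s actual pipeline: the file names, the `deps` mapping, and the `# file:` separator are all hypothetical, and the only real API used is `graphlib.TopologicalSorter` from the Python standard library.

```python
from graphlib import TopologicalSorter

# Hypothetical repository: each file maps to the set of files it imports.
deps = {
    "app.py": {"models.py", "utils.py"},
    "models.py": {"utils.py"},
    "utils.py": set(),
}

# Hypothetical file contents.
sources = {
    "utils.py": "def helper(): ...",
    "models.py": "from utils import helper",
    "app.py": "from models import *",
}


def order_files(dependencies):
    """Return file names so that each file comes after everything it depends on."""
    return list(TopologicalSorter(dependencies).static_order())


def build_training_sample(dependencies, contents):
    """Concatenate file contents in dependency order into one long sample."""
    parts = [f"# file: {name}\n{contents[name]}" for name in order_files(dependencies)]
    return "\n".join(parts)


ordered = order_files(deps)
sample = build_training_sample(deps, sources)
print(ordered)
```

With files arranged this way, a definition always appears earlier in the sample than the code that uses it, which is one plausible reason such ordering helps cross-file understanding.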

On January 30, 2024, the DeepSeek open platform was officially launched, and the DeepSeek large model API service entered testing. New registrants received 10 million free tokens. The interface is compatible with the OpenAI API, and both the Chat and Coder models are available. From this point, DeepSeek began to explore the path of a technology service provider in addition to technology research and development.

On February 5, 2024, DeepSeek released another vertical-domain model, DeepSeekMath, a mathematical reasoning model. It has only 7B parameters, but its mathematical reasoning ability is close to that of GPT-4. On the authoritative MATH benchmark, it stands out from the crowd and outperforms a number of open source models with parameter sizes between 30B and 70B. The release of DeepSeekMath demonstrates DeepSeek’s technical strength and forward-looking layout in the research and development of vertical-domain models.

On February 28, 2024, to further ease developers’ concerns about using DeepSeek’s open source models, DeepSeek released an open source policy FAQ, which gives detailed answers to frequently asked questions such as model licensing and restrictions on commercial use. DeepSeek embraces open source with a more transparent and open attitude.

On March 11, 2024, DeepSeek released the multimodal large model DeepSeek-VL. This was DeepSeek’s first attempt at multimodal AI technology. The model comes in 7B and 1.3B sizes, and the model and technical paper were open-sourced simultaneously.

On March 20, 2024, Huanfang AI & DeepSeek was once again invited to participate in the NVIDIA GTC 2024 conference, and founder Liang Wenfeng delivered a technical keynote speech entitled “Harmony in Diversity: Aligning and Decoupling the Values of Large Language Models”. Issues such as “the conflict between a single-value large model and a pluralistic society and culture,” “the decoupling of large model value alignment,” and “the multidimensional challenges of decoupled value alignment” were discussed. This demonstrated DeepSeek’s humanistic care and social responsibility for AI development, in addition to its technological research and development.

In March 2024, DeepSeek API officially launched paid services, which completely ignited the prelude to the price war in the Chinese large model market: 1 yuan per million input tokens and 2 yuan per million output tokens.

In 2024, DeepSeek successfully completed China’s regulatory filing for large models, clearing the policy obstacles to fully opening its API services.

In May 2024, DeepSeek-V2, an open source general-purpose MoE large model, was released, and the price war officially began. DeepSeek-V2 uses MLA (multi-head latent attention), which reduces the model’s memory footprint to 5%-13% of that of traditional MHA. It also uses the independently developed DeepSeekMoE sparse structure, which greatly reduces the model’s computational cost. Thanks to this, the model maintains an API price of “1 yuan per million input tokens and 2 yuan per million output tokens”.
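Why a sparse MoE structure cuts computation can be shown with a small sketch of generic top-k expert routing. This illustrates the general technique only, not the DeepSeekMoE design itself; the toy experts, the hard-coded gate scores, and the function names are all hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return [(i, probs[i] / kept) for i in top]


def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts and combine their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))


# Four toy "experts"; in a real model each would be a feed-forward network.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
# In a real model these scores come from a learned gate applied to the token.
gate_logits = [0.1, 2.0, 1.5, -1.0]

selected = route_top_k(gate_logits, k=2)
output = moe_forward(3.0, experts, gate_logits, k=2)
print([i for i, _ in selected])
```

Only two of the four experts are evaluated for this input, so the compute per token scales with `k` rather than with the total number of experts.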

DeepSeek has had a huge impact. In this regard, the lead analyst at SemiAnalysis believes that the DeepSeek V2 paper “may be one of the best this year.” Similarly, Andrew Carr, a former OpenAI employee, believes that the paper is “full of amazing wisdom” and has applied its training settings to his own model.

It should be noted that this is a model benchmarked against GPT-4-Turbo, and its API price is only 1/70 of the latter’s.

On June 17, 2024, DeepSeek made another big push, releasing the DeepSeek Coder V2 code model as open source and claiming that its coding capabilities surpassed GPT-4-Turbo, the most advanced closed-source model at the time. DeepSeek Coder V2 continues DeepSeek’s consistent open source strategy, with all models, code, and papers open-sourced, in two versions, 236B and 16B. DeepSeek Coder V2’s API service also went online, with the price remaining at “1 yuan per million input tokens and 2 yuan per million output tokens”.

On June 21, 2024, DeepSeek Coder added support for online code execution. On the same day, Claude 3.5 Sonnet was released with the new Artifacts feature, which automatically generates code and runs it directly in the browser, and the code assistant on the DeepSeek website launched the same capability: generate code and run it with one click.

Let’s review the major events of this period:

Continuous breakthroughs, attracting global attention

In May 2024, DeepSeek became famous overnight by releasing DeepSeek V2, an open source model based on MoE. It matched the performance of GPT-4-Turbo at a price of only 1 yuan per million input tokens, 1/70 of GPT-4-Turbo’s price. DeepSeek became known in the industry as the “price butcher”, and major players such as Zhipu, ByteDance, and Alibaba quickly followed suit and lowered their prices. It was also around that time that there was another round of GPT bans, and a large number of AI applications began to try out domestic models for the first time.

In July 2024, DeepSeek founder Liang Wenfeng gave another interview to Darksurge and responded directly to the price war: “Very unexpected. I didn’t expect the price to make everyone so sensitive. We just do things at our own pace and then price based on cost. Our principle is not to lose money or make exorbitant profits. This price is also slightly above cost with a little profit.”

It can be seen that, unlike many competitors who pay out of their own pockets to subsidize, DeepSeek is profitable at this price.

Some people may say that price cuts are a way of grabbing users, which is how price wars usually worked in the Internet era.

Liang Wenfeng responded to this as well: “Grabbing users is not our main goal. We lowered the price because, on the one hand, the cost has come down as we explore the structure of the next-generation model, and on the other hand, we feel that both the API and AI should be affordable and accessible to everyone.”

So the story continues with Liang Wenfeng’s idealism.

On July 4, 2024, the DeepSeek API launched 128K context with the price unchanged. The inference cost of a model is closely tied to context length, so many models place strict limits on it: the initial version of GPT-3.5 had only 4k of context.

With this update, DeepSeek increased the context length from the previous 32k to 128k while keeping the price unchanged (1 yuan per million input tokens and 2 yuan per million output tokens).

On July 10, 2024, the results of the world’s first AI Mathematical Olympiad (AIMO) were announced, and the DeepSeekMath model was the common choice of the top teams: the top 4 winning teams all chose DeepSeekMath-7B as the base of their entries and achieved impressive results.

On July 18, 2024, DeepSeek-V2 topped the list of open source models on the Chatbot Arena, surpassing star models such as Llama3-70B, Qwen2-72B, Nemotron-4-340B, and Gemma2-27B, and becoming a new benchmark for open source large models.

In July 2024, DeepSeek continued to recruit top talent from around the world in multiple fields, including AI algorithms, AI Infra, AI Tutor, and AI products, to prepare for future technological innovation and product development.

On July 26, 2024, the DeepSeek API received an important upgrade, adding full support for a series of advanced features: continuation (prefix completion), FIM (Fill-in-the-Middle) completion, Function Calling, and JSON Output. The FIM feature is particularly interesting: the user supplies the beginning and the end, and the model fills in the middle, which suits programming well, where the body of a function often has to be filled in between existing code. Take writing the Fibonacci sequence as an example:
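The following sketch shows the shape of a FIM exchange for the Fibonacci case. It makes no network call. The request field names (`prompt`, `suffix`, `max_tokens`) are an assumption modeled on OpenAI-style completion requests, and `middle` is a hand-written stand-in for what a model might return, not real model output.

```python
def build_fim_request(prefix, suffix, max_tokens=64):
    """Build an illustrative FIM request body (field names are assumptions)."""
    return {"prompt": prefix, "suffix": suffix, "max_tokens": max_tokens}


def assemble(prefix, middle, suffix):
    """Splice the generated middle between the user's beginning and end."""
    return prefix + middle + suffix


# The user supplies the beginning and the end of the function.
prefix = "def fib(n):\n    a, b = 0, 1\n"
suffix = "    return a\n"
request = build_fim_request(prefix, suffix)

# Hand-written stand-in for the model's completion of the missing loop.
middle = "    for _ in range(n):\n        a, b = b, a + b\n"

# Check that the assembled function actually runs.
code = assemble(prefix, middle, suffix)
namespace = {}
exec(code, namespace)
first_eight = [namespace["fib"](i) for i in range(8)]
print(first_eight)  # [0, 1, 1, 2, 3, 5, 8, 13]
```

The point of the format is that the model sees both sides of the gap, so the completion has to fit the code that follows it, not just the code that precedes it.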

On August 2, 2024, DeepSeek introduced hard disk caching, slashing API prices to rock bottom. Previously, the API price was already only ¥1 per million input tokens. Now, on a cache hit, the fee drops to ¥0.1 per million tokens.

This feature is very practical when continuous conversations and batch processing tasks are involved.
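A quick calculation shows how much the cache can save on a repetitive workload. The prices are the ones quoted in this article (¥1 per million input tokens on a miss, ¥0.1 on a hit, ¥2 per million output tokens). The token counts and the 80% hit share are made-up illustration values, and `api_cost_yuan` is a hypothetical helper, not part of any SDK.

```python
# Prices in yuan per million tokens, as quoted in the article.
PRICE_INPUT_MISS = 1.0
PRICE_INPUT_HIT = 0.1
PRICE_OUTPUT = 2.0


def api_cost_yuan(input_tokens, cached_input_tokens, output_tokens):
    """Estimate the bill when part of the input is served from the cache."""
    uncached = input_tokens - cached_input_tokens
    total = (
        uncached * PRICE_INPUT_MISS
        + cached_input_tokens * PRICE_INPUT_HIT
        + output_tokens * PRICE_OUTPUT
    )
    return total / 1_000_000


# Hypothetical batch job: 10M input tokens, 8M of them cache hits, 1M output tokens.
without_cache = api_cost_yuan(10_000_000, 0, 1_000_000)
with_cache = api_cost_yuan(10_000_000, 8_000_000, 1_000_000)
print(f"without cache: {without_cache:.2f} yuan")
print(f"with cache:    {with_cache:.2f} yuan")
```

Under these assumed numbers the bill falls from 12 yuan to about 4.8 yuan, which is why long multi-turn conversations and batch jobs that share a common prefix benefit the most.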

On August 16, 2024, DeepSeek released its mathematical theorem proving model DeepSeek-Prover-V1.5 as open source, which surpassed many well-known open source models in high school and college mathematical theorem proving tests.

On September 6, 2024, DeepSeek released the DeepSeek-V2.5 fusion model. Previously, DeepSeek mainly provided two models: the Chat model, focused on general conversation, and the Code model, focused on code. This time the two were merged into one and upgraded to DeepSeek-V2.5, which aligns better with human preferences and also improves significantly on writing tasks, instruction following, and other areas.

On September 18, 2024, DeepSeek-V2.5 was once again on the latest LMSYS list, leading the domestic models and setting new best scores for domestic models in multiple individual abilities.

On November 20, 2024, DeepSeek released DeepSeek-R1-Lite on its official website. This is a reasoning model comparable to o1-preview, and it also supplied a sufficient amount of synthetic data for the post-training of V3.

On December 10, 2024, the DeepSeek V2 series reached its finale with the release of the final fine-tuned version, DeepSeek-V2.5-1210. Through post-training, this version comprehensively improves multiple abilities, including mathematics, coding, writing, and role-playing.

With this version, the DeepSeek web app also gained web search.

On December 13, 2024, DeepSeek made another breakthrough in the field of multimodality and released the open source multimodal large model DeepSeek-VL2. DeepSeek-VL2 adopts the MoE architecture, which significantly improves its visual capabilities. It is available in three sizes: 3B, 16B, and 27B, and has an advantage in all metrics.

On December 26, 2024, DeepSeek-V3 was released as open source, with an estimated training cost of only 5.5 million US dollars. DeepSeek-V3 matched the performance of leading overseas closed source models and greatly improved generation speed.

The pricing of API services was adjusted, but at the same time, a 45-day preferential trial period was set for the new model.

On January 15, 2025, the official DeepSeek app was released and launched across the major iOS and Android app stores.

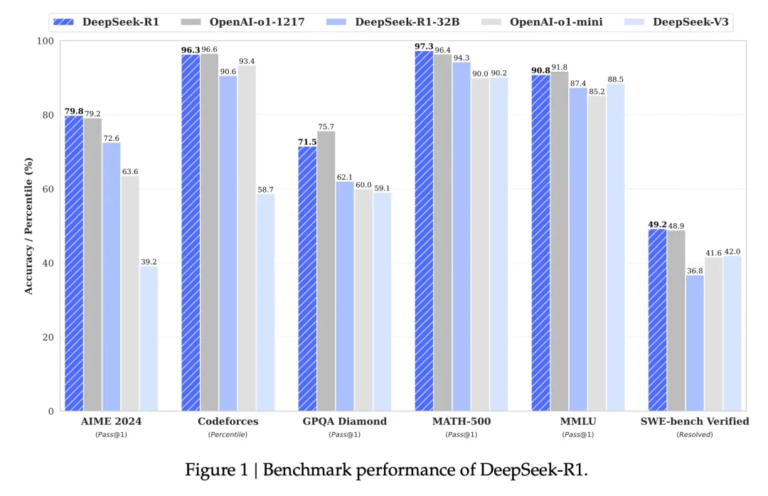

On January 20, 2025, close to the Chinese New Year, the DeepSeek-R1 reasoning model was officially released and open-sourced. DeepSeek-R1 matched the performance of the official OpenAI o1 release and exposed its chain-of-thought output. At the same time, DeepSeek announced that the model’s open source license would change to the MIT license and that the user agreement would explicitly allow “model distillation”, further embracing open source and promoting technology sharing.

Later, this model became very popular and ushered in a new era.

As a result, as of January 27, 2025, the DeepSeek App successfully surpassed ChatGPT and topped the free app download list on the US iOS App Store, becoming a phenomenal AI app.

On January 27, 2025, at 1:00 a.m. on New Year’s Eve, DeepSeek Janus-Pro was released as open source. This is a multimodal model named after the two-faced god Janus in ancient Roman mythology: it faces both the past and the future. This also represents the model’s two abilities—visual understanding and image generation—and its domination of multiple rankings.

DeepSeek’s explosive popularity immediately triggered a global technology shockwave, even directly causing NVIDIA’s stock price to plummet 18%, and the market value of the global technology stock market to evaporate by about 1 trillion U.S. dollars. Wall Street and technology media exclaimed that DeepSeek’s rise is subverting the global AI industry landscape and posing an unprecedented challenge to American technology giants.

DeepSeek’s success has also triggered high international attention and heated discussions about China’s AI technological innovation capabilities. US President Donald Trump, in a rare public comment, praised the rise of DeepSeek as “positive” and said it was a “wake-up call” for the United States. Microsoft CEO Satya Nadella and OpenAI CEO Sam Altman also praised DeepSeek, calling its technology “very impressive.”

Of course, we must also understand that their praise is partly a recognition of DeepSeek’s strength, and partly a reflection of their own motives. For example, while Anthropic recognizes DeepSeek’s achievements, it is also calling on the US government to strengthen chip controls on China.

Anthropic CEO publishes a 10,000-word article: DeepSeek’s rise means the White House should step up controls

Summary and outlook

Looking back on DeepSeek’s past two years, it has truly been a “Chinese miracle”: from an unknown startup to the “mysterious Eastern power” that is now shining on the global AI stage, DeepSeek has written one “impossible” after another with its strength and innovation.

The deeper meaning of this technological expedition has long since transcended the scope of commercial competition. DeepSeek has announced with facts that in the strategic field of artificial intelligence that concerns the future, Chinese companies are fully capable of climbing to the heights of core technology.

The “wake-up call” sounded by Trump and the hidden fear of Anthropic confirm the importance of China’s AI capabilities: not only can it ride the waves, it is also reshaping the direction of the tide.

DeepSeek product release milestones

- November 2, 2023: DeepSeek Coder Large Model

- November 29, 2023: DeepSeek LLM 67B Universal Model

- December 18, 2023: DreamCraft3D 3D model

- January 11, 2024: DeepSeekMoE MoE large model

- February 5, 2024: DeepSeekMath Mathematical reasoning model

- March 11, 2024: DeepSeek-VL Multimodal large model

- May 2024: DeepSeek-V2 MoE general model

- June 17, 2024: DeepSeek Coder V2 code model

- September 6, 2024: DeepSeek-V2.5 fusion of general and code competency models

- December 13, 2024: DeepSeek-VL2 multimodal MoE model

- December 26, 2024: DeepSeek-V3 new series of general-purpose large models

- January 15, 2025: DeepSeek official App (iOS & Android)

- January 20, 2025: DeepSeek-R1 reasoning model

- January 27, 2025: DeepSeek Janus-Pro multimodal model