A 32B reasoning model trained on only 1/8 of the data ties the DeepSeek-R1 distilled model of the same size!

Researchers from Stanford, UC Berkeley, the University of Washington, and other institutions have jointly released OpenThinker-32B, a SOTA-level reasoning model, and have also open-sourced its 114k training examples.

The team's finding: a SOTA reasoning model can be trained on a large-scale, high-quality dataset whose annotations are generated by DeepSeek-R1 and then verified (in other words, data distilled from R1).

The recipe is to scale the data, verify the reasoning process, and scale the model.

The resulting OpenThinker-32B outperforms Li Fei-Fei’s s1 and s1.1 models on multiple math, coding, and science benchmarks, and comes close to R1-Distill-32B.

Notably, R1-Distill-32B was trained on 800k examples (including 600k reasoning samples), whereas OpenThinker-32B reaches almost the same results with only 114k.

In addition, the OpenThinker-32B release makes all model weights, datasets, data generation code, and training code public!

Data curation

The researchers trained OpenThinker-32B on OpenThoughts-114k, the same dataset they had previously used to train OpenThinker-7B.

They used DeepSeek-R1 to collect reasoning traces and answer attempts for a carefully selected set of 173,000 questions. This raw data was then published as the OpenThoughts-Unverified-173k dataset.

The final step of the pipeline filters out every sample whose reasoning process fails verification.

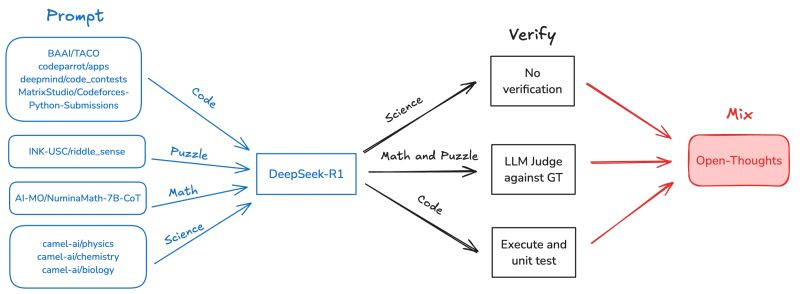

The following figure visually displays the entire process.

The research team starts from source data or question prompts drawn from different fields and platforms, such as BAAI/TACO, DeepMind, and Python submissions, covering code, puzzles, science, and mathematics.

These diverse inputs are then passed to the core processing module, DeepSeek-R1, which produces a reasoning trace and an answer attempt for each one. The questions fall into three categories: science questions, math and puzzles, and code.

Some results need no verification and are passed through directly. For content that does require verification, a large language model (LLM) judges the answer by comparing it against the ground truth (GT). For code, the solution is executed against unit tests to confirm that it is correct.
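The routing described above can be sketched as a small dispatch table. This is only an illustration, not the team's pipeline: the `curate` function, the `verifiers` mapping, the toy checkers, and the choice of which domain skips verification are all assumptions made for the example. The field names are borrowed from the dataset's metadata columns.

```python
from typing import Callable, Dict, Iterable, List

Sample = Dict[str, str]
Verifier = Callable[[Sample], bool]


def curate(samples: Iterable[Sample], verifiers: Dict[str, Verifier]) -> List[Sample]:
    """Keep samples that pass the verifier registered for their domain.

    Domains without a registered verifier are kept unchanged (assumption:
    this models the "no verification required" branch).
    """
    kept = []
    for sample in samples:
        verifier = verifiers.get(sample["domain"])
        if verifier is None or verifier(sample):
            kept.append(sample)
    return kept


# Hypothetical stand-ins for an LLM judge and a unit-test runner.
def toy_math_judge(sample: Sample) -> bool:
    return sample["DeepSeek_solution"].strip() == sample["ground_truth_solution"].strip()


def toy_code_check(sample: Sample) -> bool:
    # Placeholder rule only; a real check would execute the code against tests.
    return "def " in sample["DeepSeek_solution"]


samples = [
    {"domain": "science", "DeepSeek_solution": "H2O", "ground_truth_solution": "H2O"},
    {"domain": "math", "DeepSeek_solution": "42", "ground_truth_solution": "42"},
    {"domain": "math", "DeepSeek_solution": "41", "ground_truth_solution": "42"},
    {"domain": "code", "DeepSeek_solution": "print(1)", "ground_truth_solution": ""},
]
kept = curate(samples, {"math": toy_math_judge, "code": toy_code_check})
print([s["domain"] for s in kept])
```

Passing the verifiers in as plain callables keeps the filter itself independent of how each domain is checked, so a stricter or looser checker can be swapped in without touching the loop.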

Finally, the outputs of the different branches are combined to form the OpenThoughts dataset.

The research team has updated the final OpenThoughts-114k dataset with a configuration called “metadata” that contains some additional columns used to construct the dataset:

- problem

- ground_truth_solution

- test_cases (code only)

- starter_code (code only)

- DeepSeek_reasoning

- DeepSeek_solution

- domain

- source

These additional metadata columns make it easier to use the dataset in new scenarios, such as data filtering, domain switching, verification checks, and changing the reasoning trace template. Loading them takes a single call:

```python
from datasets import load_dataset
load_dataset("open-thoughts/OpenThoughts-114k", "metadata", split="train")
```
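As a rough sketch of the uses mentioned above (filtering by domain and changing the reasoning template), the helpers below work on plain dictionaries that carry the listed column names. The helper names, the `<think>` template, and the domain values `"math"` and `"code"` are assumptions for illustration; the actual values stored in the `domain` column should be checked against the dataset.

```python
from collections import Counter
from typing import Dict, Iterable, List

Row = Dict[str, str]


def load_metadata_split():
    """Load the "metadata" configuration (needs `pip install datasets` and network access)."""
    from datasets import load_dataset  # imported lazily so the helpers below work offline

    return load_dataset("open-thoughts/OpenThoughts-114k", "metadata", split="train")


def count_by_domain(rows: Iterable[Row]) -> Counter:
    """Count rows per value of the `domain` column."""
    return Counter(row["domain"] for row in rows)


def keep_domain(rows: Iterable[Row], domain: str) -> List[Row]:
    """Return only the rows whose `domain` column equals `domain`."""
    return [row for row in rows if row["domain"] == domain]


def render(row: Row, template: str) -> str:
    """Re-format one row with a custom reasoning template."""
    return template.format(
        problem=row["problem"],
        reasoning=row["DeepSeek_reasoning"],
        solution=row["DeepSeek_solution"],
    )


# Toy rows standing in for the real dataset; the domain values are assumed.
rows = [
    {"problem": "2+2?", "DeepSeek_reasoning": "Add.", "DeepSeek_solution": "4", "domain": "math"},
    {"problem": "Reverse a list.", "DeepSeek_reasoning": "Slice.", "DeepSeek_solution": "xs[::-1]", "domain": "code"},
]
template = "Q: {problem}\n<think>{reasoning}</think>\nA: {solution}"
rendered = render(keep_domain(rows, "math")[0], template)
print(rendered)
```

On the full split, the library's own `Dataset.filter` method is likely the more efficient way to apply the same predicate.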

The research team says it looks forward to seeing the community use these questions and reference answers for reinforcement learning (RL) research on the OpenThinker models. DeepScaleR has already shown that this approach works particularly well at smaller scales.

Verification

To arrive at the final OpenThoughts-114k dataset, the research team verified the answers and eliminated incorrect responses.

As shown in the table below, keeping reasoning traces that fail verification hurts performance, although the unverified model still performs well compared with other 32B reasoning models.

The role of verification is to maintain the quality of R1 annotations while expanding the diversity and size of the training prompt set. On the other hand, unverified data can be expanded more easily and is therefore also worth exploring further.

For code problems, the team verifies the reasoning process by checking answer attempts against existing test cases.

Prompted by the difficulties encountered when executing code, the team implemented a code execution framework in Curator that lets users run code safely at scale and verify it against the expected output.
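The idea of checking an answer attempt against test cases can be sketched with the standard library alone. This is not Curator's API: the `passes_tests` function and the `(stdin, expected_stdout)` test-case format are assumptions for illustration, and a plain subprocess offers no real isolation.

```python
import subprocess
import sys
from typing import List, Tuple


def passes_tests(source: str, cases: List[Tuple[str, str]], timeout_s: float = 5.0) -> bool:
    """Run `source` once per (stdin, expected_stdout) pair and compare outputs.

    NOTE: a plain subprocess is NOT a sandbox; untrusted code needs real isolation.
    """
    for stdin_text, expected in cases:
        try:
            result = subprocess.run(
                [sys.executable, "-c", source],
                input=stdin_text,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False  # treat a hang as a failed verification
        if result.returncode != 0 or result.stdout.strip() != expected.strip():
            return False
    return True


# Two toy answer attempts for "print the sum of the integers on stdin".
good = "print(sum(int(x) for x in input().split()))"
bad = "print(0)"
cases = [("1 2 3\n", "6"), ("10 20\n", "30")]
print(passes_tests(good, cases), passes_tests(bad, cases))
```

A sample would be kept only when every test case passes; crashes and timeouts count as failures.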

For mathematical problems, the research team used an LLM (large language model) judge for verification, which receives both the reference answer and DeepSeek-R1's solution attempt.

They found that using the LLM judge during data generation, instead of the stricter parsing engine (Math-Verify), yields more usable data and produces better-performing downstream models.
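The difference between a strict check and an LLM judge can be illustrated with a small sketch. Nothing here is the team's prompt or Math-Verify's API: the prompt wording, the `ask_llm` callable, and the offline `fake_llm` stub are hypothetical, and the exact-match function is only a crude stand-in for a strict parser.

```python
from typing import Callable

JUDGE_TEMPLATE = (
    "You are grading a math answer.\n"
    "Reference answer:\n{reference}\n\n"
    "Candidate solution:\n{candidate}\n\n"
    "Reply with exactly one word: CORRECT or INCORRECT."
)


def strict_match(reference: str, candidate: str) -> bool:
    """Exact string comparison: a crude stand-in for a strict parser."""
    return reference.strip() == candidate.strip()


def judge_match(reference: str, candidate: str, ask_llm: Callable[[str], str]) -> bool:
    """Ask a judge model whether the candidate agrees with the reference."""
    prompt = JUDGE_TEMPLATE.format(reference=reference, candidate=candidate)
    verdict = ask_llm(prompt).strip().upper()
    return verdict.startswith("CORRECT")


def fake_llm(prompt: str) -> str:
    """Offline stub: treats 1/2 and 0.5 as equal, purely for demonstration."""
    return "CORRECT" if "1/2" in prompt and "0.5" in prompt else "INCORRECT"


strict_result = strict_match("1/2", "The answer is 0.5")
judge_result = judge_match("1/2", "The answer is 0.5", fake_llm)
print(strict_result, judge_result)
```

In this toy case the strict check rejects an answer that the judge accepts, which is the kind of sample a more lenient verifier keeps in the training set.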

Training

The research team used LLaMA-Factory to fine-tune Qwen2.5-32B-Instruct on the OpenThoughts-114k dataset for three epochs with a context length of 16k. The complete training configuration can be found on GitHub.

OpenThinker-32B was trained for 90 hours using four 8xH100 P5 nodes on an AWS SageMaker cluster, for a total of 2,880 H100-hours.

Meanwhile, OpenThinker-32B-Unverified trained for 30 hours on the Leonardo supercomputer using 96 4xA100 nodes (64GB per GPU), accumulating 11,520 A100 hours.
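Both totals follow from multiplying node count, GPUs per node, and wall-clock hours. The short check below uses only the figures quoted above; the function name is illustrative.

```python
def gpu_hours(nodes: int, gpus_per_node: int, wall_hours: int) -> int:
    """Total accelerator-hours = nodes x GPUs per node x wall-clock hours."""
    return nodes * gpus_per_node * wall_hours


verified = gpu_hours(nodes=4, gpus_per_node=8, wall_hours=90)     # H100 run
unverified = gpu_hours(nodes=96, gpus_per_node=4, wall_hours=30)  # A100 run
print(verified, unverified)
```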

Evaluation

The research team used the open source evaluation library Evalchemy to evaluate all models.

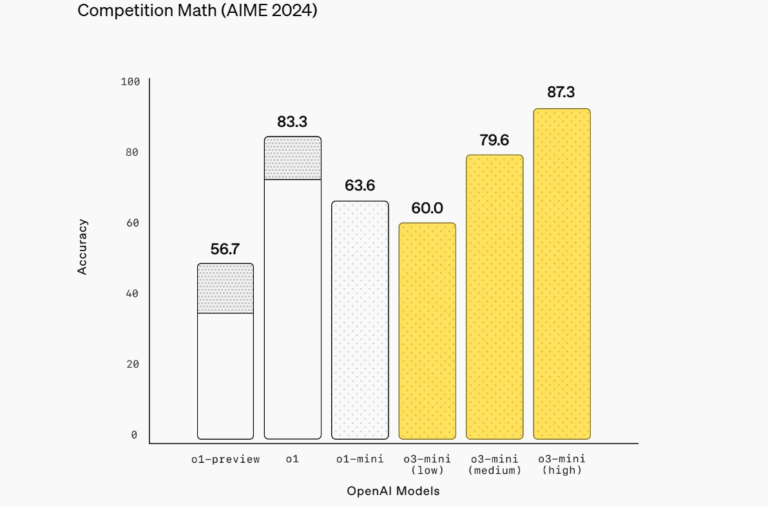

For AIME24 and AIME25, they computed accuracy by averaging the results of five runs. The evaluation configuration used a temperature of 0.7, limited model responses to 32,768 tokens, added no extra system or user prompts, and used no special decoding strategies (such as budget forcing).
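The averaging step can be sketched as follows. This is not Evalchemy's code: the dictionary keys, function names, and the toy correctness flags are assumptions for illustration, and only the three setting values come from the text.

```python
from statistics import mean
from typing import List, Sequence

# Settings quoted in the text; the dictionary keys are illustrative names.
EVAL_SETTINGS = {"temperature": 0.7, "max_new_tokens": 32768, "num_runs": 5}


def run_accuracy(correct_flags: Sequence[bool]) -> float:
    """Fraction of problems answered correctly in a single run."""
    return sum(correct_flags) / len(correct_flags)


def averaged_accuracy(runs: List[Sequence[bool]]) -> float:
    """Mean of the per-run accuracies."""
    return mean(run_accuracy(run) for run in runs)


# Made-up results: five runs over four problems each.
toy_runs = [
    [True, True, False, False],
    [True, True, True, False],
    [True, False, False, False],
    [True, True, False, False],
    [True, True, False, False],
]
score = averaged_accuracy(toy_runs)
print(score)
```

Averaging over several sampled runs reduces the variance that a temperature of 0.7 introduces on small benchmarks such as AIME.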

When the OpenThoughts project was launched, the team set the goal of creating an open-data model whose performance could match DeepSeek-R1-Distill-Qwen-32B.

Now that gap has almost been eliminated.

Finally, the research team is excited by the rapid progress the community has made on open-data reasoning models over the past few weeks, and looks forward to continuing to build on one another’s insights.

The open-source release of OpenThinker-32B demonstrates that the synergy between data, verification, and model size is key to improving reasoning capabilities.

This result not only advances the development of open-source reasoning models, but also provides valuable resources and inspiration for the entire AI community.