The high cost of running large AI models is a major reason many AI applications have not yet been widely deployed. Pursuing peak performance requires enormous computing power, and the resulting usage costs are more than ordinary users will accept.

The competition among large AI models is a war without smoke. After DeepSeek released and open-sourced its latest R1 model, OpenAI responded under pressure by releasing its own latest model, o3-mini. Google, another major player in large models, has now had to join the fierce competition over low-cost models as well.

Google’s new move: new members of the Gemini series unveiled

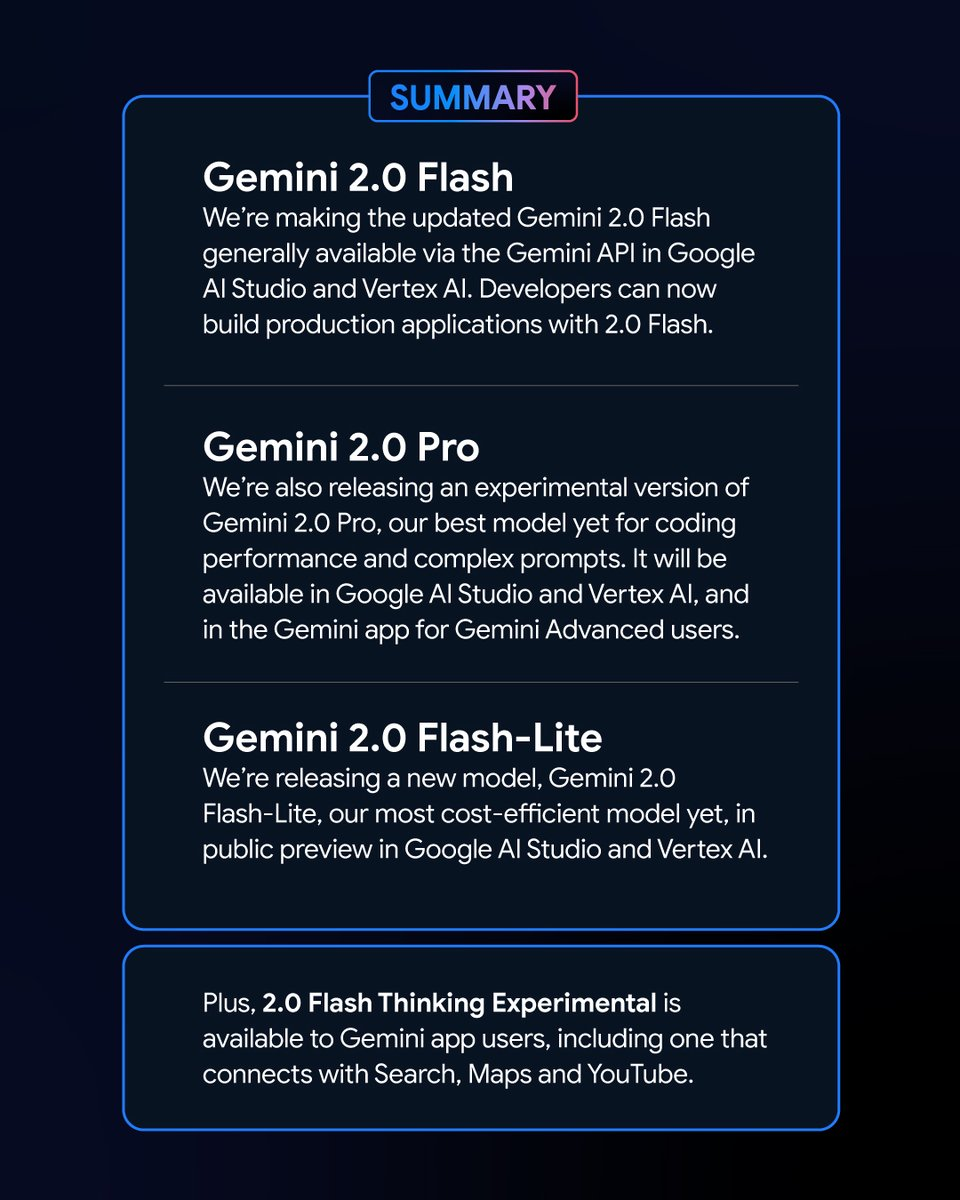

In the early morning of February 6, Google launched a series of new versions of the Gemini model. The experimental version of Gemini 2.0 Pro and the preview version of Gemini 2.0 Flash-Lite attracted the most attention, and the latest version of Gemini 2.0 Flash was officially released.

Gemini 2.0 Flash-Lite, a new variant, is priced at only 0.3 USD per million tokens of text output, making it Google’s most affordable model to date.

The experimental version of Gemini 2.0 Pro, on the other hand, has powerful native multimodal capabilities and can convert between text, audio, and video.

The experimental version of Gemini 2.0 Flash Thinking is free to use and can access, extract, and summarize the content of YouTube videos.

Logan Kilpatrick, head of Google AI Studio products, announced on the X platform that these models are “the most powerful models in Google’s history” and are available to all developers.

The new Gemini models top the leaderboard

On the Chatbot Arena large model leaderboard, the experimental versions of Gemini 2.0 Flash Thinking and Gemini 2.0 Pro both achieved outstanding results. Gemini 2.0 is a clear step up from Google’s previous large models: the two versions reached the top of the leaderboard, with overall scores surpassing ChatGPT-4o and DeepSeek-R1.

This result is based on a comprehensive evaluation of the capabilities of large models in various areas, including mathematics, coding, and multilingual processing.

Price and performance: each variant of Gemini 2.0 has its own advantages

Each version of Gemini 2.0 strikes its own balance between price and performance, which gives users more choices. The APIs of all versions can be called through Google AI Studio and Vertex AI, so developers and users can pick the version that fits their needs.

All versions of Gemini 2.0 improve on Gemini 1.5 overall, although they differ from one another. To choose the right model, first determine the scenario you are building for.

In terms of price, Gemini 2.0 Flash and Gemini 2.0 Flash-Lite focus on lightweight deployment. Both support a context window of up to 1 million tokens. Their pricing also drops the distinction Gemini 1.5 Flash made between long and short text processing, and charges a single per-token price instead.

Gemini 2.0 Flash costs 0.4 USD per million tokens for text output, which is half the price of Gemini 1.5 Flash when processing long texts.

Gemini 2.0 Flash-Lite is even more cost-efficient in large-scale text output scenarios, with text output priced at 0.3 USD per million tokens. Even Google CEO Sundar Pichai praised it as “efficient and powerful”.
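To make the gap between the two output prices concrete, here is a minimal sketch that uses only the figures quoted above (0.4 and 0.3 USD per million output tokens). The model keys and function names are illustrative assumptions, not identifiers from any Google SDK, and actual billing may differ from list prices.

```python
# Text-output prices in USD per million tokens, as quoted in this article.
# The dictionary keys are illustrative labels, not official API model IDs.
OUTPUT_PRICE_PER_MILLION = {
    "gemini-2.0-flash": 0.40,
    "gemini-2.0-flash-lite": 0.30,
}


def output_cost_usd(model: str, output_tokens: int) -> float:
    """Return the text-output cost in USD for a given number of output tokens."""
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    return OUTPUT_PRICE_PER_MILLION[model] * output_tokens / 1_000_000


def lite_savings_usd(output_tokens: int) -> float:
    """Return how much cheaper Flash-Lite is than Flash for the same output volume."""
    flash = output_cost_usd("gemini-2.0-flash", output_tokens)
    lite = output_cost_usd("gemini-2.0-flash-lite", output_tokens)
    return flash - lite


if __name__ == "__main__":
    tokens = 10_000_000  # a hypothetical monthly output volume
    print(f"Flash:      {output_cost_usd('gemini-2.0-flash', tokens):.2f} USD")
    print(f"Flash-Lite: {output_cost_usd('gemini-2.0-flash-lite', tokens):.2f} USD")
    print(f"Savings:    {lite_savings_usd(tokens):.2f} USD")
```

At these list prices, Flash-Lite saves 0.1 USD per million output tokens, so the difference only becomes significant at large output volumes.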

In terms of performance, Gemini 2.0 Flash offers more comprehensive multimodal interaction than the Lite version. It is scheduled to support image output, as well as bidirectional, real-time, low-latency input and output across modalities such as text, audio, and video.

The experimental version of Gemini 2.0 Pro excels at coding and at handling complex prompts. Its context window reaches up to 2 million tokens, and its general capability score rose from 75.8% to 79.1% compared to the previous generation, which sets it clearly apart from Gemini 2.0 Flash and Gemini 2.0 Flash-Lite in coding and reasoning ability.

The Gemini app team said on the X platform that Gemini Advanced users can access the Gemini 2.0 Pro experimental version through the model drop-down menu. The Gemini 2.0 Flash Thinking experimental version is free for Gemini app users and can be used together with YouTube, Google Search, and Google Maps.

Countering the competition: Google’s bid on cost-effectiveness

At a time when the cost of model development has become a hot topic in the industry, the launch of the open-source, low-cost, high-performance DeepSeek-R1 has shaken the entire industry.

During the conference call after the release of Google’s fourth quarter 2024 financial report, Pichai, while acknowledging the achievements of DeepSeek, also emphasized that the Gemini series of models are leading in the balance between cost, performance, and latency, and that their overall performance is better than that of DeepSeek’s V3 and R1 models.

On LiveBench, the large model performance benchmark built by Yann LeCun and his team, Gemini 2.0 Flash ranks higher overall than DeepSeek V3 and OpenAI’s o1-mini, but behind DeepSeek-R1 and OpenAI’s o1. Gemini 2.0 Flash-Lite, however, is Google’s trump card. Google hopes to make its latest large models affordable for more people by lowering usage costs, and to secure a place in the industry’s competition on price/performance.

After Google released Gemini 2.0, one netizen ran his own comparison of Gemini 2.0 Flash against the popular DeepSeek and OpenAI GPT-4o models. He found that the new Gemini 2.0 Flash outperforms the other two in both performance and cost, an early sign of how far Google’s models have come.

Specifically, Gemini 2.0 Flash costs 0.1 USD per million tokens for input and 0.4 USD per million tokens for output, both far lower than DeepSeek V3. The netizen also pointed out on the X platform: “The official version of Gemini 2.0 Flash costs one-third of GPT-4o-mini, while it is three times as fast.”
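As a rough illustration of what these per-million-token prices mean for a single request, the sketch below combines the quoted input and output prices for Gemini 2.0 Flash. The `Pricing` class, the constant, and the function name are hypothetical helpers introduced here for illustration, and the token counts in the example are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Prices in USD per million tokens."""
    input_per_million: float
    output_per_million: float


# Figures quoted in this article for Gemini 2.0 Flash.
GEMINI_2_0_FLASH = Pricing(input_per_million=0.10, output_per_million=0.40)


def request_cost_usd(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of one request at the given list prices."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    )
    return total / 1_000_000


if __name__ == "__main__":
    # A hypothetical request: 2,000 prompt tokens and 500 generated tokens.
    cost = request_cost_usd(GEMINI_2_0_FLASH, input_tokens=2_000, output_tokens=500)
    print(f"Estimated cost: {cost:.6f} USD")
```

For the example request, input and output each contribute about 0.0002 USD, so the total is roughly 0.0004 USD. Output tokens cost four times as much as input tokens at these prices, so generation-heavy workloads dominate the bill.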

A new trend in the large model market: value for money is king

Today, the large model field is caught in a new price war. In the past, the high cost of using large models held back their adoption. The price war triggered by DeepSeek is still rippling through the overseas large model market. At the same time, open-source releases have let more users understand and use the latest large model research. This combination of open source and low prices has put pressure on many American large model companies.

Google launched Gemini 2.0 Flash-Lite, and OpenAI made the ChatGPT search function freely available to all users, so that they can use search to complete more diverse tasks. Meta’s internal teams are also stepping up research on price reduction strategies for large models while continuing to develop Meta’s open-source large models.

In this highly competitive field, no company can sit comfortably in the number one spot. Companies are trying to attract and retain users by improving cost-effectiveness. This trend will help large models move from pure technology development to wider application, and the large model market will keep evolving as companies compete on cost-effectiveness.